But as mentioned ... you learned Big O notation (https://en.wikipedia.org/wiki/Big_O_notation) in your first or second semester of computer science.

You learned that if a result is 0.12 * 10^2, hardware only changes the coefficient ... so it can turn it into 1.24 * 10^2 or 0.03 * 10^2.

The exponent of the 10^2 changes very, very little. A speedup by a factor of 10, 100 or 1000 is very hard to achieve with hardware alone.

Usually a professor also teaches NP problems. NP-hard problems can't be solved in reasonable time even with the "fastest" hardware.

I am aware, and yes, that is correct - but Big O notation, as I mentioned in my previous reply, does not tell you anything meaningful in our case.

To quote from the same article you linked:

"Big O notation characterizes functions according to their growth rates: different functions with the same asymptotic growth rate may be represented using the same O notation."

So yes, while hardware "just changes the coefficient" and is thus not "important" for classifying the order of whatever algorithm is being used, that "coefficient" nevertheless has a very meaningful impact on real-world applications.
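To make the coefficient argument concrete, here is a minimal worked example, assuming a simple cost model in which each implementation's running time is a constant $c$ times the same growth function $f(n)$:

$$
T_1(n) = c_1 f(n), \quad T_2(n) = c_2 f(n) \;\Rightarrow\; T_1, T_2 \in O(f(n)), \quad \frac{T_1(n)}{T_2(n)} = \frac{c_1}{c_2}
$$

For instance, with purely illustrative (not measured) per-write costs of $c_1 = 8\,\text{ms}$ and $c_2 = 0.08\,\text{ms}$ and $f(n) = n$, both implementations are $O(n)$, yet $n = 10^4$ writes take $T_1 = 80\,\text{s}$ versus $T_2 = 0.8\,\text{s}$: a factor of 100 that Big O notation deliberately hides.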

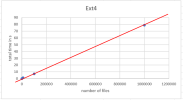

Moreover, you cannot just categorize filesystems by Big O (it's certainly possible, but it is damn hard) - the algorithms SMB or NFS use could very well be of the same order as those that ext4 uses. Those filesystems are slow because they usually have to wait for other things to happen first. NFS, for example, usually has to wait until all writes have been committed to disk. As far as I know, the NFS server still runs with `sync` by default (correct me if I'm wrong), so all writes are synchronous rather than asynchronous. This makes a HUGE difference if you're on HDDs. Conversely, if you use something like a SLOG on ZFS, you can actually improve the speed of NFS significantly.

If you really want to see how fast NFS performs, have that test machine of yours act as the server instead of creating a localhost-to-localhost connection. Use a decently fast client machine so you can rule out any slowdowns caused by the client, and a decently fast, low-latency connection so you can rule out bandwidth and latency issues. If you want, test client `sync` / `async` and server `sync` / `async` as well (so four additional tests). This separation also lets you rule out any interference caused by running your tests, or by whatever else is going on on your host system. In other words, removing the coefficients, as you say.

So I agree that your overall conclusion is correct - NFS and SMB should be avoided if one can afford to choose something else - but while I appreciate your efforts, your application of the theory you're referencing is incorrect.
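The four client/server `sync` / `async` combinations suggested above could be laid out along these lines. This is a minimal sketch, not a benchmark harness: it only builds one `/etc/exports` line and one client `mount` command per combination. The export path, client network, server hostname and mount point are hypothetical placeholders to adapt to your own setup.

```python
from itertools import product

# Hypothetical placeholders: adjust to your own export path, network and hosts.
EXPORT_PATH = "/srv/nfs-test"
CLIENT_NET = "192.168.1.0/24"
SERVER = "nfs-server"
MOUNT_POINT = "/mnt/nfs-test"


def test_matrix():
    """Return (server_mode, client_mode, exports_line, mount_cmd) for all four combinations."""
    configs = []
    for server_mode, client_mode in product(("sync", "async"), repeat=2):
        # Server side: a line for /etc/exports using the sync/async export option.
        export_line = f"{EXPORT_PATH} {CLIENT_NET}(rw,{server_mode},no_subtree_check)"
        # Client side: the generic sync/async mount option passed to mount -o.
        mount_cmd = f"mount -t nfs -o {client_mode} {SERVER}:{EXPORT_PATH} {MOUNT_POINT}"
        configs.append((server_mode, client_mode, export_line, mount_cmd))
    return configs


if __name__ == "__main__":
    for server_mode, client_mode, export_line, mount_cmd in test_matrix():
        print(f"server={server_mode:5} client={client_mode:5}")
        print(f"  /etc/exports: {export_line}")
        print(f"  client:       {mount_cmd}")
```

After each server-side change, re-export with `exportfs -ra`, remount on the client, and run the identical workload for every combination so that only the sync mode differs between runs.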