note that i know that benchmarking storage is hard and error prone, but that has to be correctly done to produce results that are usable:

i looked over the code and the results a bit and a few things stand out that might not be showing the full or correct picture:

about the methodology:

* i'd use 'fio' as a base benchmark for disks (not only because i find bonnie++ output confusing, but also because it does not really show the random read/write IOPS performance for e.g. 4k blocks, and i don't really know how bonnie behaves with cache)

Please read the test carefully. We are not benchmarking the "disk io", that is just additional information. It's not about the speed of the disk, it's about the speed of the filesystem.

* you only let the benchmark run for one round. in general, but especially for benchmarking storage, i'd recommend using at least 3-5 passes for each test, so you can weed out outliers (with one pass you don't know if any of them is an outlier)

What would you gain from multiple passes? The local filesystem needs 1.2 seconds (for 65536 directories), while samba needs 18 minutes, NFS 40 seconds and sshfs 20 seconds. Seeing is believing. Even if a 3rd or 5th pass showed a 10% variance, what do you think would change?
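For anyone who wants to check how large the run-to-run variance really is, repeated passes could be wrapped roughly like this. It is only a sketch and not part of the benchmark; `time_passes`, `run` and `before_each` are made-up names, and `before_each` is where the cache dropping would go.

```python
import statistics
import time
from typing import Callable, Dict


def time_passes(
    run: Callable[[], None],
    passes: int = 5,
    before_each: Callable[[], None] = lambda: None,
) -> Dict[str, float]:
    """Run `run` several times and summarise the wall-clock timings."""
    if passes < 1:
        raise ValueError("passes must be at least 1")
    samples = []
    for _ in range(passes):
        before_each()  # hypothetical hook, e.g. drop caches before each pass
        start = time.perf_counter()
        run()
        samples.append(time.perf_counter() - start)
    median = statistics.median(samples)
    # Spread between slowest and fastest pass, relative to the median.
    spread_pct = (max(samples) - min(samples)) / median * 100 if median > 0 else 0.0
    return {
        "min": min(samples),
        "median": median,
        "max": max(samples),
        "spread_pct": spread_pct,
    }
```

A spread of a few percent would not change any of the conclusions here; a spread of 10x between passes would.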

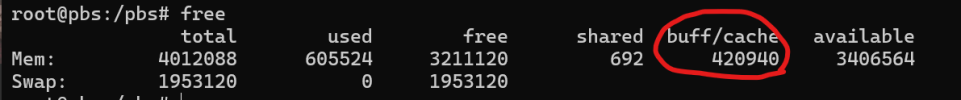

Caches are eliminated in every pass. We are not benchmarking the ram. (echo "1" .... "3" > /proc/sys/vm/drop_caches)
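In Python the cache dropping looks roughly like the sketch below. The function name and the `path` parameter are only for illustration (the parameter exists so the function can be tried against a scratch file); on a real system it needs root.

```python
import os


def drop_caches(level: int = 3, path: str = "/proc/sys/vm/drop_caches") -> None:
    """Flush dirty pages, then ask the kernel to drop its clean caches.

    level 1 = page cache, 2 = dentries and inodes, 3 = both.
    """
    if level not in (1, 2, 3):
        raise ValueError("level must be 1, 2 or 3")
    # Dirty pages cannot be dropped, so write them out first.
    os.sync()
    with open(path, "w") as fh:
        fh.write(f"{level}\n")
```

This only covers the kernel page cache and the dentry/inode caches, it does not touch any cache inside the drive or on the remote side.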

| target dir | sha256_name_generation | create_buckets |
| --- | --- | --- |
| . | 0.25s | 1.19s |
| /nfs | 0.27s | 38.14s |
| /smb | 0.30s | 1129.43s |
| /sshfs | 0.30s | 19.50s |
| /iscsi | 0.26s | 1.87s |
| /ntfs | 0.24s | 1.99s |
| /loopback-on-nfs | 0.25s | 1.50s |

* your 'drop_caches' is correct for "normal" linux filesystems, but not for zfs since it has its own ARC (whose size would also be interesting). basically you'd have to limit the ARC to something very small to properly benchmark it (also there is an extra option for zfs cache dropping)

* you have an error in the 'find_all_files' function, you list each chunk bucket folder for every file in it, so for 500.000 files you list each of the ~65000 folders about ~7-8 times (Wrong, see below)

With all due respect, that is totally irrelevant. ext4 and zfs are practically equal (line 1 and line 2). I don't care whether 8-year-old hardware needs 60 sec or 80 sec to create 500.000 random files in 65500 buckets. That's irrelevant.

NFS and SMB need about 2 hours for the same operation. That is crazy. Only sshfs is near something that I would accept if I am forced to pick a remote fs.

| target dir | filesystem detected by stat(1) | create_buckets | create_random_files | read_file_content_by_id | stat_file_by_id | find_all_files |
| --- | --- | --- | --- | --- | --- | --- |
| . | ext2/ext3 | 6.39s | 67.58s | 24.99s | 10.98s | 42.25s |
| /zfs | zfs | 2.43s | 85.24s | 24.86s | 16.52s | 76.11s |
| /nfs | nfs | 496.37s | 7256.65s | 97.60s | 59.51s | 189.97s |
| /smb | smb2 | 3455.01s | 6120.51s | 171.50s | 169.93s | 1023.71s |
| /sshfs | fuseblk | 170.25s | 2434.56s | 258.87s | 183.00s | 1469.04s |
| /iscsi | ext2/ext3 | 8.01s | 132.06s | 49.01s | 23.55s | 55.53s |

* the benchmark parameters of the filesystems/mounts would be nice, which options did you use (if any), how did you create the filesystem, etc. especially for zfs that would be interesting because it can make a big difference

Feel free to optimize that. I personally see no "magic mounting option sauce" that reduces the 2 hours of samba/nfs to anything near the 60-80 sec timings of the local filesystem. That magic option would have to deliver a speedup of roughly a factor of 100. Again, we are dropping caches in every pass to get the worst case.

* you list 50000 files not 500000, but the 'no_buckets' version lists all?

Yes, we saturate the filesystem with a "real-world-ish" 500.000 files. That is what I have on most of my systems as chunks in my working set after a few months. We run a buckets vs. no-buckets comparison. For the "read/stat files" tests we take 10% of those files, as in a restore operation.

Again, we are not interested in the disk speed, that is why the files contain only a simple integer. We are measuring the open() (and the internal fs seek operation) and stat() methods of the OS.

The find_all_files test is what the garbage collection method does. It does the equivalent of a "find /foo -type f" call.
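To make clear what "equivalent of find -type f" means, here is a minimal sketch of such a walk. It is not copied from the benchmark; `list_regular_files` is a made-up name. Every directory is listed exactly once, and symlinks are not followed, like find does by default.

```python
import os
from typing import Iterator


def list_regular_files(root: str) -> Iterator[str]:
    """Yield the path of every regular file below `root`."""
    stack = [root]
    while stack:
        current = stack.pop()
        # One scandir() call per directory, so no directory is listed twice.
        with os.scandir(current) as entries:
            for entry in entries:
                if entry.is_dir(follow_symlinks=False):
                    stack.append(entry.path)
                elif entry.is_file(follow_symlinks=False):
                    yield entry.path
```

On a remote filesystem each scandir() is at least one round trip per bucket, which is where the time goes.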

* each "chunk" you create only has 1-6 bytes (the index itself) which skews the benchmark enormously. please try again by using "real" chunks in the order of 128k - 16MiB (most often the chunks are between 1 and 4 MiB)

this is the biggest issue i have with this, because the benchmark does not produce any "real" metric with that. you probably benchmark more how many files you can open/read not how much throughput you get in a real pbs scenario

I totally agree that the chunk size is a relevant factor. That is why we tried to eliminate that factor by all means and to have as few read/write operations as possible. We are not interested in a speed competition of "what hardware is the most capable of linear block reads and writes". We only do a single read/write of about the size of a filesystem i-node.

That is done on purpose, as this is a filesystem benchmark and not a read/write throughput benchmark.

I totally agree one can do such tests and that they are beneficial. We are still convinced that nfs and samba are the worst filesystems you can pick, as you have to wait 2 hours to create 500.000 files with 4 bytes each. Just imagine we would create 500.000 x 4 MB files, that would take an eternity...

only two of the benchmarks have a relation to real world usage of the datastore:

* create random chunks, mostly behaves like a backup

* stat_file_by_id: similarly behaves like a garbage collect but not identically, since there are two passes there: a pass where we update the atime of the files from indices and one pass to check and delete chunks that are not necessary any more

Check

'read_file_content_by_id' is a suboptimal try to recreate a restore because you read the files in the exact same order as they were written, which can make a huge difference depending on disk type and filesystem

Fun fact

that is exactly what is happening and on purpose.

Files 1..500.000 are written (and files 1..50.000 are read), so we know exactly that the 50.000 files we read are the same 50.000 that are "connected" like in a real-world backup.

Python:

    # write
    for x in range(FILES_TO_WRITE):
        # ...
        filename = BASE_DIR + "/buckets/" + prefix + "/" + sha
        # ...
        f.write(str(x))

    # read
    for x in range(FILES_TO_READ):
        # ...
        filename = BASE_DIR + "/buckets/" + prefix + "/" + sha
        # ...
        bytes = f.read()

'find_all_files' is IMHO unnecessary since that is not an operation that is done anywhere in pbs (we don't just list chunks anywhere)

I am not sure about the pbs code - but you have some read_dir() calls:

https://github.com/search?q=repo:proxmox/proxmox-backup+read_dir&type=code

* stat_file_by_id: similarly behaves like a garbage collect but not identically, since there are two passes there: a pass where we update the atime of the files from indices and one pass to check and delete chunks that are not necessary any more

I totally agree. Updating the file's stat data (atime) and the unlink test are not implemented. That is on purpose. The required step is the stat() call, and as you can see, the numbers for this operation are reasonably close across the filesystems.

Best / Worst:

3s vs. 45s (nvme)

611s vs. 674s (usb sata)

10s vs. 183s (old m.2)

But what is worse are the hours you wasted just to get the 500.000 files onto the disk in the first place. What I expect: updating the stat data and unlinking will lead to a similar "hell no!" recommendation for nfs and samba, while sshfs will behave ok-ish. There will not be much difference between zfs and ext4.

Do you think it's worth adding that test?
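If it is worth adding, the test could look roughly like this sketch. It only follows the two passes as described in the quote (update atime, then check and delete) and is not taken from the pbs code; `mark_used`, `sweep_unused` and the `cutoff` parameter are made-up names.

```python
import os
import time
from typing import Iterable, List, Optional


def mark_used(paths: Iterable[str], now: Optional[float] = None) -> None:
    """Pass 1: set the atime of every still-referenced file, keep its mtime."""
    stamp = time.time() if now is None else now
    for path in paths:
        mtime = os.stat(path).st_mtime
        os.utime(path, (stamp, mtime))


def sweep_unused(all_paths: Iterable[str], cutoff: float) -> List[str]:
    """Pass 2: unlink every file whose atime is older than `cutoff`."""
    removed = []
    for path in all_paths:
        if os.stat(path).st_atime < cutoff:
            os.unlink(path)
            removed.append(path)
    return removed
```

That is one stat() plus one utime() per referenced file in the first pass and one stat() plus possibly one unlink() per file in the second, so on nfs/samba I would expect the same picture as in the tables above.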

'read_file_content_by_id' is a suboptimal try to recreate a restore because you read the files in the exact same order as they were written, which can make a huge difference depending on disk type and filesystem

As mentioned, that's on purpose.

Should I read this in inverse order?
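If the read order is the concern, it could be made a switch. A small sketch, where `read_order` and the mode names are made up and the fixed seed only keeps the shuffled run repeatable:

```python
import random
from typing import List


def read_order(count: int, mode: str = "written", seed: int = 0) -> List[int]:
    """Return the file ids 0..count-1 in the order they should be read."""
    ids = list(range(count))
    if mode == "written":
        return ids
    if mode == "reversed":
        return ids[::-1]
    if mode == "shuffled":
        # A private Random instance keeps the global random state untouched.
        random.Random(seed).shuffle(ids)
        return ids
    raise ValueError(f"unknown mode: {mode}")
```

The read loop would then iterate over `read_order(FILES_TO_READ, "shuffled")` instead of `range(FILES_TO_READ)`, still touching exactly the same files.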

there are some results that are probably not real and are masked by some cache (probably the drives): e.g. you get ~20000 IOPS on a QLC ssd, i very much doubt that is sustainable, i guess there is some SLC cache or similar going on (which skews your benchmark)

please don't use usb disks, the usb connection/adapter alone will introduce any number of caches and slowdowns that skew the benchmark

I used, on purpose, the worst hardware that I could find. From Big O notation we learned that hardware only changes a constant coefficient. It is very hard to change a result by several powers of 10, e.g. "this is 1000x faster".

Thanks for your rant on usb for any proxmox product

Yes the internal caches of hardware are an additional factor that you have to eliminate by the content size of the blocks.

Anyway

That is what makes this test optimal. For the localhost/localhost nfs, smb, sshfs... we eliminated the hardware factor, since we run on the same disk as the ext4. For benchmarking the filesystem it's quite accurate.

I hope that you are not under the impression that putting an nfs/samba server on top of a ramdisk would make it "fast".

I can do such a test. We will still see the 40 sec vs. 2 hours.

now for the conclusions (at least some thoughts on some of them):

* yes i agree, we should more prominently in the docs tell users to avoid remote and slow storages

* zfs has other benefits aside from "speed", actually it's the reverse, it's probably slower but has more features (like parity, redundancy, send/receive capability, etc.)

* SATA has nothing to do with performance, there are enterprise SATA SSDs as well

* there is already a 'benchmark' option in the pbs client, we could extend that to test other things besides chunk uploading of course

* i'm not convinced the number of buckets makes a big difference in the real world (because of the methodology points i raised above)

* i'm not sure sshfs is a good choice for remote storage, every time i use it i find it much slower than nfs/smb, also it may not support all filesystem features we need (it does not have strict POSIX semantics)

- ZFS might be faster with more disks/raid (I was too lazy to measure that). I couldn't find any "dramatic" gain over ext4

- We used SATA spinning rust. If you have the option to choose - use flash for the backups/restore and spinning rust for backups of backups

- the number of buckets (and how you create them) is a major issue on remote filesystems, the tests clearly indicate that

- sshfs is the only remote fs that the numbers support choosing. Do you really recommend waiting 2h for samba/nfs while sshfs is done in 5 minutes?

Can you please elaborate on what made you guys use buckets? That would be interesting. Here is what squid does:

Code:

    # cache_dir ufs /var/spool/squid XXXXX 16 256
    /var/spool/squid/00/00
    /var/spool/squid/00/BA
    ...
    /var/spool/squid/00/98
    /var/spool/squid/00/2B

You get 16 toplevel buckets each with 256 child buckets. So the user can decide what's "optimal".
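Applied to chunks, a user-configurable two-level layout could be sketched like this. This is not how squid itself assigns files to its directories, and it is not the pbs layout either; `bucket_path`, `l1` and `l2` are made-up names, and the sha256 of the name is used just to spread the files evenly.

```python
import hashlib
import os


def bucket_path(base_dir: str, name: bytes, l1: int = 16, l2: int = 256) -> str:
    """Map a name to base_dir/<L1>/<L2>/<sha256 hex digest>."""
    if l1 < 1 or l2 < 1:
        raise ValueError("l1 and l2 must be at least 1")
    digest = hashlib.sha256(name).hexdigest()
    # Use the first 32 bits of the digest to pick the two directory levels.
    number = int(digest[:8], 16)
    first = number % l1
    second = (number // l1) % l2
    return os.path.join(base_dir, f"{first:02X}", f"{second:02X}", digest)
```

With the defaults this gives 16 x 256 = 4096 leaf directories instead of 65536, and the user could tune both numbers to what the filesystem handles best.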