It looks like you may have missed my edit (extremely appreciative of your quick responses), but it seems to be working. I'm not sure why there were errors when I first attempted it.

Hey!

There is no need to add any records to the fstab. Storage.cfg should add the storage on OS boot.

As for why it doesn't happen, can you please check the /etc/lvm/lvm.conf file and let me know what value you have for `global { event_activation }`?
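One quick way to answer that is to look only at the top-level `global { ... }` section. The sketch below is a hypothetical helper that does this on the raw text of `lvm.conf`. It is deliberately simplified: it strips `#` comments, tracks braces, and only understands `key = value` lines, so it ignores profiles, `--config` overrides and one-line sections. Per the comments in `lvm.conf`, `1` means LVs are activated from device events, while `0` means a direct activation command runs at boot instead. On a real host, `lvmconfig --type full global/event_activation` is likely the more reliable check.

```python
import re
from typing import Optional

# Hypothetical helper: a simplified lvm.conf reader, not part of LVM itself.
SECTION_START = re.compile(r"^global\s*\{")
EVENT_KEY = re.compile(r"^event_activation\s*=\s*(\d+)")


def read_event_activation(conf_text: str) -> Optional[int]:
    """Return global/event_activation from lvm.conf-style text, or None if unset."""
    depth = 0          # current brace nesting level
    in_global = False  # True while inside the top-level "global { ... }" block
    for raw in conf_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if depth == 0 and SECTION_START.match(line):
            in_global = True
        depth += line.count("{") - line.count("}")
        if depth == 0:
            in_global = False  # we just left whatever section we were in
            continue
        match = EVENT_KEY.match(line)
        if in_global and depth == 1 and match:
            return int(match.group(1))
    return None


if __name__ == "__main__":
    sample = """
global {
    # event_activation = 1   <- commented out, ignored
    umask = 077
    event_activation = 0
}
"""
    print(read_event_activation(sample))  # 0
```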


Glad to hear!

Hey Vlad. It's me again.

I rebooted the host to add some SATA disks (I have not done any configuration whatsoever with them other than physical install) and now I am seeing...

```
kvm: -drive if=pflash,unit=1,format=raw,id=drive-efidisk0,size=131072,file=/dev/fusionvmdata/vm-100-disk-1: Could not open '/dev/fusionvmdata/vm-100-disk-1': No such file or directory
TASK ERROR: start failed: QEMU exited with code 1
```

```
root@pve:~# cat /etc/pve/storage.cfg
dir: local
	path /var/lib/vz
	content iso,vztmpl,backup

zfspool: local-zfs
	pool rpool/data
	content images,rootdir
	sparse 1

lvmthin: local-lvm
	thinpool fusionlv
	vgname fusionvmdata
	content images
```

```
root@pve:~# lvs
  LV                           VG           Attr         LSize Pool     Origin         Data%  Meta%  Move Log Cpy%Sync Convert
  fusionlv                     fusionvmdata twi---tz--   1.15t
  lvol0                        fusionvmdata -wi-------  80.00m
  snap_vm-100-disk-0_Snapshot1 fusionvmdata Vri---tz-k 500.00g fusionlv vm-100-disk-0
  snap_vm-100-disk-1_Snapshot1 fusionvmdata Vri---tz-k   4.00m fusionlv vm-100-disk-1
  vm-100-disk-0                fusionvmdata Vwi---tz-- 500.00g fusionlv
  vm-100-disk-1                fusionvmdata Vwi---tz--   4.00m fusionlv
  vm-100-state-Snapshot1       fusionvmdata Vwi---tz-- <16.50g fusionlv
  vm-101-disk-0                fusionvmdata Vwi---tz-- 250.00g fusionlv
  vm-101-disk-1                fusionvmdata Vwi---tz--   4.00m fusionlv
  vm-102-disk-0                fusionvmdata Vwi---tz-- 200.00g fusionlv
  vm-103-disk-0                fusionvmdata Vwi---tz-- 500.00g fusionlv
  vm-103-disk-1                fusionvmdata Vwi---tz--   4.00m fusionlv
  oczlv                        ocz          twi-a-tz-- 220.00g                          0.00  10.42
root@pve:~# vgs
  VG           #PV #LV #SN Attr      VSize  VFree
  fusionvmdata   1  12   0 wz--n-   <1.18t 27.30g
  ocz            1   1   0 wz--n- <223.57g <3.35g
root@pve:~# pvs
  PV         VG           Fmt  Attr    PSize  PFree
  /dev/fioa1 fusionvmdata lvm2 a--    <1.18t 27.30g
  /dev/sdc1  ocz          lvm2 a--  <223.57g <3.35g
```

```
root@pve:/var/log# fio-status -a
Found 1 ioMemory device in this system
Driver version: 3.2.16 build 1731
Adapter: Single Controller Adapter
Fusion-io ioScale 1.30TB, Product Number:F11-001-1T30-CS-0001, SN:1228D0162, FIO SN:1228D0162
ioDrive2 Adapter Controller, PN:PA004341001
External Power: NOT connected
PCIe Bus voltage: avg 11.93V
PCIe Bus current: avg 0.78A
PCIe Bus power: avg 9.31W
PCIe Power limit threshold: 75.00W
PCIe slot available power: unavailable
PCIe negotiated link: 4 lanes at 5.0 Gt/sec each, 2000.00 MBytes/sec total
Connected ioMemory modules:
fct0: Product Number:F11-001-1T30-CS-0001, SN:1228D0162

fct0 Attached
ioDrive2 Adapter Controller, Product Number:F11-001-1T30-CS-0001, SN:1228D0162
ioDrive2 Adapter Controller, PN:PA004341001
SMP(AVR) Versions: App Version: 1.0.35.0, Boot Version: 0.0.9.1
Located in slot 0 Center of ioDrive2 Adapter Controller SN:1228D0162
Powerloss protection: protected
PCI:06:00.0, Slot Number:7
Vendor:1aed, Device:2001, Sub vendor:1aed, Sub device:2001
Firmware v7.1.17, rev 116786 Public
1294.00 GBytes device size
Format: v500, 2527343750 sectors of 512 bytes
PCIe slot available power: unavailable
PCIe negotiated link: 4 lanes at 5.0 Gt/sec each, 2000.00 MBytes/sec total
Internal temperature: 58.08 degC, max 63.49 degC
Internal voltage: avg 1.01V, max 1.02V
Aux voltage: avg 2.48V, max 2.48V
Reserve space status: Healthy; Reserves: 100.00%, warn at 10.00%
Active media: 100.00%
Rated PBW: 10.00 PB, 81.41% remaining
Lifetime data volumes:
Physical bytes written: 1,858,797,377,056,944
Physical bytes read : 2,865,698,604,603,560
RAM usage:
Current: 489,277,120 bytes
Peak : 489,277,120 bytes
Contained VSUs:
fioa: ID:0, UUID:ee25746a-4202-468d-a041-c3073e593c50
fioa State: Online, Type: block device
ID:0, UUID:ee25746a-4202-468d-a041-c3073e593c50
1294.00 GBytes device size
Format: 2527343750 sectors of 512 bytes
```

Not sure what I've done wrong. Any ideas?
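Since `storage.cfg` is what points `local-lvm` at the `fusionvmdata` VG and the `fusionlv` thin pool, one sanity check is to pull those names out and compare them with the `lvs` listing, where `fusionlv` shows `twi---tz--`, i.e. not active. A minimal sketch, assuming the layout shown in the post above (an unindented `type: id` header followed by indented `key value` lines). `parse_storage_cfg` is a hypothetical helper, not a Proxmox API; `pvesm status` is the supported way to see how Proxmox itself reads the file.

```python
from typing import Dict, List, Tuple

# Hypothetical helper, not a Proxmox API: parses the storage.cfg layout
# ("type: id" header lines followed by indented "key value" option lines).
Entry = Tuple[str, str, Dict[str, str]]


def parse_storage_cfg(text: str) -> List[Entry]:
    """Split storage.cfg-style text into (type, storage_id, options) tuples."""
    entries: List[Entry] = []
    for raw in text.splitlines():
        if not raw.strip():
            continue  # blank lines just separate sections
        if not raw[0].isspace():
            # Section header, e.g. "lvmthin: local-lvm"
            stype, _, sid = raw.partition(":")
            entries.append((stype.strip(), sid.strip(), {}))
        elif entries:
            # Indented option line, e.g. "vgname fusionvmdata"
            key, _, value = raw.strip().partition(" ")
            entries[-1][2][key] = value.strip()
    return entries


SAMPLE = """dir: local
\tpath /var/lib/vz
\tcontent iso,vztmpl,backup

lvmthin: local-lvm
\tthinpool fusionlv
\tvgname fusionvmdata
\tcontent images
"""

if __name__ == "__main__":
    for stype, sid, opts in parse_storage_cfg(SAMPLE):
        if stype == "lvmthin":
            # The thin pool that must be active before local-lvm guests can start
            print(f"{sid}: {opts['vgname']}/{opts['thinpool']}")
```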

Hey!

The obvious question: have you tried rebooting again? Does the problem persist?

I once had a similar problem, long ago, because the volume was not being activated on boot.

What do you see when you run `lvscan -v`?

In my case the cause was related to the `global/event_activation` setting in `lvm.conf`. Last time you sent me `global/activation` instead of `global/event_activation`. You can also check it to make sure it's set to 0 (zero).
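`lvscan` prints one line per LV starting with `ACTIVE` or `inactive`, so volumes that were not activated at boot stand out immediately. An inactive LV has no `/dev/<vg>/<lv>` node, which matches the `No such file or directory` error above. A minimal sketch that filters those lines out of the text output; `inactive_lvs` is a hypothetical name, and the sample lines are illustrative, built from the VG/LV names in this thread rather than copied from a real run.

```python
import re
from typing import List

# Matches lvscan lines such as:
#   inactive          '/dev/fusionvmdata/vm-100-disk-1' [4.00 MiB] inherit
LVSCAN_LINE = re.compile(r"^\s*(ACTIVE|inactive)\s+'([^']+)'")


def inactive_lvs(lvscan_output: str) -> List[str]:
    """Return the device paths that lvscan reports as inactive."""
    paths = []
    for line in lvscan_output.splitlines():
        match = LVSCAN_LINE.match(line)
        if match and match.group(1) == "inactive":
            paths.append(match.group(2))
    return paths  # lines in any other format (e.g. -v chatter) are skipped


# Illustrative sample built from the names in this thread, not captured output.
SAMPLE = """\
  inactive          '/dev/fusionvmdata/fusionlv' [1.15 TiB] inherit
  inactive          '/dev/fusionvmdata/vm-100-disk-1' [4.00 MiB] inherit
  ACTIVE            '/dev/ocz/oczlv' [220.00 GiB] inherit
"""

if __name__ == "__main__":
    for path in inactive_lvs(SAMPLE):
        print("not activated:", path)
```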

Vlad, I'm gonna have to get you real drunk.. global/event_activation=0 fixed it.


Glad it worked out for you!

Hi, Vlad

PVE 6.4-6. No ideas.

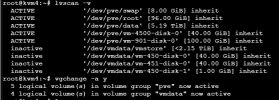

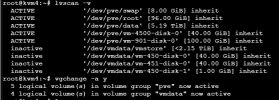

global/event_activation=1:

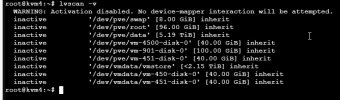

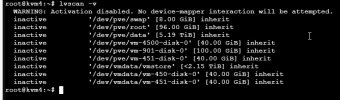

global/event_activation=0:


```
root@kvm4:~# lvs
  LV             VG     Attr         LSize Pool    Origin  Data%  Meta%  Move Log Cpy%Sync Convert
  data           pve    twi-aotz--   5.19t                  0.26   0.27
  root           pve    -wi-ao----  96.00g
  swap           pve    -wi-ao----   8.00g
  vm-450-disk-0  pve    Vwi-aotz--  40.00g data             5.51
  vm-4500-disk-0 pve    Vwi-a-tz--  40.00g data             2.32
  vm-451-disk-0  pve    Vwi-a-tz--  40.00g data             4.89
  vm-901-disk-0  pve    Vwi-a-tz-- 100.00g data             8.71
  vm-450-disk-0  vmdata Vwi---tz--  40.00g vmstore
  vm-451-disk-0  vmdata Vwi---tz--  40.00g vmstore
  vmstore        vmdata twi---tz--  <2.15t
root@kvm4:~# pvs
  PV         VG     Fmt  Attr  PSize  PFree
  /dev/fioa1 vmdata lvm2 a--  <1.08t <4.34g
  /dev/fiob1 vmdata lvm2 a--  <1.08t      0
  /dev/sda3  pve    lvm2 a--   5.32t      0
root@kvm4:~#
```

Hey!


A couple of obvious questions:

1) What is the generation of the cards? Am I correct to assume it's the 2nd?
2) I see there are 2 cards installed. Have you tested with only one added?
3) Has this setup worked in the past and stopped after the recent kernel update?
4) Is DKMS set up correctly? What is the output of `ls /var/lib/initramfs-tools | sudo xargs -n1 /usr/lib/dkms/dkms_autoinstaller start`?
5) After setting `global/event_activation=0`, have you rebooted the server?
6) Have you attempted a clean installation of the drivers?
7) Are the temperatures on the drives OK-ish? What is the output of `fio-status -a`?

Just to clarify: I have a couple of servers running the latest kernel with ioDrive2 and ioScale2 cards and these drivers, so it should eventually work in your case as well.
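The pattern in the kvm4 `lvs` listing can also be read straight from the `Attr` column. Its fifth character is the activation state (`a` = active), so the `pve` volumes (`twi-aotz--`, `Vwi-a-tz--`) are active while every `vmdata` volume (`Vwi---tz--`, `twi---tz--`) is not. The tenth character is `k` for LVs flagged to be skipped on activation, as on the thin snapshots in the first `lvs` listing in this thread. A minimal sketch, assuming the input comes from `lvs --noheadings -o vg_name,lv_name,lv_attr`; `lv_states` is a hypothetical helper name.

```python
from typing import List, Tuple

LvState = Tuple[str, bool, bool]  # ("vg/lv", is_active, skip_activation)


def lv_states(lvs_output: str) -> List[LvState]:
    """Parse `lvs --noheadings -o vg_name,lv_name,lv_attr` output.

    lv_attr character 5 (index 4) is the state: 'a' means active.
    lv_attr character 10 (index 9) is 'k' when activation is skipped.
    """
    states: List[LvState] = []
    for line in lvs_output.splitlines():
        fields = line.split()
        if len(fields) != 3:
            continue  # ignore blank or unexpected lines
        vg, lv, attr = fields
        active = len(attr) > 4 and attr[4] == "a"
        skip = len(attr) > 9 and attr[9] == "k"
        states.append((f"{vg}/{lv}", active, skip))
    return states


# Rows from the kvm4 listing, reduced to the three fields used here.
SAMPLE = """\
  pve    data          twi-aotz--
  pve    vm-451-disk-0 Vwi-a-tz--
  vmdata vm-450-disk-0 Vwi---tz--
  vmdata vmstore       twi---tz--
"""

if __name__ == "__main__":
    for name, active, skip in lv_states(SAMPLE):
        if not active:
            print(f"{name} is inactive" + (" (activation skipped)" if skip else ""))
```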

Hi Vlad!

yes - DUO

```
root@kvm4:~# fio-status
Found 2 ioMemory devices in this system with 1 ioDrive Duo
Driver version: 3.2.16 build 1731
Adapter: Dual Controller Adapter
HP 2410GB MLC PCIe ioDrive2 Duo for ProLiant Servers, Product Number:673648-B21, SN:3UN322F00U
External Power: NOT connected
PCIe Power limit threshold: 24.75W
Connected ioMemory modules:
fct0: Product Number:673648-B21, SN:1321D07F1-1121
fct1: Product Number:673648-B21, SN:1321D07F1-1111
```

One Duo card (2 × 1.2 TB) in the LV:

```
root@kvm4:~# pvs
  PV         VG     Fmt  Attr  PSize  PFree
  /dev/fioa1 vmdata lvm2 a--  <1.08t <4.34g
  /dev/fiob1 vmdata lvm2 a--  <1.08t      0
  /dev/sda3  pve    lvm2 a--   5.32t      0
root@kvm4:~#
  --- Logical volume ---
  LV Name                vmstore
  VG Name                vmdata
  LV UUID                uOreAM-PeMi-h4Ei-ge8Y-41x3-tcWC-QpCsfu
  LV Write Access        read/write
  LV Creation host, time kvm4, 2021-05-11 06:41:16 +0400
  LV Pool metadata       vmstore_tmeta
  LV Pool data           vmstore_tdata
  LV Status              NOT available
  LV Size                <2.15 TiB
  Current LE             563200
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
```


New Proxmox installation, 6.4-4.

```
root@kvm4:~# ls /var/lib/initramfs-tools | sudo xargs -n1 /usr/lib/dkms/dkms_autoinstaller start
[ ok ] dkms: running auto installation service for kernel 5.4.106-1-pve:.
[ ok ] dkms: running auto installation service for kernel 5.4.114-1-pve:.
[....] dkms: running auto installation service for kernel 5.4.73-1-pve:Error! Your kernel headers for kernel 5.4.73-1-pve cannot be found.
Please install the linux-headers-5.4.73-1-pve package,
or use the --kernelsourcedir option to tell DKMS where it's located. ok
```

hmm..


Yes, and all LVs are inactive.

yes

```
Found 2 ioMemory devices in this system with 1 ioDrive Duo
Driver version: 3.2.16 build 1731
Adapter: Dual Controller Adapter
HP 2410GB MLC PCIe ioDrive2 Duo for ProLiant Servers, Product Number:673648-B21, SN:3UN322F00U
ioDrive2 Adapter Controller, PN:674328-001
SMP(AVR) Versions: App Version: 1.0.33.0, Boot Version: 0.0.8.1
External Power: NOT connected
PCIe Bus voltage: avg 12.02V
PCIe Bus current: avg 1.75A
PCIe Bus power: avg 21.20W
PCIe Power limit threshold: 24.75W
PCIe slot available power: unavailable
PCIe negotiated link: 8 lanes at 5.0 Gt/sec each, 4000.00 MBytes/sec total
Connected ioMemory modules:
fct0: Product Number:673648-B21, SN:1321D07F1-1121
fct1: Product Number:673648-B21, SN:1321D07F1-1111

fct0 Attached
SN:1321D07F1-1121
SMP(AVR) Versions: App Version: 1.0.29.0, Boot Version: 0.0.9.1
Located in slot 1 Lower of ioDrive2 Adapter Controller SN:1321D07F1
Powerloss protection: protected
Last Power Monitor Incident: 24187 sec
PCI:0b:00.0, Slot Number:1
Vendor:1aed, Device:2001, Sub vendor:1590, Sub device:70
Firmware v7.1.17, rev 116786 Public
1205.00 GBytes device size
Format: v500, 2353515625 sectors of 512 bytes
PCIe slot available power: 25.00W
PCIe negotiated link: 4 lanes at 5.0 Gt/sec each, 2000.00 MBytes/sec total
Internal temperature: 49.22 degC, max 49.71 degC
Internal voltage: avg 1.02V, max 1.02V
Aux voltage: avg 2.48V, max 2.49V
Reserve space status: Healthy; Reserves: 100.00%, warn at 10.00%
Active media: 100.00%
Rated PBW: 17.00 PB, 98.91% remaining
Lifetime data volumes:
Physical bytes written: 184,980,444,633,752
Physical bytes read : 106,574,911,311,960
RAM usage:
Current: 389,595,200 bytes
Peak : 389,595,200 bytes
Contained VSUs:
fioa: ID:0, UUID:224c2d16-8fb9-442e-9e1d-db313bb4804f
fioa State: Online, Type: block device
ID:0, UUID:224c2d16-8fb9-442e-9e1d-db313bb4804f
1205.00 GBytes device size
Format: 2353515625 sectors of 512 bytes

fct1 Attached
SN:1321D07F1-1111
SMP(AVR) Versions: App Version: 1.0.29.0, Boot Version: 0.0.9.1
Located in slot 0 Upper of ioDrive2 Adapter Controller SN:1321D07F1
Powerloss protection: protected
Last Power Monitor Incident: 24186 sec
PCI:0c:00.0, Slot Number:1
Vendor:1aed, Device:2001, Sub vendor:1590, Sub device:70
Firmware v7.1.17, rev 116786 Public
1205.00 GBytes device size
Format: v500, 2353515625 sectors of 512 bytes
PCIe slot available power: 25.00W
PCIe negotiated link: 4 lanes at 5.0 Gt/sec each, 2000.00 MBytes/sec total
Internal temperature: 48.23 degC, max 48.72 degC
Internal voltage: avg 1.01V, max 1.02V
Aux voltage: avg 2.48V, max 2.49V
Reserve space status: Healthy; Reserves: 100.00%, warn at 10.00%
Active media: 100.00%
Rated PBW: 17.00 PB, 98.91% remaining
Lifetime data volumes:
Physical bytes written: 185,034,828,369,280
Physical bytes read : 106,209,206,414,064
RAM usage:
Current: 411,173,120 bytes
Peak : 411,173,120 bytes
Contained VSUs:
fiob: ID:0, UUID:8f16d50a-6520-4260-bff9-82c73bff16a3
fiob State: Online, Type: block device
ID:0, UUID:8f16d50a-6520-4260-bff9-82c73bff16a3
1205.00 GBytes device size
Format: 2353515625 sectors of 512 bytes
```
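On the health side, the `Rated PBW ... % remaining` lines are consistent with plain arithmetic on `Physical bytes written`. For example, fct0 has written about 0.185 PB of its 17 PB rating, roughly 1.09%, which leaves 98.91%. A minimal sketch of that calculation, assuming decimal petabytes (10^15 bytes); the function name is hypothetical, and the idea that fio-status derives the figure this way rests only on the numbers in this thread matching.

```python
def pbw_remaining_percent(bytes_written: int, rated_pbw: float) -> float:
    """Percent of rated write endurance left.

    Assumes decimal petabytes (1 PB = 10**15 bytes) and that "remaining"
    is simply 100% minus the share of the rating already written.
    """
    used_fraction = bytes_written / (rated_pbw * 10**15)
    return round(100 * (1 - used_fraction), 2)


if __name__ == "__main__":
    # "Physical bytes written" and "Rated PBW" values from the dumps in this thread
    print(pbw_remaining_percent(184_980_444_633_752, 17.0))    # Duo fct0 -> 98.91
    print(pbw_remaining_percent(185_034_828_369_280, 17.0))    # Duo fct1 -> 98.91
    print(pbw_remaining_percent(1_858_797_377_056_944, 10.0))  # ioScale 1.30TB -> 81.41
```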


Interesting. Am I correct to assume that the driver loads the drive every time and it's only the LVs that are not activated? What kind of storage are you using? Is it LVM-thin? I assume we're in the same timezone, so drop me a message and I can connect remotely and have a look.


yes, exactly.

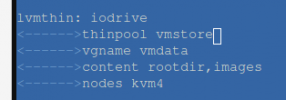

LVM-thin; the drive was added as described here: iodrive lvm.

Storage.cfg:

`vgchange -a y vmdata` solves the problem until the next reboot.

OK, I'll drop you a message when I can.

The driver reports that my ioScale card has a hardware problem. The driver is in minimal mode...

Not sure if I got an eBay dud or if something else is wrong?

```
Found 1 ioMemory device in this system
Driver version: 3.2.16 build 1731
Adapter: ioMono
Fusion-io 410GB ioScale2, Product Number:F11-003-410G-CS-0001, SN:1312G0379, FIO SN:1312G0379
ioDrive2 Adapter Controller, PN:PA005004005
External Power: NOT connected
PCIe Power limit threshold: 24.75W
PCIe slot available power: 75.00W
PCIe negotiated link: 4 lanes at 5.0 Gt/sec each, 2000.00 MBytes/sec total
Connected ioMemory modules:
fct0: Product Number:F11-003-410G-CS-0001, SN:1312G0379

fct0 Status unknown: Driver is in MINIMAL MODE:
Device has a hardware failure
ioDrive2 Adapter Controller, Product Number:F11-003-410G-CS-0001, SN:1312G0379
!! ---> There are active errors or warnings on this device! Read below for details.
ioDrive2 Adapter Controller, PN:PA005004005
SMP(AVR) Versions: App Version: 1.0.13.0, Boot Version: 1.0.4.1
Powerloss protection: not available
PCI:02:00.0, Slot Number:17
Vendor:1aed, Device:2001, Sub vendor:1aed, Sub device:2001
Firmware v7.1.15, rev 110356 Public
Geometry and capacity information not available.
Format: not low-level formatted
PCIe slot available power: 75.00W
PCIe negotiated link: 4 lanes at 5.0 Gt/sec each, 2000.00 MBytes/sec total
Internal temperature: 56.11 degC, max 56.60 degC
Internal voltage: avg 1.01V, max 1.01V
Aux voltage: avg 2.48V, max 2.48V
Rated PBW: 2.00 PB
Lifetime data volumes:
Physical bytes written: 0
Physical bytes read : 0
RAM usage:
Current: 0 bytes
Peak : 0 bytes

ACTIVE WARNINGS:
The ioMemory is currently running in a minimal state.
```


Looks like it's a dead card. It looks very similar to my dead ioDrive2. It may be that it's just not formatted, but I doubt it. Sorry, mate...
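In a dump like the one above, the line printed right after `Driver is in MINIMAL MODE:` is the detail that matters. `Device has a hardware failure` points at the card itself, whereas other reasons, such as firmware that is incompatible with the installed driver, describe a software-side mismatch. A minimal sketch that collects that reason for each `fctN` device; `minimal_mode_reasons` is a hypothetical name, and the code relies only on the line layout seen in this thread's dumps, which is an assumption rather than a documented fio-status format.

```python
import re
from typing import Dict

# Assumes the line layout seen in the fio-status dumps in this thread.
MINIMAL_MODE = re.compile(r"^(fct\d+)\b.*Driver is in MINIMAL MODE:")


def minimal_mode_reasons(fio_status: str) -> Dict[str, str]:
    """Map each fctN device in MINIMAL MODE to the reason on the following line."""
    lines = [ln.strip() for ln in fio_status.splitlines() if ln.strip()]
    reasons: Dict[str, str] = {}
    for i, line in enumerate(lines):
        match = MINIMAL_MODE.match(line)
        if match and i + 1 < len(lines):
            reasons[match.group(1)] = lines[i + 1]
    return reasons


# Excerpt from the ioScale2 dump above.
SAMPLE = """\
Connected ioMemory modules:
fct0: Product Number:F11-003-410G-CS-0001, SN:1312G0379

fct0 Status unknown: Driver is in MINIMAL MODE:
Device has a hardware failure
"""

if __name__ == "__main__":
    for dev, reason in minimal_mode_reasons(SAMPLE).items():
        print(f"{dev}: {reason}")
```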

Hello all,


I am trying to get a Fusion-io ioXtreme 80GB working with Proxmox 7 and I am not getting very far. I have followed the instructions on the first page to install the driver and firmware, and I get the following:

```
Found 1 ioMemory device in this system
Driver version: 3.2.16 build 1731
Adapter: ioMono
Fusion-io ioXtreme 80GB, Product Number:FS4-002-081-CS, SN:16065, FIO SN:16065
ioXtreme 80GB, PN:00188000101, Alt PN:FS4-0S2-081-CS
External Power: NOT connected
PCIe Power limit threshold: Disabled
PCIe slot available power: 26.00W
PCIe negotiated link: 4 lanes at 2.5 Gt/sec each, 1000.00 MBytes/sec total
Connected ioMemory modules:
fct0: Product Number:FS4-002-081-CS, SN:16065

fct0 Status unknown: Driver is in MINIMAL MODE:
The firmware on this device is not compatible with the currently installed version of the driver
ioXtreme 80GB, Product Number:FS4-002-081-CS, SN:16065
!! ---> There are active errors or warnings on this device! Read below for details.
ioXtreme 80GB, PN:00188000101, Alt PN:FS4-0S2-081-CS
Powerloss protection: not available
PCI:01:00.0
Vendor:1aed, Device:1006, Sub vendor:1aed, Sub device:1010
Firmware v5.0.7, rev 101971 Public
Geometry and capacity information not available.
Format: not low-level formatted
PCIe slot available power: 26.00W
PCIe negotiated link: 4 lanes at 2.5 Gt/sec each, 1000.00 MBytes/sec total
Internal temperature: 53.65 degC, max 54.14 degC
Internal voltage: avg 1.01V, max 2.38V
Aux voltage: avg 2.49V, max 2.38V
Lifetime data volumes:
Physical bytes written: 0
Physical bytes read : 0
RAM usage:
Current: 0 bytes
Peak : 0 bytes

ACTIVE WARNINGS:
The ioMemory is currently running in a minimal state.
```

From what I have read, I guess there is an incompatibility between the firmware version (v5.0.7) and the driver version (3.2.16), but I am not sure how to align them.

I have also tried to install the driver from https://github.com/RemixVSL/iomemory-vsl but made no difference (as at first, I thought the driver was the problem).

I then tried to update the firmware version but I am getting nowhere, probably because I do not know exactly how to do it. I ended up going to the WD website to download the driver and firmware but for ioXtream, I only find version 2.3.x which I do not manage to successfully compile with my 5.11 kernel libs. In the github documentation, it does say that ioXtream 80Gb can work with driver version 3.

I am out of ideas how to get this card working and if it is compatible.

Anyone has any ideas?

Thanks

It may be the case that you're using a first-generation ioDrive card.

Please check this list: https://docs.google.com/spreadsheet...CfswQxHAhVee3rW-04baqKg1qN2fp7wEzuFm6/pubhtml
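To compare a card against that list, it may help to confirm which PCI IDs the host actually sees. The fio-status output in this thread reports vendor 1aed with device 1006 (ioXtreme), 1005 (ioDIMM3) and 2001 (ioDrive2), and `lspci -nn -d 1aed:` shows the same vendor:device pairs regardless of whether the driver is in minimal mode. The sketch below (the function name is hypothetical) only reduces that `lspci -nn` output to bus address and ID pair.

```shell
#!/bin/sh
# Hypothetical helper: reduce `lspci -nn` output (on stdin) to
# "<bus address> <vendor:device>" for Fusion-io devices (vendor ID 1aed).
fusionio_ids() {
    awk '
        # match() sets RSTART/RLENGTH to the bracketed "[1aed:xxxx]" pair.
        match($0, /\[1aed:[0-9a-f]+\]/) {
            print $1, substr($0, RSTART + 1, RLENGTH - 2)
        }
    '
}

# On the host:
#   lspci -nn -d 1aed: | fusionio_ids
```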

@Vladimir Bulgaru Thanks for your reply. I did check that doc and I do see my card:

| Fusion-io | ioXtreme | 80 | FS4-002-081-CS | 188000101 | Fusion-io ioXtreme 80GB | Single Adapter | 2.0 x4 | 3 |

Any ideas on what I could do to get it working?

Thanks

On a test server today I tried to get my Fusion-io ioDrive2 1.2TB card working. I tried Proxmox 6.1 and was able to get the script to find the headers by commenting out this list:

/etc/apt/sources.list.d/pve-enterprise.list

and changing the apt sources:

nano /etc/apt/sources.list

adding this:

deb http://download.proxmox.com/debian buster pve-no-subscription

This installed the card and showed stats like temperature, firmware version, etc.

For the next test, I tried the same method to get the card working with Proxmox 7.1, commenting out this list:

/etc/apt/sources.list.d/pve-enterprise.list

and changing the apt sources:

nano /etc/apt/sources.list

adding this:

deb http://download.proxmox.com/debian bullseye pve-no-subscription

This time I ran into some trouble:

Code:

Kernel preparation unnecessary for this kernel. Skipping...
Building module:
cleaning build area...
'make' DKMS_KERNEL_VERSION=5.13.19-2-pve...(bad exit status: 2)
Error! Bad return status for module build on kernel: 5.13.19-2-pve (x86_64)
Consult /var/lib/dkms/iomemory-vsl/3.2.16/build/make.log for more information.
root@pve:/home/temp/iomemory-vsl#

I consulted /var/lib/dkms/iomemory-vsl/3.2.16/build/make.log:

Code:

O_DRIVER_NAME=iomemory-vsl \
FUSION_DRIVER_DIR=/var/lib/dkms/iomemory-vsl/3.2.16/build \
M=/var/lib/dkms/iomemory-vsl/3.2.16/build \
EXTRA_CFLAGS+="-I/var/lib/dkms/iomemory-vsl/3.2.16/build/include -DBUILDING_MODULE -DLINUX_IO_SCHED -Wall -Werror" \
INSTALL_MOD_DIR=extra/fio \
INSTALL_MOD_PATH= \
KFIO_LIB=kfio/x86_64_cc102_libkfio.o_shipped \
modules
make[1]: Entering directory '/usr/src/linux-headers-5.13.19-2-pve'
printf '#include "linux/module.h"\nMODULE_LICENSE("GPL");\n' >/var/lib/dkms/iomemory-vsl/3.2.16/build/license.c
CC [M] /var/lib/dkms/iomemory-vsl/3.2.16/build/main.o
CC [M] /var/lib/dkms/iomemory-vsl/3.2.16/build/pci.o
CC [M] /var/lib/dkms/iomemory-vsl/3.2.16/build/sysrq.o
CC [M] /var/lib/dkms/iomemory-vsl/3.2.16/build/driver_init.o
CC [M] /var/lib/dkms/iomemory-vsl/3.2.16/build/kfio.o
CC [M] /var/lib/dkms/iomemory-vsl/3.2.16/build/errno.o
CC [M] /var/lib/dkms/iomemory-vsl/3.2.16/build/state.o
CC [M] /var/lib/dkms/iomemory-vsl/3.2.16/build/kcache.o
CC [M] /var/lib/dkms/iomemory-vsl/3.2.16/build/kfile.o
CC [M] /var/lib/dkms/iomemory-vsl/3.2.16/build/kmem.o
CC [M] /var/lib/dkms/iomemory-vsl/3.2.16/build/kfio_common.o
CC [M] /var/lib/dkms/iomemory-vsl/3.2.16/build/kcpu.o
/var/lib/dkms/iomemory-vsl/3.2.16/build/pci.c:615:55: error: ‘enum pci_channel_state’ declared inside parameter list will not be visible outside of this definition or declaration [->
615 | iodrive_pci_error_detected (struct pci_dev *dev, enum pci_channel_state error)
| ^~~~~~~~~~~~~~~~~
/var/lib/dkms/iomemory-vsl/3.2.16/build/pci.c:615:73: error: parameter 2 (‘error’) has incomplete type
615 | iodrive_pci_error_detected (struct pci_dev *dev, enum pci_channel_state error)
| ~~~~~~~~~~~~~~~~~~~~~~~^~~~~
/var/lib/dkms/iomemory-vsl/3.2.16/build/pci.c:615:1: error: function declaration isn’t a prototype [-Werror=strict-prototypes]
615 | iodrive_pci_error_detected (struct pci_dev *dev, enum pci_channel_state error)
| ^~~~~~~~~~~~~~~~~~~~~~~~~~
cc1: all warnings being treated as errors
make[2]: *** [scripts/Makefile.build:281: /var/lib/dkms/iomemory-vsl/3.2.16/build/pci.o] Error 1
make[2]: *** Waiting for unfinished jobs....
/var/lib/dkms/iomemory-vsl/3.2.16/build/kmem.c: In function ‘kfio_get_user_pages’:
/var/lib/dkms/iomemory-vsl/3.2.16/build/kmem.c:203:29: error: ‘struct mm_struct’ has no member named ‘mmap_sem’; did you mean ‘mmap_base’?
203 | down_read(&current->mm->mmap_sem);
| ^~~~~~~~
| mmap_base
/var/lib/dkms/iomemory-vsl/3.2.16/build/kmem.c:205:27: error: ‘struct mm_struct’ has no member named ‘mmap_sem’; did you mean ‘mmap_base’?
205 | up_read(&current->mm->mmap_sem);
| ^~~~~~~~
| mmap_base
make[2]: *** [scripts/Makefile.build:281: /var/lib/dkms/iomemory-vsl/3.2.16/build/kmem.o] Error 1
make[1]: *** [Makefile:1879: /var/lib/dkms/iomemory-vsl/3.2.16/build] Error 2
make[1]: Leaving directory '/usr/src/linux-headers-5.13.19-2-pve'
make: *** [Makefile:132: modules] Error 2

I'm not really sure what to do with this one. I wanted to say thanks to Vlad for writing that script; without it, this would be quite difficult.
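The repository change used in these tests (comment out pve-enterprise.list, add a pve-no-subscription line to /etc/apt/sources.list) could be scripted roughly as follows. This is a sketch, not the install script the thread refers to; the function name is hypothetical, and the suite has to match the release (buster for the Proxmox 6.1 test, bullseye for 7.1, as in the post). Run `apt update` afterwards.

```shell
#!/bin/sh
# Hypothetical helper: switch from the enterprise repo to pve-no-subscription.
# $1 = enterprise list file, $2 = sources.list, $3 = Debian suite (e.g. bullseye)
use_no_subscription_repo() {
    enterprise_list=$1
    sources_list=$2
    suite=$3
    repo_line="deb http://download.proxmox.com/debian $suite pve-no-subscription"

    # Comment out every line that is not already a comment (GNU sed -i).
    if [ -f "$enterprise_list" ]; then
        sed -i 's/^\([^#]\)/# \1/' "$enterprise_list"
    fi

    # Append the no-subscription line only if it is not already present.
    grep -qxF "$repo_line" "$sources_list" 2>/dev/null ||
        printf '%s\n' "$repo_line" >> "$sources_list"
}

# As root on a Proxmox 7.x host:
#   use_no_subscription_repo /etc/apt/sources.list.d/pve-enterprise.list \
#       /etc/apt/sources.list bullseye
```

Calling it twice is harmless: already-commented lines are skipped and the repo line is not duplicated.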

My test with Proxmox 7.1 was invalid because I used the wrong version of the script. So, with the correct version of the script, I tried again to get my ioDrive2 1.2TB working with Proxmox 7.1.

Commenting out this list:

/etc/apt/sources.list.d/pve-enterprise.list

adding a line to the apt sources:

nano /etc/apt/sources.list

deb http://download.proxmox.com/debian bullseye pve-no-subscription

This time it ran like a charm!!!

Code:

fio-status -a
Found 1 ioMemory device in this system
Driver version: 3.2.16 build 1731

Adapter: Single Controller Adapter
Fusion-io ioDrive2 1.205TB, Product Number:F00-001-1T20-CS-0001, SN:1213D1800, FIO SN:1213D1800
ioDrive2 Adapter Controller, PN A004137009
External Power: NOT connected
PCIe Bus voltage: avg 12.05V
PCIe Bus current: avg 0.70A
PCIe Bus power: avg 8.52W
PCIe Power limit threshold: 24.75W
PCIe slot available power: 25.00W
PCIe negotiated link: 4 lanes at 5.0 Gt/sec each, 2000.00 MBytes/sec total
Connected ioMemory modules:
fct0: Product Number:F00-001-1T20-CS-0001, SN:1213D1800

fct0 Attached
ioDrive2 Adapter Controller, Product Number:F00-001-1T20-CS-0001, SN:XXXXXXXX
ioDrive2 Adapter Controller, PN A004137009
SMP(AVR) Versions: App Version: 1.0.35.0, Boot Version: 0.0.9.1
Located in slot 0 Center of ioDrive2 Adapter Controller SN:XXXXXXXXX
Powerloss protection: protected
PCI:04:00.0, Slot Number:5
Vendor:1aed, Device:2001, Sub vendor:1aed, Sub device:2001
Firmware v7.1.17, rev 116786 Public
1205.00 GBytes device size
Format: v500, 2353515625 sectors of 512 bytes
PCIe slot available power: 25.00W
PCIe negotiated link: 4 lanes at 5.0 Gt/sec each, 2000.00 MBytes/sec total
Internal temperature: 46.76 degC, max 47.25 degC
Internal voltage: avg 1.02V, max 1.02V
Aux voltage: avg 2.48V, max 2.48V
Reserve space status: Healthy; Reserves: 100.00%, warn at 10.00%
Active media: 100.00%
Rated PBW: 17.00 PB, 100.00% remaining
Lifetime data volumes:
Physical bytes written: 42,363,256,688
Physical bytes read : 193,713,431,808
RAM usage:
Current: 389,595,200 bytes
Peak : 389,595,200 bytes

Contained VSUs:
fioa: ID:0, UUID:137e065d-c927-438e-bff9-21cb9cacfd39

fioa State: Online, Type: block device
ID:0, UUID:137e065d-c927-438e-bff9-21cb9cacfd39
1205.00 GBytes device size
Format: 2353515625 sectors of 512 bytes

So now that it's working, any thoughts would be helpful. It needs to be formatted and mounted; will it ever show up in the GUI? What about passthrough? Thanks
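One common way to use a block device like /dev/fioa (the VSU listed as "fioa ... Type: block device" in the output above) as VM storage is an LVM-thin pool registered with `pvesm`; storage added that way is listed under Datacenter > Storage in the GUI. The sketch below only prints the commands for review rather than running them. The function name, volume group, thin pool and storage ID are hypothetical placeholders, and the printed commands would wipe the device.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print (do NOT run) commands that would create an
# LVM-thin pool on a block device and register it as Proxmox VE storage.
# WARNING: the printed commands destroy all data on the device.
# $1 = block device, $2 = volume group, $3 = thin pool, $4 = PVE storage ID
print_lvmthin_setup() {
    dev=$1 vg=$2 pool=$3 storage_id=$4
    echo "pvcreate $dev"
    echo "vgcreate $vg $dev"
    # Leave some free extents for thin-pool metadata instead of using 100%FREE.
    echo "lvcreate --type thin-pool -l 95%FREE -n $pool $vg"
    echo "pvesm add lvmthin $storage_id --vgname $vg --thinpool $pool --content images,rootdir"
}

# Example (placeholder names):
#   print_lvmthin_setup /dev/fioa fusionvmdata fusionlv fusion-lvm
```

Printing first makes it easy to double-check the device name before anything destructive happens; passthrough is a separate question this sketch does not address.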


New poster, user, etc. I just installed PVE for the first time yesterday; long-time Hyper-V user. Anyway, I came across your ioXtreme 80GB post and thought this might help:

Have you enabled SR-IOV, or tried to? I was attempting to set up both storage on a Fusion-io card (an HP ioDrive2 Duo 2TB here) and SR-IOV for my Mellanox ConnectX-4 Lx adapter. When I enabled the boot flags for iommu=on, I noticed EXACTLY the same behavior you saw above. For now I have reverted those changes, as having any storage at all is a bit more important to me than accelerated networking.

Just a thought! Hopefully this helps.
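To check which IOMMU-related flags a host actually booted with (the post mentions iommu=on; on Intel systems the usual parameter is intel_iommu=on), the kernel command line in /proc/cmdline can be inspected. A small sketch with a hypothetical function name; it assumes the common x86 parameter names and does not cover every IOMMU option.

```shell
#!/bin/sh
# Hypothetical helper: print IOMMU-related parameters from a kernel command line.
# Only the common x86 names (intel_iommu=, amd_iommu=, iommu=) are matched.
iommu_flags() {
    # One parameter per line, then keep the IOMMU-related ones.
    printf '%s\n' "$1" | tr ' ' '\n' | grep -E '^(intel_iommu|amd_iommu|iommu)='
}

# On the host:
#   iommu_flags "$(cat /proc/cmdline)"
```

Comparing this output before and after reverting the boot flags would show whether the card's behavior tracks the IOMMU setting.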

First off, thank you for this tutorial. I am out of my depth in Linux but can follow instructions, and posts like these are a big help.

I have followed your tutorial and have everything built and installed, except the firmware update:

# fio-status -a

Found 1 ioMemory device in this system

Driver version: 3.2.16 build 1731

Adapter: ioDimm

Fusion-io ioDIMM3 320GB, Product Number:FS1-001-321-CS, SN:6607, FIO SN:6607

ioDIMM3, PN:001194012, Alt PN:FS1-SS1-321-CS

PCIe Power limit threshold: Disabled

PCIe slot available power: 75.00W

PCIe negotiated link: 4 lanes at 2.5 Gt/sec each, 1000.00 MBytes/sec total

Connected ioMemory modules:

fct0: Product Number:FS1-001-321-CS, SN:6607

fct0 Status unknown: Driver is in MINIMAL MODE:

The firmware on this device is not compatible with the currently installed version of the driver

ioDIMM3, Product Number:FS1-001-321-CS, SN:6607

!! ---> There are active errors or warnings on this device! Read below for details.

ioDIMM3, PN:001194012, Alt PN:FS1-SS1-321-CS

Powerloss protection: not available

PCI:02:00.0, Slot Number:17

Vendor:1aed, Device:1005, Sub vendor:1aed, Sub device:1010

Firmware v5.0.7, rev 107053 Public

Geometry and capacity information not available.

Format: not low-level formatted

PCIe slot available power: 75.00W

PCIe negotiated link: 4 lanes at 2.5 Gt/sec each, 1000.00 MBytes/sec total

Internal temperature: 51.68 degC, max 52.17 degC

Internal voltage: avg 0.97V, max 2.38V

Aux voltage: avg 2.49V, max 2.38V

Lifetime data volumes:

Physical bytes written: 0

Physical bytes read : 0

RAM usage:

Current: 0 bytes

Peak : 0 bytes

ACTIVE WARNINGS:

The ioMemory is currently running in a minimal state.

I'm having trouble working out whether, and where, the instructions cover updating the firmware.

If it isn't part of the tutorial, would you mind pointing me to where I can find instructions?

The instructions on the Fusion-io USB stick I got with the card are for RPM packages.

Thanks in advance.
