Hi all,

I'm writing here because I can't find enough information about the issue I'm facing.

I decided to test a 4-node cluster with Proxmox. Everything had been running just fine for the last 4 weeks: Ceph was healthy and the VMs were running with no issues.

Yesterday I decided to update/upgrade all machines, because I had checked for updates and there were a lot available.

I updated only the first machine, but after its update/upgrade and reboot the whole cluster died.

I'm glad this was not a production environment!

So far I can't get the cluster running again.

When I try to log in via SSH to any of the machines, it hangs and can't continue.

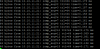

When I try to log in, it stays like this on the login page.

I have tried to check whether the Proxmox cluster PEM certificate that I created during cluster creation is still there, but SSH drops and hangs.

I have also tried the following, with little to no luck:

```
killall -9 corosync
systemctl restart pve-cluster
systemctl restart pvedaemon
systemctl restart pveproxy
systemctl restart pvestatd
```

It's important to find out whether it's possible to revive a cluster if this happens, because imagine hundreds of machines: you decide to update only 1 machine and the whole cluster dies.