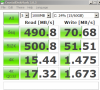

Regarding Ceph performance, I really have no idea whether your benchmarks are OK or not; I only started using Ceph last week.

I have 4 Ceph nodes, each with 4 SATA disks. One disk is used for journals and the other 3 for OSDs, for a total of 12 OSDs.

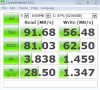

What kind of disk do you use? An SSD for the journal? How fast is it without a separate journal disk?