Yes, 10G ethernet. I've got 3 nodes, 12 disks per node. Each node also has 2 SSDs being used for OS/mon.

You might be right that my 10G interface on Proxmox could be a bottleneck. After that point, the IO Aggregator (connects all the blades together) has a 40G trunk to the primary switch, which then has 10G connections to each Ceph node giving the Ceph cluster a 30G combined bandwidth.

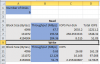

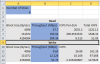

I just ran another set of tests directly on one Proxmox host. These are also against a 2x replication pool, so they should be close to real world, since replication between OSDs is happening during the test. Results below:

| Operation | Block Size (Bytes) | Throughput (MBps) | IOPS Per Disk | Total IOPS |
| --- | ---: | ---: | ---: | ---: |
| Read | 4096 | 48.027 | 341.5253333 | 12294.912 |
| Read | 131072 | 535.739 | 119.0531111 | 4285.912 |
| Read | 4194304 | 885.099 | 6.146520833 | 221.27475 |
| Write | 4096 | 22.034 | 156.6862222 | 5640.704 |
| Write | 131072 | 328.495 | 72.99888889 | 2627.96 |
| Write | 4194304 | 616.88 | 4.283888889 | 154.22 |
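For anyone who wants to check the IOPS columns: they appear to be derived from the throughput figures rather than measured separately. Here is a minimal sketch of that arithmetic, assuming "MBps" means MiB/s (1024 × 1024 bytes, which is the only reading under which the numbers line up) and 36 OSDs (3 nodes × 12 disks). The function name `iops_from_throughput` is just something I made up for illustration.

```python
MIB = 1024 * 1024   # assumption: the "MBps" column is really MiB/s
NUM_OSDS = 36       # 3 nodes x 12 disks per node


def iops_from_throughput(throughput_mib_s, block_size_bytes, num_disks=NUM_OSDS):
    """Return (total IOPS, IOPS per disk) for a given throughput and block size."""
    total_iops = throughput_mib_s * MIB / block_size_bytes
    return total_iops, total_iops / num_disks


# (operation, block size in bytes, throughput) taken from the table above
results = [
    ("read", 4096, 48.027),
    ("read", 131072, 535.739),
    ("read", 4194304, 885.099),
    ("write", 4096, 22.034),
    ("write", 131072, 328.495),
    ("write", 4194304, 616.88),
]

for op, block_size, throughput in results:
    total, per_disk = iops_from_throughput(throughput, block_size)
    print(f"{op:5s} {block_size:>8d} B  total={total:10.3f}  per disk={per_disk:8.3f}")
```

Note that "per disk" here is simply the total divided by the OSD count. It does not account for the extra backend writes that 2x replication causes, so the real per-disk write load is higher than that column suggests.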

To test the theory that my 10G link on Proxmox is saturated, I will run the test again from two nodes at once and combine the results.

Here is my benchmark of Ceph from one of the Proxmox nodes using rados. The Ceph network is bonded at 2 Gbps.

The 4194304-byte read maxed out the bandwidth at 204.35 MB/s, so the reorganized network seems to be working fine. The 4096-byte read is the only result where my setup kept up with yours, at 47.7 MB/s. You have 36 OSDs; I have 6. Does the number of OSDs really make a significant difference?
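To put a rough number on "maxed out", here is a small sketch that converts a benchmark throughput into a fraction of the raw link speed. It assumes the throughput figures are in MiB/s and it ignores TCP/IP and Ceph protocol overhead, so treat the result as a lower bound on how busy the link really is. The helper name `link_utilization` is hypothetical.

```python
def link_utilization(throughput_mib_s, link_gbps):
    """Fraction of raw link capacity used by a given throughput.

    Assumes throughput is in MiB/s and the link speed is in decimal Gbit/s.
    Protocol overhead is not included.
    """
    bits_per_second = throughput_mib_s * 1024 * 1024 * 8
    return bits_per_second / (link_gbps * 1e9)


# Large-block read on the bonded 2 Gbps Ceph network
print(f"2G bond: {link_utilization(204.35, 2):.0%}")

# Large-block read from the single 10G Proxmox host in the earlier post
print(f"10G NIC: {link_utilization(885.099, 10):.0%}")
```

On these assumptions, both large-block reads sit at a large share of their link's raw capacity, which fits the idea that the network, not the disks, is the limit for big sequential reads.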

I also ran some hdparm benchmarks on the HDDs themselves. Here are the average numbers from 10 runs:

CEPH Node 1 : 131 MB/s

CEPH Node 2 : 134 MB/s
