Hi, me again!

Rocking that 5.0 installation now! Great work, guys.

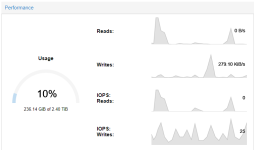

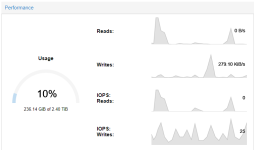

I did find a slight issue, though. My Ceph monitor was showing ~240 GB of usage.

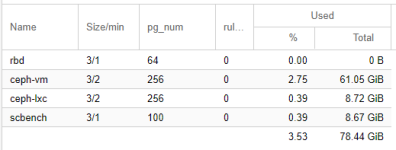

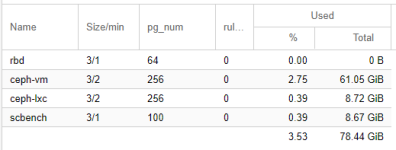

However, my pools combined are only ~80 GB. Even with a replication factor of 2, that adds up to ~160 GB, nowhere near the ~240 GB it 'thinks' it is using (if that is even how it works; maybe the figure already counts the replication).

I don't have any 'hidden' pools or anything like that, as far as I know.

```
root@hv1:~# ceph osd lspools
0 rbd,1 ceph-vm,2 ceph-lxc,3 scbench,
```

```
root@hv1:~# ceph df
GLOBAL:
    SIZE      AVAIL     RAW USED     %RAW USED
    2459G     2223G         236G          9.60
POOLS:
    NAME         ID     USED       %USED     MAX AVAIL     OBJECTS
    rbd          0           0         0          680G           0
    ceph-vm      1      62512M      8.23          680G       15700
    ceph-lxc     2       8930M      1.27          680G        2310
    scbench      3       8880M      1.26          680G        2221
```
I have no clue how Ceph takes all this data into account, but in my eyes the usage should be way lower.

Edit while writing this post: I deleted the 'scbench' pool, and it turns out it was taking up 26 GB! That pool was only 8.67 GB :O, which looks more like a replication of x3!
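A quick back-of-the-envelope check of that x3 guess, using the per-pool USED values from the `ceph df` output above. This is only a sketch: it assumes every pool is replicated with size 3 (confirm per pool with `ceph osd pool get <pool> size`) and treats Ceph's M/G suffixes as 1024-based.

```shell
#!/usr/bin/env bash
# Assumption: every pool is replicated with size=3 (check with: ceph osd pool get <pool> size).
# USED values in MiB, copied from the `ceph df` output above.
replicas=3
ceph_vm=62512
ceph_lxc=8930
scbench=8880

# Logical data stored across all pools, in MiB.
logical=$((ceph_vm + ceph_lxc + scbench))
# Raw space expected if each object is stored $replicas times, in GiB (integer division).
raw_gib=$((logical * replicas / 1024))
# Raw space that deleting only the scbench pool should free, in GiB.
scbench_gib=$((scbench * replicas / 1024))

echo "logical: ${logical} MiB"
echo "expected raw used: ~${raw_gib} GiB"
echo "scbench raw: ~${scbench_gib} GiB"
```

This lands at ~235 GiB against the reported 236G RAW USED, and ~26 GiB for scbench, which would be consistent with the pools using 3 replicas and RAW USED already including them.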

I'd love to hear some input / discussion.

D0peX