The OSD is down and out, ceph has to recover from other OSDs. Depending on the speed of the other OSDs this might take some time. Do you see any recovery traffic?

I found the following messages. Is it stuck?

Degraded data redundancy: 46/1454715 objects degraded (0.003%), 1 pg degraded, 1 pg undersized

pg 11.45 is stuck undersized for 220401.107415, current state active+undersized+degraded, last acting [5,4]

What size/min_size does the pool have? And are those OSDs online?

As an aside, regarding "My version is Virtual Environment 5.4-15": version 5.4 is EoL.

osd pool default min size = 2

osd pool default size = 3

Yes, the OSDs are online.
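With size 3 and min_size 2, the reported state of pg 11.45 (active+undersized+degraded, last acting [5,4]) can be read as follows. This is a minimal Python sketch under a simplified assumption: a PG counts as undersized when its acting set holds fewer OSDs than size, and it stays active as long as the acting set holds at least min_size OSDs. The helper name pg_flags is made up for illustration and is not part of any Ceph tooling.

```python
def pg_flags(acting, size, min_size):
    """Simplified view of two PG state flags, derived from the acting set."""
    replicas = len(acting)
    return {
        "undersized": replicas < size,   # fewer copies than the pool wants
        "active": replicas >= min_size,  # still enough copies to serve I/O
    }

# Values taken from the health message and the pool settings in this thread.
flags = pg_flags(acting=[5, 4], size=3, min_size=2)
print(flags)
```

Two of the three wanted copies exist, so the PG keeps serving I/O while it waits for a third OSD to be assigned.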

I know. I will upgrade after replacing the HDDs with SSDs.

pg 11.45 is stuck undersized for XXXXX.XXXXX, current state active+undersized+degraded, last acting [5,4]

The number XXXXX.XXXXX changes every time. Does that mean recovery is running?

Those are seconds. As long as Ceph is unable to recreate the third copy, the message will stay.

What does the 'ceph osd tree' output look like?
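Since the value after "stuck undersized for" is a number of seconds, it can help to convert it into something readable. A minimal Python sketch; the function name format_stuck_duration is made up for illustration:

```python
def format_stuck_duration(seconds: float) -> str:
    """Turn a 'stuck for' value in seconds into days/hours/minutes/seconds."""
    total = int(seconds)  # drop the fractional part
    days, rem = divmod(total, 86400)
    hours, rem = divmod(rem, 3600)
    minutes, secs = divmod(rem, 60)
    return f"{days}d {hours}h {minutes}m {secs}s"

# Value taken from the health message quoted in this thread.
print(format_stuck_duration(220401.107415))  # 2d 13h 13m 21s
```

A counter that keeps growing only shows that the PG has stayed in that state, not that recovery is progressing.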

Code:

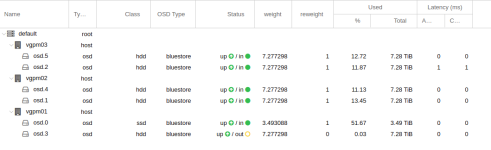

# ceph osd tree
ID CLASS WEIGHT   TYPE NAME       STATUS REWEIGHT PRI-AFF
-1       39.87958 root default
-3       10.77039     host vgpm01
 3   hdd  7.27730         osd.3       up        0 1.00000
 0   ssd  3.49309         osd.0       up  1.00000 1.00000
-5       14.55460     host vgpm02
 1   hdd  7.27730         osd.1       up  1.00000 1.00000
 4   hdd  7.27730         osd.4       up  1.00000 1.00000
-7       14.55460     host vgpm03
 2   hdd  7.27730         osd.2       up  1.00000 1.00000
 5   hdd  7.27730         osd.5       up  1.00000 1.00000

Do you have any special CRUSH rules? What does 'ceph osd dump' show?

Also, is there enough space on the cluster, since the SSDs are only half the size of the HDDs?

Since that host has only two OSDs, the OSD with reweight 1 will need to hold the data of the OSD with reweight 0. If there isn't enough space for that, the recovery can't continue. But since you still have two copies of your data, the HDD replacement can go ahead, as long as there will be enough space on the new SSDs.
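The space question can be estimated with a quick calculation. The Python sketch below assumes that the CRUSH weight roughly equals the OSD capacity in TiB (3.49309 for osd.0) and uses the backfillfull_ratio of 0.9 that appears in the 'ceph osd dump' output in this thread. The used-space figures are purely hypothetical placeholders, because the thread does not show per-OSD usage, and the function names are made up for illustration.

```python
def projected_fill(size_tib, used_tib, incoming_tib):
    """Fill ratio of an OSD after it takes over the incoming data."""
    return (used_tib + incoming_tib) / size_tib

def can_absorb(size_tib, used_tib, incoming_tib, backfillfull_ratio=0.9):
    """True if the OSD stays below the backfillfull threshold afterwards."""
    return projected_fill(size_tib, used_tib, incoming_tib) < backfillfull_ratio

OSD0_SIZE_TIB = 3.49309  # CRUSH weight of osd.0, assumed to equal capacity in TiB

# Hypothetical usage: osd.0 already holds 0.5 TiB, osd.3 holds 2.0 or 3.0 TiB.
print(can_absorb(OSD0_SIZE_TIB, used_tib=0.5, incoming_tib=2.0))  # True
print(can_absorb(OSD0_SIZE_TIB, used_tib=0.5, incoming_tib=3.0))  # False
```

Substitute the real per-OSD usage of your cluster for the placeholder values before drawing any conclusion.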

Code:

# ceph osd dump

epoch 676

fsid 0caf72c1-b05d-4f73-88da-ca4a2b89225f

created 2017-11-29 08:33:35.211810

modified 2021-03-01 18:29:29.970358

flags sortbitwise,recovery_deletes,purged_snapdirs

crush_version 19

full_ratio 0.95

backfillfull_ratio 0.9

nearfull_ratio 0.85

require_min_compat_client jewel

min_compat_client jewel

require_osd_release luminous

pool 10 'vgpool01' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 64 pgp_num 64 last_change 503 flags hashpspool stripe_width 0 application rbd

removed_snaps [1~25,28~2,2d~2]

pool 11 'cephfs_data' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 128 pgp_num 128 last_change 355 flags hashpspool stripe_width 0 application cephfs

pool 12 'cephfs_metadata' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 last_change 355 flags hashpspool stripe_width 0 application cephfs

max_osd 6

osd.0 up in weight 1 up_from 529 up_thru 654 down_at 0 last_clean_interval [0,0) 172.20.0.11:6805/1760366 172.20.0.11:6806/1760366 172.20.0.11:6807/1760366 172.20.0.11:6808/1760366 exists,up fd4702b5-605e-4079-8fd5-8a38e75b82d1

osd.1 up in weight 1 up_from 393 up_thru 670 down_at 391 last_clean_interval [373,390) 172.20.0.12:6801/3418 172.20.0.12:6802/3418 172.20.0.12:6803/3418 172.20.0.12:6804/3418 exists,up 7ac6976a-bee6-4b89-b2af-db2e1094d153

osd.2 up in weight 1 up_from 402 up_thru 658 down_at 398 last_clean_interval [378,397) 172.20.0.13:6805/3446 172.20.0.13:6806/3446 172.20.0.13:6807/3446 172.20.0.13:6808/3446 exists,up b7c24889-a023-4ca6-9d4b-e5fda00be3e4

osd.3 up out weight 0 up_from 676 up_thru 532 down_at 675 last_clean_interval [674,674) 172.20.0.11:6801/1693427 172.20.0.11:6802/1693427 172.20.0.11:6803/1693427 172.20.0.11:6804/1693427 exists,up dfd6c311-1fd7-474e-b737-afb23941de3c

osd.4 up in weight 1 up_from 412 up_thru 656 down_at 410 last_clean_interval [395,411) 172.20.0.12:6805/3578 172.20.0.12:6809/1003578 172.20.0.12:6810/1003578 172.20.0.12:6811/1003578 exists,up c3db964e-282c-4330-93a3-e5d300e496a4

osd.5 up in weight 1 up_from 401 up_thru 672 down_at 398 last_clean_interval [380,397) 172.20.0.13:6801/3217 172.20.0.13:6802/3217 172.20.0.13:6803/3217 172.20.0.13:6804/3217 exists,up 9a9ede50-ecef-44d2-a0b0-2bcad894ee05

I think there is enough space.

Should I mark the PG as lost with the following command? I don't know how it works.

https://docs.ceph.com/en/latest/rados/troubleshooting/troubleshooting-pg/

ceph pg 11.45 mark_unfound_lost delete

Apart from that one PG, you have three copies of your data, one on each node. The PG belongs to the cephfs_data pool. Just replace the OSD; the recovery should take care of the PG.
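The pool a PG belongs to can be read from its ID: the part before the dot is the pool number, and the part after it is the PG number in hexadecimal. A minimal Python sketch, using the pool list from the 'ceph osd dump' output in this thread; the names POOLS and parse_pgid are made up for illustration:

```python
# Pool IDs and names as listed in the 'ceph osd dump' output in this thread.
POOLS = {10: "vgpool01", 11: "cephfs_data", 12: "cephfs_metadata"}

def parse_pgid(pgid: str):
    """Split a PG ID such as '11.45' into (pool id, PG number)."""
    pool_str, pg_hex = pgid.split(".")
    return int(pool_str), int(pg_hex, 16)  # the PG part is hexadecimal

pool_id, pg_number = parse_pgid("11.45")
print(POOLS[pool_id], pg_number)  # cephfs_data 69
```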

Do you mean I should just ignore the warning and continue with the replacement process?

Will the recovery process start when osd.3 is destroyed? It is very strange, though, that only one PG is degraded.

Yes, continue with the replacement.

Yes, the recovery should start then. Ceph probably can't place any more data for that one PG. The CephFS has too many PGs; check out the pgcalc from Ceph.

Thank you very much. I will try it.

I have one more question. If I mark the PG as lost with the 'mark_unfound_lost delete' command that I mentioned, would that be pointless?

This will drop the reference to that PG and, with it, the data that is still on the other OSDs.

So, will the command recover the lost PG from the other OSDs to keep three PG replicas?

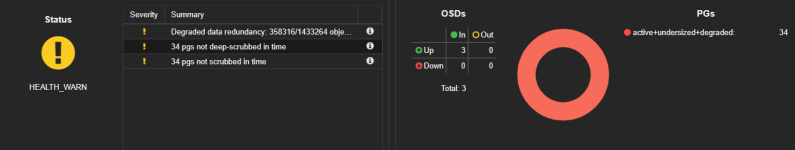

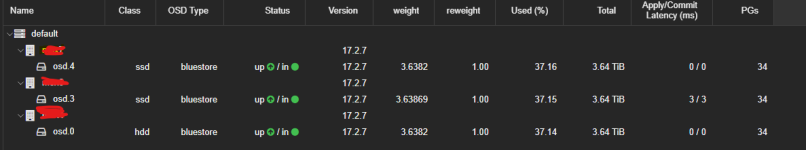

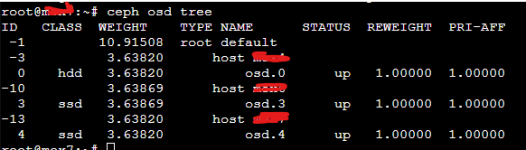

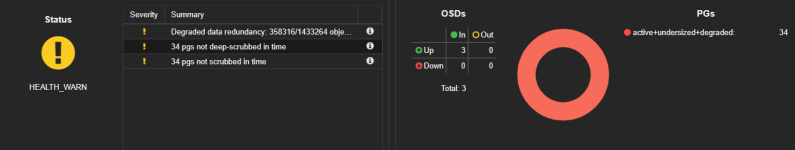

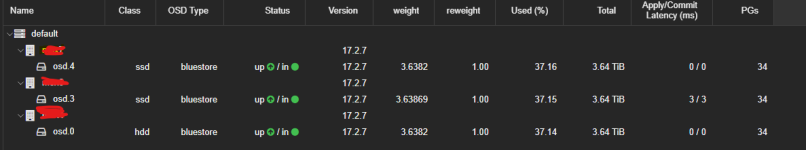

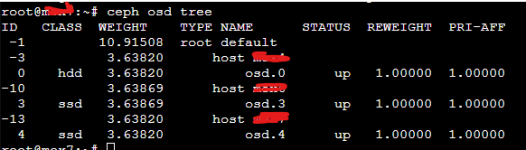

Hello, I'm new here to Proxmox. My environment is based on Proxmox 8.0.4 and Ceph 17.2.7. Some time ago (June 2025), one of the cluster nodes (there were four) failed, now there are three. I decided to clean up the data left by this failed node. I ran the following commands:

Code:

pvecm nodes

pvecm delnode name_of_node

rm -rf /etc/pve/nodes/name_of_node

ceph osd tree

ceph osd out osd.1

ceph osd out osd.2

ceph osd crush remove osd.1

ceph osd crush remove osd.2

ceph auth del osd.1

ceph auth del osd.2

ceph osd rm osd.1

ceph osd rm osd.2

ceph mon remove name_of_node

ceph osd crush tree

ceph osd crush remove name_of_node

After all this, the node disappeared from the host list. I see the same in Ceph, but I still get the following notifications:

Code:

Degraded data redundancy: 358316/1433264 objects degraded (25.000%), 34 pgs degraded, 34 pgs undersized

pg 1.0 is stuck undersized for 2d, current state active+undersized+degraded, last acting [0,3,4]

pg 2.0 is stuck undersized for 2d, current state active+undersized+degraded, last acting [3,0,4]

pg 2.1 is stuck undersized for 13d, current state active+undersized+degraded, last acting [3,0,4]

pg 2.2 is stuck undersized for 2d, current state active+undersized+degraded, last acting [3,4,0]

pg 2.3 is stuck undersized for 2d, current state active+undersized+degraded, last acting [4,3,0]

pg 2.4 is stuck undersized for 13d, current state active+undersized+degraded, last acting [0,3,4]

pg 2.5 is stuck undersized for 13d, current state active+undersized+degraded, last acting [4,0,3]

pg 2.6 is stuck undersized for 13d, current state active+undersized+degraded, last acting [0,4,3]

pg 2.7 is stuck undersized for 13d, current state active+undersized+degraded, last acting [3,4,0]

pg 2.8 is stuck undersized for 2d, current state active+undersized+degraded, last acting [0,4,3]

pg 2.9 is stuck undersized for 13d, current state active+undersized+degraded, last acting [0,4,3]

pg 2.a is stuck undersized for 13d, current state active+undersized+degraded, last acting [4,0,3]

pg 2.b is stuck undersized for 2d, current state active+undersized+degraded, last acting [4,0,3]

pg 2.c is stuck undersized for 13d, current state active+undersized+degraded, last acting [3,0,4]

pg 2.d is stuck undersized for 2d, current state active+undersized+degraded, last acting [4,3,0]

pg 2.e is stuck undersized for 13d, current state active+undersized+degraded, last acting [0,4,3]

pg 2.f is stuck undersized for 13d, current state active+undersized+degraded, last acting [3,0,4]

pg 2.10 is stuck undersized for 13d, current state active+undersized+degraded, last acting [3,0,4]

pg 2.11 is stuck undersized for 13d, current state active+undersized+degraded, last acting [3,0,4]

pg 2.12 is stuck undersized for 2d, current state active+undersized+degraded, last acting [3,0,4]

pg 2.13 is stuck undersized for 13d, current state active+undersized+degraded, last acting [0,3,4]

pg 2.14 is stuck undersized for 13d, current state active+undersized+degraded, last acting [3,4,0]

pg 2.15 is stuck undersized for 13d, current state active+undersized+degraded, last acting [0,4,3]

pg 2.16 is stuck undersized for 2d, current state active+undersized+degraded, last acting [0,3,4]

pg 2.17 is stuck undersized for 13d, current state active+undersized+degraded, last acting [3,4,0]

pg 2.18 is stuck undersized for 13d, current state active+undersized+degraded, last acting [4,3,0]

pg 2.19 is stuck undersized for 13d, current state active+undersized+degraded, last acting [4,0,3]

pg 2.1a is stuck undersized for 2d, current state active+undersized+degraded, last acting [0,4,3]

pg 2.1b is stuck undersized for 13d, current state active+undersized+degraded, last acting [3,4,0]

pg 2.1c is stuck undersized for 2d, current state active+undersized+degraded, last acting [4,3,0]

pg 2.1d is stuck undersized for 2d, current state active+undersized+degraded, last acting [3,0,4]

pg 2.1e is stuck undersized for 13d, current state active+undersized+degraded, last acting [0,4,3]

pg 2.1f is stuck undersized for 13d, current state active+undersized+degraded, last acting [0,3,4]

pg 3.0 is stuck undersized for 13d, current state active+undersized+degraded, last acting [3,4,0]

Code:

34 pgs not deep-scrubbed in time

pg 2.1f not deep-scrubbed since 2025-06-29T00:57:16.018258+0200

pg 2.1e not deep-scrubbed since 2025-06-29T14:36:09.200353+0200

pg 2.1d not deep-scrubbed since 2025-06-26T03:44:09.339075+0200

pg 2.1c not deep-scrubbed since 2025-06-28T11:02:51.533858+0200

pg 2.b not deep-scrubbed since 2025-06-26T12:22:49.060403+0200

pg 2.a not deep-scrubbed since 2025-06-25T01:00:14.869681+0200

pg 2.9 not deep-scrubbed since 2025-06-25T13:18:01.419705+0200

pg 2.8 not deep-scrubbed since 2025-06-26T08:33:55.385664+0200

pg 2.7 not deep-scrubbed since 2025-06-28T03:41:17.250540+0200

pg 2.6 not deep-scrubbed since 2025-06-29T06:32:50.278146+0200

pg 2.5 not deep-scrubbed since 2025-06-25T19:19:52.694212+0200

pg 2.4 not deep-scrubbed since 2025-06-24T01:18:55.816872+0200

pg 2.2 not deep-scrubbed since 2025-06-26T11:38:47.880316+0200

pg 3.0 not deep-scrubbed since 2025-06-29T17:18:53.638818+0200

pg 2.1 not deep-scrubbed since 2025-06-25T09:01:41.711958+0200

pg 2.0 not deep-scrubbed since 2025-06-29T12:01:53.612728+0200

pg 2.3 not deep-scrubbed since 2025-06-23T13:47:28.743175+0200

pg 1.0 not deep-scrubbed since 2025-06-28T06:56:17.382737+0200

pg 2.c not deep-scrubbed since 2025-06-23T04:38:38.001734+0200

pg 2.d not deep-scrubbed since 2025-06-25T08:25:24.127408+0200

pg 2.e not deep-scrubbed since 2025-06-27T00:48:15.770905+0200

pg 2.f not deep-scrubbed since 2025-06-27T20:32:53.724855+0200

pg 2.10 not deep-scrubbed since 2025-06-24T21:42:05.810651+0200

pg 2.11 not deep-scrubbed since 2025-06-29T23:49:32.538246+0200

pg 2.12 not deep-scrubbed since 2025-06-29T11:53:41.806235+0200

pg 2.13 not deep-scrubbed since 2025-06-29T13:22:51.715994+0200

pg 2.14 not deep-scrubbed since 2025-06-29T00:07:38.994351+0200

pg 2.15 not deep-scrubbed since 2025-06-22T13:17:01.163373+0200

pg 2.16 not deep-scrubbed since 2025-06-25T07:01:04.985874+0200

pg 2.17 not deep-scrubbed since 2025-06-29T02:02:46.110410+0200

pg 2.18 not deep-scrubbed since 2025-06-28T21:54:32.383481+0200

pg 2.19 not deep-scrubbed since 2025-06-28T10:21:04.576592+0200

pg 2.1a not deep-scrubbed since 2025-06-29T21:54:46.256726+0200

pg 2.1b not deep-scrubbed since 2025-06-30T08:39:44.515952+0200

Code:

HEALTH_WARN
Ceph version: 17.2.7

OSDs      In  Out
Up         3    0
Down       0    0
Total: 3

PGs: active+undersized+degraded: 34
Monitors: name_of_node4, name_of_node6, name_of_node7
Managers: name_of_nod4, name_of_nod6, name_of_nod7
Usage: 4.06 TiB of 10.92 TiB
Recovery/Rebalance: 75.00%

34 pgs not scrubbed in time

pg 2.1f not scrubbed since 2025-06-29T00:57:16.018258+0200

pg 2.1e not scrubbed since 2025-06-29T14:36:09.200353+0200

pg 2.1d not scrubbed since 2025-06-30T08:28:58.730660+0200

pg 2.1c not scrubbed since 2025-06-29T15:17:14.008050+0200

pg 2.b not scrubbed since 2025-06-28T19:59:27.540793+0200

pg 2.a not scrubbed since 2025-06-28T22:07:28.547796+0200

pg 2.9 not scrubbed since 2025-06-30T08:28:51.182830+0200

pg 2.8 not scrubbed since 2025-06-29T05:12:20.049512+0200

pg 2.7 not scrubbed since 2025-06-29T04:00:01.420769+0200

pg 2.6 not scrubbed since 2025-06-29T06:32:50.278146+0200

pg 2.5 not scrubbed since 2025-06-29T09:22:35.503815+0200

pg 2.4 not scrubbed since 2025-06-29T08:43:43.721463+0200

pg 2.2 not scrubbed since 2025-06-30T00:36:23.314842+0200

pg 3.0 not scrubbed since 2025-06-29T17:18:53.638818+0200

pg 2.1 not scrubbed since 2025-06-30T00:35:44.449062+0200

pg 2.0 not scrubbed since 2025-06-29T12:01:53.612728+0200

pg 2.3 not scrubbed since 2025-06-29T13:12:04.869167+0200

pg 1.0 not scrubbed since 2025-06-29T14:17:48.428687+0200

pg 2.c not scrubbed since 2025-06-29T16:16:46.787555+0200

pg 2.d not scrubbed since 2025-06-29T02:18:03.742347+0200

pg 2.e not scrubbed since 2025-06-29T13:37:31.337238+0200

pg 2.f not scrubbed since 2025-06-29T02:44:41.853456+0200

pg 2.10 not scrubbed since 2025-06-28T17:16:08.853755+0200

pg 2.11 not scrubbed since 2025-06-29T23:49:32.538246+0200

pg 2.12 not scrubbed since 2025-06-29T11:53:41.806235+0200

pg 2.13 not scrubbed since 2025-06-29T13:22:51.715994+0200

pg 2.14 not scrubbed since 2025-06-30T08:29:16.331041+0200

pg 2.15 not scrubbed since 2025-06-28T19:29:23.754531+0200

pg 2.16 not scrubbed since 2025-06-29T02:17:20.554940+0200

pg 2.17 not scrubbed since 2025-06-29T02:02:46.110410+0200

pg 2.18 not scrubbed since 2025-06-30T01:26:24.781887+0200

pg 2.19 not scrubbed since 2025-06-29T18:46:22.202276+0200

pg 2.1a not scrubbed since 2025-06-29T21:54:46.256726+0200

pg 2.1b not scrubbed since 2025-06-30T08:39:44.515952+0200

I tested cleaning up the node in a test environment, and unfortunately, no such errors occurred.

What could be the problem?

Please start a new thread and don't hijack old, unrelated ones.