Basically, I want a file server: hosting ISOs, VM images, and other types of files (images, videos, etc.).

I had a pool running but wasn't sure how to export a mount, or how that mount would fail over if I lost a node. Basically, how should the client connect? I think it's the architecture I'm struggling with.

If a node fails, do I need multiple monitor addresses in the client connection? Is the monitor what actually serves the data?

New to Ceph.
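For anyone with the same question: Ceph monitors don't serve file data. A client contacts a monitor to fetch the cluster maps, then reads and writes directly to the OSDs. Listing several monitors in the client config or mount string just means the client can still bootstrap if one of them is down. A minimal sketch, assuming you export files via CephFS with the Linux kernel client and its legacy `mon1,mon2,mon3:/path` device syntax; the monitor addresses, user name, and secret file path are hypothetical.

```python
import shlex


def cephfs_mount_source(monitors, path="/"):
    """Build a legacy mount.ceph device string such as 'mon1,mon2,mon3:/'."""
    if not monitors:
        raise ValueError("need at least one monitor address")
    if not path.startswith("/"):
        raise ValueError("CephFS path must be absolute")
    # Comma-separated monitors, then ':' and the path inside the filesystem.
    return ",".join(monitors) + ":" + path


def cephfs_mount_command(monitors, mountpoint, user="admin",
                         secretfile="/etc/ceph/admin.secret", path="/"):
    """Return the kernel-client mount command as an argument list."""
    source = cephfs_mount_source(monitors, path)
    options = f"name={user},secretfile={secretfile}"
    return ["mount", "-t", "ceph", source, mountpoint, "-o", options]


if __name__ == "__main__":
    # Hypothetical monitor addresses: one monitor per Proxmox node.
    mons = ["10.0.0.11:6789", "10.0.0.12:6789", "10.0.0.13:6789"]
    print(shlex.join(cephfs_mount_command(mons, "/mnt/cephfs")))
```

Running it prints `mount -t ceph 10.0.0.11:6789,10.0.0.12:6789,10.0.0.13:6789:/ /mnt/cephfs -o name=admin,secretfile=/etc/ceph/admin.secret`. Newer Ceph releases and kernels also accept a different device syntax, so check the mount.ceph documentation for your version.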

This is exactly where I'm at.

I'm a total newb when it comes to Proxmox and pretty green on virtualization in general. I've played with Hyper-V and making VHDXs, but that's about it. I've been brainstorming ways to build a high-availability storage system and came across GlusterFS with TrueNAS, but Gluster is now being abandoned, and Ceph seems like the best-supported platform for data redundancy across physical nodes.

But I can't seem to find any good documentation on how to use a Ceph pool as the backing storage for a NAS serving SMB/CIFS shares.

So I just said eff it, and I tried to do it myself. Insert gif of dog floating in outer space.

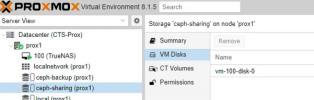

I set up a test 3-node Proxmox VE cluster and configured Ceph with size 3 / min_size 2 (three replicas, and a pool keeps serving I/O as long as two are available). Each node has 2 drives set up as Ceph OSDs, and I've created two Ceph pools for different use cases.
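To make the size/min_size numbers concrete, here is a rough sketch, assuming the default CRUSH rule (one replica per host) and that both pools share the same six OSDs. The drive sizes are hypothetical, and real usable space is lower once you leave headroom below Ceph's full ratios.

```python
def replicas_left(size, failed_hosts):
    """Replicas of a placement group still online, assuming one replica per host."""
    return max(size - failed_hosts, 0)


def io_allowed(size, min_size, failed_hosts):
    """A replicated pool keeps serving I/O while at least min_size replicas are up."""
    return replicas_left(size, failed_hosts) >= min_size


def usable_tb(osd_sizes_tb, size):
    """Raw capacity divided by the replica count (ignores full-ratio headroom)."""
    return sum(osd_sizes_tb) / size


if __name__ == "__main__":
    size, min_size = 3, 2
    for failed in range(4):
        print(f"{failed} node(s) down -> I/O allowed: {io_allowed(size, min_size, failed)}")
    # Hypothetical: 3 nodes x 2 OSDs x 1 TB each, shared by both pools.
    print(f"usable: {usable_tb([1.0] * 6, size):.1f} TB")
```

With three nodes, one node down leaves two replicas, so I/O continues; two nodes down drops below min_size and the pool blocks I/O. Note that with only three hosts there is nowhere to rebuild the third copy, so data stays degraded until the failed node comes back.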

This won't be a long-term setup, but I spun up a new TrueNAS SCALE VM on my first node and gave it a couple of CPUs and half the RAM. Once I got into the weeds configuring it, sure enough, the two pools appeared as storage I could create partitions on! After figuring out how to create shares on TrueNAS (also a newb there), I shared a folder out to my Windows machines and got a transfer going.

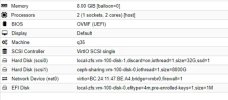

Now, just to set the stage: this is not an ideal setup. Each node has an i5-3570 and 16GB of DDR3, the Proxmox host OS is on a SATA SSD, and the nodes are connected by a single GbE NIC each, so there's no dedicated backhaul for Ceph yet.
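Why the shared GbE link matters: in a replicated pool the client writes to a primary OSD, which forwards the data to the other `size - 1` replicas. With the default one-replica-per-host rule those replicas sit on other nodes, so every byte copied in crosses the network twice more. A back-of-the-envelope sketch, assuming ideal line rate and no protocol overhead; these are estimates, not measurements from this setup.

```python
def replication_gb(client_gb, size):
    """Extra data the primary OSD sends to the other (size - 1) replicas."""
    return client_gb * (size - 1)


def line_rate_seconds(gb, link_gbit=1.0):
    """Best-case transfer time at line rate (decimal GB, no overhead)."""
    gb_per_second = link_gbit / 8  # 1 Gbit/s is 0.125 GB/s
    return gb / gb_per_second


if __name__ == "__main__":
    copy_gb = 5
    print(f"SMB copy at GbE line rate: {line_rate_seconds(copy_gb):.0f} s minimum")
    print(f"replica traffic on the same NICs: {replication_gb(copy_gb, 3)} GB")
```

For a 5 GB copy that is at least 40 s for the SMB leg alone, plus about 10 GB of replica traffic competing for the same NICs. Ceph's `cluster_network` setting exists to move that replication traffic onto a separate link.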

But all that aside, it fucking works. The file transfer goes from my GbE workstation to a TrueNAS VM on Prox1, which passes it through to the Ceph cluster on Prox1-3.

Next up, I wanted to test whether this thing would actually survive a 'disaster.' I started a 5GB file transfer and then sent a shutdown command to Prox3. The transfer speed dropped, but the transfer kept going, and afterward the transferred file's SHA hash matched the original.
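For anyone wanting to repeat the hash check, a minimal sketch that hashes both copies in chunks so a multi-GB file never has to fit in RAM. The post doesn't say which SHA variant was used; SHA-256 is an assumption here, and the paths you pass in are your own.

```python
import hashlib
import sys
from pathlib import Path


def sha256_file(path, chunk_size=1024 * 1024):
    """Hash a file in 1 MiB chunks and return the hex digest."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as f:
        # iter() with a sentinel keeps reading until read() returns b"".
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_content(original, copy):
    """True if both files have identical SHA-256 digests."""
    return sha256_file(original) == sha256_file(copy)


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python <script> <original file> <copied file>
    print("match" if same_content(sys.argv[1], sys.argv[2]) else "MISMATCH")
```

On the Windows side, PowerShell's `Get-FileHash` also defaults to SHA-256, so either tool can produce comparable digests.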

So I'm very excited about this. I'm ordering some dual SFP+ NICs for the nodes, and I plan to move the TrueNAS VM to a PVE host that isn't part of the Ceph cluster, then figure out a fallback in case the TrueNAS host fails.