Hello!

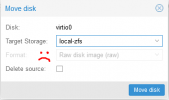

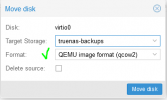

For some reason, when I try to convert a RAW disk stored on zfs-local to QCOW2, the format dropdown list is inactive. But if I try to move the disk to remote storage (an SMB share) using the Move Disk button, the dropdown is active.

It seems like I've missed something during the installation that makes it that way. Are there any prerequisites to being able to use QCOW2 format, and is there a way to make QCOW2 work on local storage without having to do something drastic like reinstalling Proxmox? Thanks!

Some info:

- Proxmox version: the latest (sorry, can't check the exact version atm, but it was updated today)

- Repository: no-subscription

- The server only has one SSD (for both the system and guests' data) and Proxmox is installed on a ZFS (RAID 0) partition

- The machines I'm having the issue with are in a cluster
