Hello everyone,

Today I pressed the "Shutdown" button in the upper right corner after selecting my single node on the left-hand side. No HA, no Ceph, just a single PROXMOX 7.2-7 machine.

I expected the system to shut down all guests gracefully and then shut itself down, as the "Reboot" button has done in the past.

Instead, it stopped all VMs hard (like pulling their power source) and then shut itself down properly...

I had never used the "Shutdown" button before, because I always used the "Reboot" button, and that one normally shuts down the guests properly. I can't test any of this now because it's my production machine and I don't want to harm my VMs with this behaviour.

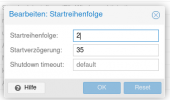

All guests have a custom boot order and boot timeout set, but no shutdown timeout. The shutdown timeout fields all show the greyed-out value "default" (which should be 180 s?).

Did I miss something in the docs, is it a bug, or can you explain to me why such critical behaviour happens without any warning?

Thanks,

Christian
