I am running version 6.1-7 on a 2+1 node setup. After I ran updates on both nodes, I got a message that a new kernel had been installed, so I migrated the active VMs to one node and rebooted the other.

I waited a little over 10 minutes for the server to come back up, and nothing happened. I connected to the Dell IPMI console interface, and it just shows a `grub>` prompt (not `grub rescue>`).

The only post I found about this was the Proxmox Grub Failure article, but I don't currently have physical access to the server to perform its steps, and walking someone else through them isn't an option either.

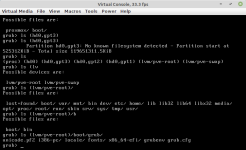

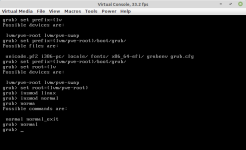

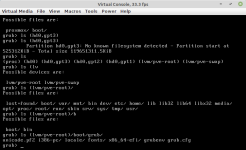

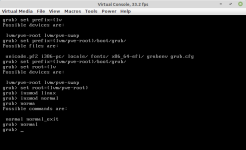

I've attempted to follow the solution in the highest-rated answer from this link (pictures below), but after rebooting I'm back at the same grub prompt:

> The boot process can't find the root partition (the part of the disk that contains the information for starting up the system), so you have to specify its location yourself.
>
> I think you have to look at something like this article: how-rescue-non-booting-grub-2-linux
>
> In short: at the `grub rescue>` command line, type
>
> `ls`
>
> ... to list all available devices. Then go through each one, typing something like the following (depending on what the `ls` command shows):
>
> `ls (hd0,1)/`
>
> `ls (hd0,2)/`
>
> ... and so on, until you find
>
> `(hd0,1)/boot/grub` OR `(hd0,1)/grub`
>
> In case of EFI:
>
> `(hd0,1)/efi/boot/grub` OR `(hd0,1)/efi/grub`
>
> ... now set the boot parameters accordingly. Type the following with the correct numbers (and with whichever GRUB directory path you found above), pressing Return after each line:
>
> `set prefix=(hd0,1)/grub`
>
> `set root=(hd0,1)`
>
> `insmod linux`
>
> `insmod normal`
>
> `normal`
>
> Now it should boot. Once it does, open a command line (a terminal) and run
>
> `sudo update-grub`
>
> ... this should correct the missing information, and it should boot next time.
>
> If not, you have to go through the steps again and might have to repair or reinstall grub (look at this article: https://help.ubuntu.com/community/Boot-Repair)
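Assuming the default Proxmox VE LVM layout (volume group `pve`, root logical volume `root`, and `/boot` stored on that root volume rather than on a separate partition), the steps above might translate roughly to the following at the `grub>` prompt. This is a sketch, not a verified fix: the `(lvm/pve-root)` device name is an assumption, and the output of `ls` should show the actual name. Lines starting with `#` are comments and don't need to be typed.

```
# Load LVM support so GRUB can see logical volumes (often already loaded)
insmod lvm
# List devices; logical volumes appear as (lvm/VG-LV), e.g. (lvm/pve-root)
ls
# Check that the GRUB directory is where we expect it on the root volume
ls (lvm/pve-root)/boot/grub
# Point GRUB at the root logical volume and its GRUB directory (assumed names)
set root=(lvm/pve-root)
set prefix=(lvm/pve-root)/boot/grub
# Load the normal module and hand over to the regular boot menu
insmod normal
normal
```

If `normal` brings up the usual boot menu and the node starts, the quoted answer's next step (`update-grub` from a shell on the booted node) still applies.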

It references another walkthrough, which I've also tried, but when I got to the "boot from grub" section, I didn't know how to set the parameters when using LVM.

The following is the example it gives:

> Booting From grub>
>
> This is how to set the boot files and boot the system from the `grub>` prompt. We know from running the `ls` command that there is a Linux root filesystem on `(hd0,1)`, and you can keep searching until you verify where `/boot/grub` is. Then run these commands, using your own root partition, kernel, and initrd image:
>
> `grub> set root=(hd0,1)`
>
> `grub> linux /boot/vmlinuz-3.13.0-29-generic root=/dev/sda1`
>
> `grub> initrd /boot/initrd.img-3.13.0-29-generic`
>
> `grub> boot`
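Assuming the default Proxmox VE LVM layout (volume group `pve`, root logical volume `root`, kernel and initrd under `/boot` on that volume), the walkthrough's example might become something like the sketch below. `<version>` is a placeholder, not a real file name; typing `linux /boot/vmlinuz-` and pressing TAB at the `grub>` prompt should list the kernels actually installed. The main difference from the `/dev/sda1` example is that the kernel's `root=` parameter takes the Linux device-mapper path of the logical volume (`/dev/mapper/pve-root`), while GRUB's own `set root` uses its `(lvm/pve-root)` device name. The `ro quiet` options are an assumption based on typical Debian-style defaults.

```
# Load LVM support and use the root logical volume as GRUB's root device (assumed names)
insmod lvm
set root=(lvm/pve-root)
# <version> is a placeholder: type "linux /boot/vmlinuz-" and press TAB to see installed kernels
linux /boot/vmlinuz-<version> root=/dev/mapper/pve-root ro quiet
# The initrd must match the kernel version chosen above
initrd /boot/initrd.img-<version>
boot
```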

So, only one of my two servers is functional, and I can't reboot it in case it has the same issue. Is there anything I can do remotely to fix this, or do I just hope the remaining server doesn't go down until I get back in a week? Also, am I entering the wrong parameters, or will the steps I've taken not work because the root filesystem is on an LVM partition?

If there is no option other than being there physically, is the Proxmox article I linked earlier about recovering from a grub failure still applicable?

Thanks in advance for any help that anyone can provide.