I've replaced a 10GbE adapter in one of our Proxmox nodes that was running a bonded pair of Cat6 cables to an aggregate switch. The old NIC and its replacement are both Intel Ethernet Controllers (10-Gigabit X540-AT2), so I assumed (probably incorrectly) that all I'd need to do was plug it in, find the IDs of the new ports, update the port IDs of the bond interface, jack in the existing Cat6 cables, and off we'd go. No dice.

On the outside of things, the network ports on both the new NIC and the switch show connection lights but no activity lights. On the Proxmox node, I've confirmed that the NIC is recognized by the system using

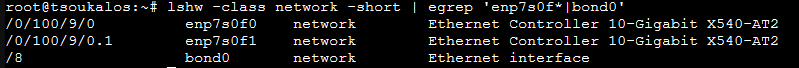

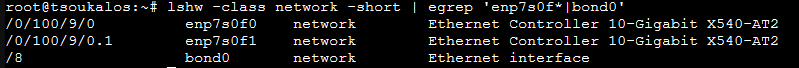

lshw -class network -short:

Running

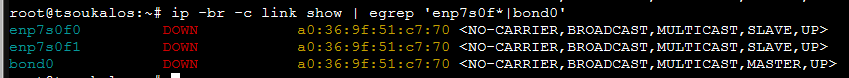

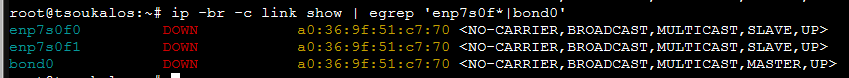

ip -br -c link show provides the following additional interface status info:

Following that, I checked the systemd journal and can see the bond0 interface enslaving both ports and changing their MTU from 1500 to 9000. Beyond this, however, I'm not sure which of the log entries I've found are helpful for working out why the ports and bond interface are down on the new NIC. I can see that the interfaces exist and that when they fail, they fail together, as expected.

root@tsoukalos:~# journalctl | egrep 'enp7s0f*|bond0'

Feb 04 16:59:47 tsoukalos kernel: ixgbe 0000:07:00.0 enp7s0f0: renamed from eth5

Feb 04 16:59:47 tsoukalos kernel: ixgbe 0000:07:00.1 enp7s0f1: renamed from eth2

Feb 04 16:59:55 tsoukalos systemd-udevd[733]: Could not generate persistent MAC address for bond0: No such file or directory

Feb 04 16:59:55 tsoukalos kernel: ixgbe 0000:07:00.0: registered PHC device on enp7s0f0

Feb 04 16:59:55 tsoukalos kernel: bond0: (slave enp7s0f0): Enslaving as a backup interface with a down link

Feb 04 16:59:55 tsoukalos kernel: ixgbe 0000:07:00.1: registered PHC device on enp7s0f1

Feb 04 16:59:55 tsoukalos kernel: bond0: (slave enp7s0f1): Enslaving as a backup interface with a down link

Feb 04 16:59:55 tsoukalos kernel: ixgbe 0000:07:00.0 enp7s0f0: changing MTU from 1500 to 9000

Feb 04 16:59:56 tsoukalos kernel: ixgbe 0000:07:00.1 enp7s0f1: changing MTU from 1500 to 9000

Feb 04 16:59:56 tsoukalos kernel: vmbr2: port 1(bond0) entered blocking state

Feb 04 16:59:56 tsoukalos kernel: vmbr2: port 1(bond0) entered disabled state

Feb 04 16:59:56 tsoukalos kernel: device bond0 entered promiscuous mode

Feb 04 16:59:56 tsoukalos kernel: device enp7s0f0 entered promiscuous mode

Feb 04 16:59:56 tsoukalos kernel: device enp7s0f1 entered promiscuous mode

Feb 04 16:59:57 tsoukalos kernel: 8021q: adding VLAN 0 to HW filter on device enp7s0f0

Feb 04 16:59:57 tsoukalos kernel: 8021q: adding VLAN 0 to HW filter on device enp7s0f1

Feb 04 16:59:57 tsoukalos kernel: 8021q: adding VLAN 0 to HW filter on device bond0
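A note on the filter used above, with a slightly tighter version as a sketch. In the egrep pattern, `f*` is a regular expression meaning "zero or more f characters" rather than a shell-style wildcard, so it matches the two ports more or less by accident. The function name `bond_events` is hypothetical; the interface names are the ones from the log above.

```shell
#!/bin/sh
# Hypothetical helper: keep only log lines that mention the bond or one of
# its two ports. It reads log text on stdin, so it can be fed from
# journalctl or from a saved log file.
bond_events() {
    # [01] matches exactly the two port suffixes seen in the journal output.
    grep -E 'enp7s0f[01]|bond0'
}

# Usage (kernel messages from the current boot only):
#   journalctl -k -b | bond_events
```

Limiting the journal to kernel messages from the current boot should keep the output short enough to spot any link up/down lines for the two ports.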

I'm pretty new to both Linux/Proxmox and Ethernet bonding so any tips here to get our node back online are much appreciated!

Chris