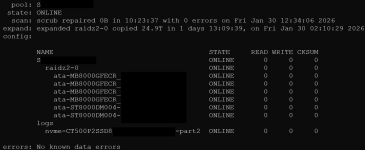

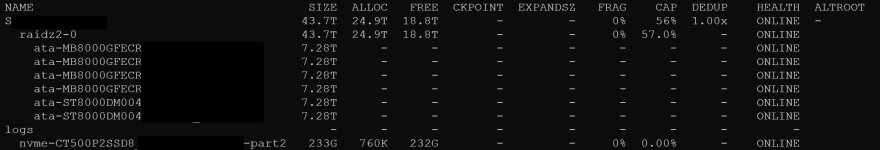

I had a zpool with 4x8TB SATA drives in a RAIDz2 with a total capacity of 16TB. I updates to PVE 9 and ZFS to 2.3 and attached 2 additional drives to the zpool using:

I waited after each drive addition for re-silvering and scrub. However, the addition of each drive only added 4TB of new space resulting in a total capacity of 24TB instead of the 32TB I was expecting. I tried to resolve this using the following steps:

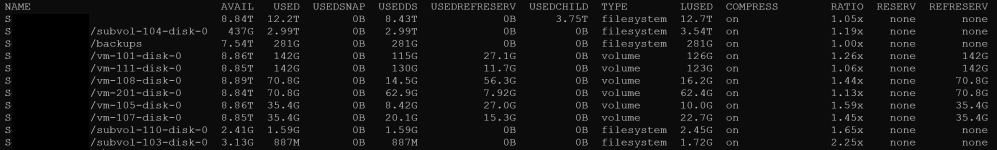

However, the resulting zpool is still showing only 24TB of total capacity. I came across a ZFS re-balancing script which just re-writes each file but zfs 2.3+ has a

I only did this for a few files but it it does not seem to be making a difference.

Does anyone have any insight into why I can't see the expected 32TB capacity and how to mitigate this?

```bash
zpool upgrade <poolname>
# each attach expands the raidz2-0 vdev by one disk (RAIDZ expansion)
zpool attach <poolname> raidz2-0 /dev/disk/by-id/<drive1>
zpool attach <poolname> raidz2-0 /dev/disk/by-id/<drive2>
```

After each drive addition, I waited for the resilver and a scrub to finish. However, each new drive only added 4TB of space, resulting in a total capacity of 24TB instead of the 32TB I was expecting. I tried to resolve this with the following steps:

```bash
zpool set autoexpand=on <poolname>
# ask ZFS to expand each new device to use all of its space
zpool online -e <poolname> /dev/disk/by-id/<drive1>
zpool online -e <poolname> /dev/disk/by-id/<drive2>
reboot
```

However, the pool still shows only 24TB of total capacity. I came across a ZFS rebalancing script that simply rewrites each file, but ZFS 2.3+ has `zfs rewrite`, which can do the same job. I tried to rewrite some files using a loop:

```bash
# rewrite every file under 400M in place; the -v output is appended to zfs_rewrite.list
find . -type f -size -400M -exec zfs rewrite -v {} >>./zfs_rewrite.list \;
reboot
```

I only did this for a few files, but it does not seem to make a difference.

Does anyone have any insight into why I can't see the expected 32TB capacity and how to mitigate this?
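A rough sketch of the arithmetic, assuming the space accounting described in the OpenZFS `zpool-attach` man page for RAIDZ expansion: blocks written before an expansion keep their old data-to-parity ratio, and the vdev's assumed parity ratio used for reporting (by `zfs list`, `df` and similar tools) does not change. If the original 4-wide RAIDZ2 ratio (2 data to 2 parity) is still applied to all six 8TB drives, the reported total comes out at 24TB, which matches what the pool shows. The 6-wide ratio (4 data to 2 parity) would give the expected 32TB. The function name `usable_tb` is hypothetical, and the calculation ignores metadata, padding, slop space and TB/TiB differences.

```bash
# Hypothetical helper: usable space in TB for a RAIDZ vdev.
#   $1 = number of drives whose raw space is counted
#   $2 = vdev width whose data-to-parity ratio is applied
#   $3 = parity level (2 for RAIDZ2)
#   $4 = drive size in TB
# Assumption: usable = drives * size * (width - parity) / width,
# ignoring metadata, padding and slop space.
usable_tb() {
  local drives=$1 width=$2 parity=$3 size=$4
  echo $(( drives * size * (width - parity) / width ))
}

DRIVE_TB=8
PARITY=2

for drives in 4 5 6; do
  # ratio of the original 4-wide vdev vs. the ratio of the expanded vdev
  old_ratio=$(usable_tb "$drives" 4 "$PARITY" "$DRIVE_TB")
  new_ratio=$(usable_tb "$drives" "$drives" "$PARITY" "$DRIVE_TB")
  echo "${drives} drives: old-ratio ${old_ratio}TB, new-ratio ${new_ratio}TB"
done
```

Running it prints both figures for 4, 5 and 6 drives. The old-ratio column (16, 20, 24TB) grows by 4TB per added drive, which is the pattern described above, while the new-ratio column ends at 32TB.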