Hi, I have an I/O problem with Micron 7400 NVMe SSDs (tested in both M.2 and U.3). I have read some threads on the forum about consumer or "low-end" SSDs, but the threads about the 7400 NVMe seem far from my problem.

On Windows VMs (Server / Windows 10 / Windows 11), across different hardware (server motherboard with EPYC 8124P, consumer boards with Ryzen 5-7 and Intel 10th-13th gen),

I have never reached 20,000 IOPS / 80 MB/s in the CrystalDiskMark Q32T1 4K random test,

and Q1T1 gives about 10K IOPS (30-50 MB/s).

But when I test on a Micron 5400 SATA SSD, I get more than 40,000 IOPS / 150 MB/s on both read and write (tested on Proxmox 6, 7, 8 and 9).

The 7400 SSD is rated for at least 95,000 IOPS for the 2 TB model.
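As a sanity check on the figures above, IOPS and throughput at a 4 KiB block size are tied together by simple arithmetic. A minimal sketch, assuming the MB/s values mean 10^6 bytes per second; the helper name `iops_to_mbs` is made up for illustration:

```shell
#!/bin/sh
# Convert an IOPS figure at 4 KiB per I/O into MB/s (integer, rounded down).
# iops_to_mbs is a hypothetical helper, not part of any tool.
iops_to_mbs() {
    echo $(( $1 * 4096 / 1000000 ))
}

iops_to_mbs 20000   # ceiling seen in the VM at Q32T1
iops_to_mbs 95000   # rated figure quoted for the 7400
```

This prints 81 and 389, so 20,000 IOPS is roughly 80 MB/s, while the rated 95,000 IOPS would be close to 390 MB/s.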

I have tested several hosts and several virtual disk options (async IO / write-back cache / SSD option / IO thread enabled). Some options give about +15% IOPS, but I am still far from reaching even 30,000 IOPS.

I also tried virtio drivers 0.285 and 0.271.

The virtual disks are attached through VirtIO SCSI single.
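For anyone comparing setups: the disk options listed above show up as key=value pairs on the disk line printed by `qm config <vmid>`. A rough sketch for pulling single options out of such a line. The sample line is invented for illustration (storage name, VM ID and values are assumptions, not the real config), and `disk_opt` is a hypothetical helper:

```shell
#!/bin/sh
# disk_opt LINE KEY: print the value of KEY from a Proxmox-style disk line,
# or "unset" when the key is absent. Hypothetical helper for illustration.
disk_opt() {
    val=$(printf '%s\n' "$1" | tr ',' '\n' | sed -n "s/^$2=//p")
    echo "${val:-unset}"
}

# Invented sample line, shaped like one line of `qm config <vmid>` output.
line='scsi0: local-zfs:vm-100-disk-0,aio=native,cache=writeback,iothread=1,ssd=1'

for key in aio cache iothread ssd discard; do
    echo "$key=$(disk_opt "$line" "$key")"
done
```

Listing the options this way for each tested VM makes it easier to see which combination produced the +15%.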

Any ideas that could help?

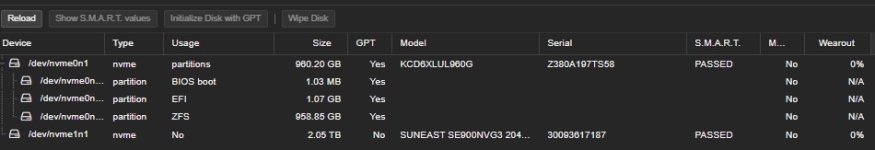

All tested hosts use a ZFS mirror of identical SSDs.

Additional information:

For the server I want to "patch" (Proxmox v8 host, ZFS mirror on Micron 7400), the results measured on the host itself are far from what I get inside the VM. The ZFS cache is set to 48 GB.

The host results are:

Q32T1 write: IOPS=72.0k, BW=281MiB/s (295MB/s)

Q1T1 write: IOPS=62.5k, BW=244MiB/s

I also tried a Linux VM (default Rocky install).

The Q32T1 results are better than on the host,

but the Q1T1 results are as slow as in the Windows VM (10K IOPS, 40-50 MB/s).

The host numbers above were measured with fio. The command for the Q32T1 test and its result:

Code:

fio --ioengine=libaio --direct=1 --rw=randwrite --bs=4k --numjobs=1 --iodepth=32 --runtime=10 --time_based --name=rand_read --filename=/tmp/pve/fio.4k --size=1G

Q32T1 write: IOPS=72.0k, BW=281MiB/s (295MB/s)
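To compare both queue depths with identical settings, the fio command above can be parameterised on `--iodepth`. A minimal sketch: the wrapper name `fio_cmd` is hypothetical, every flag is copied from the posted command, and it is an assumption that the Q1T1 run differs only in queue depth. The function only prints the command, it does not run fio:

```shell
#!/bin/sh
# Print (not run) the posted fio command for a given queue depth.
# fio_cmd is a hypothetical wrapper; only --iodepth varies.
fio_cmd() {
    echo "fio --ioengine=libaio --direct=1 --rw=randwrite --bs=4k" \
         "--numjobs=1 --iodepth=$1 --runtime=10 --time_based" \
         "--name=rand_read --filename=/tmp/pve/fio.4k --size=1G"
}

fio_cmd 32   # Q32T1, as posted
fio_cmd 1    # Q1T1, assuming only the queue depth changes
```

Piping the output to a shell (`fio_cmd 1 | sh`) would run the test on a machine with fio installed, on the host and inside the Linux VM alike.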
