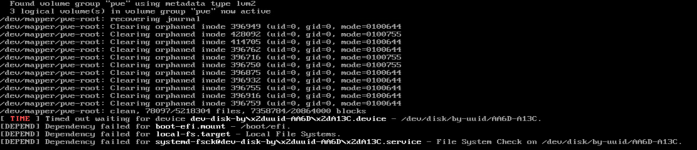

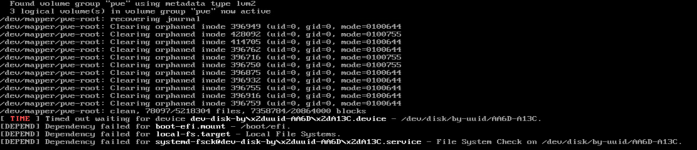

I currently have an issue when rebooting my Proxmox hosts: they get a timeout error while trying to mount the EFI disk during boot, which causes them to drop into emergency mode.

I didn't have this issue when rebooting the hosts last month, so I'm guessing it's caused by the change I made to `/etc/lvm/lvm.conf` when setting up multipath.

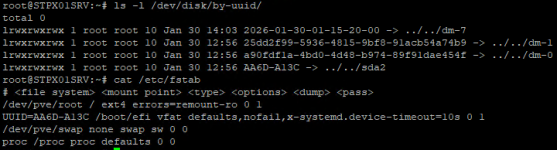


Here's my configuration:

```
devices {
    # added by pve-manager to avoid scanning ZFS zvols and Ceph rbds
    global_filter=[
        "a|/dev/sda.*|",
        "r|/dev/sd.*|",
        "r|/dev/zd.*|",
        "r|/dev/rbd.*|"
    ]
}
```

As you can see, I've only added the `"a|/dev/sda.*|",` and `"r|/dev/sd.*|",` lines to the existing configuration. My intention was for LVM to ignore the `sdX` devices, which should only be accessed through their multipath devices.
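To double-check what the filter above actually does: as I understand the lvm.conf documentation, LVM tests a device path against the `global_filter` patterns in order, the first matching pattern decides (`a` accepts, `r` rejects), a path that matches no pattern is accepted, and the patterns are unanchored, so they can match anywhere in the path. The Python sketch below models that for single path names only. It ignores LVM's handling of devices that have several symlinked names, and it assumes Python's `re` treats these simple patterns the same way LVM's regex engine does. The device names in the usage loop (`/dev/sdb`, `/dev/mapper/mpatha`, etc.) are hypothetical examples.

```python
import re

# global_filter entries copied from the lvm.conf shown above.
FILTER_ENTRIES = [
    "a|/dev/sda.*|",
    "r|/dev/sd.*|",
    "r|/dev/zd.*|",
    "r|/dev/rbd.*|",
]


def parse_entry(entry: str) -> tuple[str, "re.Pattern[str]"]:
    """Split an entry like 'a|regex|' into its action and compiled regex."""
    action, delim = entry[0], entry[1]
    end = entry.rindex(delim)
    if action not in ("a", "r") or end <= 1:
        raise ValueError(f"malformed filter entry: {entry!r}")
    return action, re.compile(entry[2:end])


def is_accepted(path: str, filters: list[tuple[str, "re.Pattern[str]"]]) -> bool:
    """Return True if the first matching pattern accepts the path (or none match)."""
    for action, regex in filters:
        if regex.search(path):  # unanchored: may match anywhere in the path
            return action == "a"
    return True  # no pattern matched: the device is accepted by default


FILTERS = [parse_entry(e) for e in FILTER_ENTRIES]

if __name__ == "__main__":
    for dev in ["/dev/sda1", "/dev/sdb", "/dev/sdc1", "/dev/zd16", "/dev/mapper/mpatha"]:
        print(dev, "accept" if is_accepted(dev, FILTERS) else "reject")
```

Under this model, `sda` and its partitions are accepted, every other `sdX` device plus the `zd` and `rbd` devices are rejected, and multipath maps under `/dev/mapper/` fall through to the default accept.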

For now, I've been able to work around this issue by adding the `nofail` option to my EFI drive's entry in `/etc/fstab`.
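Per the systemd.mount documentation, a mount marked `nofail` is only wanted (not required) by `local-fs.target`, so boot continues even if the device never shows up; the workaround hides the timeout rather than fixing it. The Python sketch below just illustrates the edit on a single fstab line. The UUID and the `defaults` options in the example entry are placeholders, not values from my hosts, and the function rejoins the fields with tabs instead of preserving the original spacing.

```python
def add_fstab_option(line: str, mount_point: str, option: str = "nofail") -> str:
    """Append `option` to the mount options of the fstab entry for `mount_point`.

    Comments, blank lines, entries for other mount points, and entries with
    fewer than four fields are returned unchanged.
    """
    stripped = line.strip()
    if not stripped or stripped.startswith("#"):
        return line
    fields = stripped.split()
    if len(fields) < 4 or fields[1] != mount_point:
        return line
    options = fields[3].split(",")
    if option not in options:  # keep the edit idempotent
        options.append(option)
    fields[3] = ",".join(options)
    return "\t".join(fields)


if __name__ == "__main__":
    # Placeholder entry; the real UUID is whatever your /etc/fstab contains.
    print(add_fstab_option("UUID=ABCD-1234 /boot/efi vfat defaults 0 1", "/boot/efi"))
```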

I don't have any issues mounting the EFI drive after boot has completed, although starting the mount unit with `systemctl start boot-efi.mount` still fails with the same message that's shown in the screenshot: "Timed out waiting for device dev-disk-by ..."
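The unit names in that message come from systemd's path escaping: the mount point `/boot/efi` becomes `boot-efi.mount`, and a `/dev/disk/by-...` source becomes a `dev-disk-by...` device unit (the full name is cut off in the message, so it isn't reproduced here). On the host, `systemd-escape --path --suffix=mount /boot/efi` performs this conversion. The Python sketch below reimplements the rules as I understand them from the systemd.unit documentation, simplified to absolute paths without `.` or `..` components; the UUID used in the example is a made-up placeholder.

```python
import string

# Characters systemd leaves unescaped in unit names (besides "/" -> "-").
_SAFE = set(string.ascii_letters + string.digits + ":_.")


def escape_path(path: str) -> str:
    """Approximate `systemd-escape --path` for a simple absolute path."""
    # Drop empty components: strips leading/trailing and duplicate slashes.
    trimmed = "/".join(part for part in path.split("/") if part)
    if not trimmed:
        return "-"  # the root directory "/" is escaped as "-"
    out = []
    for i, ch in enumerate(trimmed):
        if ch == "/":
            out.append("-")
        elif ch in _SAFE and not (i == 0 and ch == "."):
            out.append(ch)
        else:
            # Everything else, including "-", becomes a C-style \xNN escape per byte.
            out.extend(f"\\x{b:02x}" for b in ch.encode("utf-8"))
    return "".join(out)


def unit_name(path: str, suffix: str) -> str:
    """Build a unit name such as 'boot-efi.mount' from a path and unit type."""
    return f"{escape_path(path)}.{suffix}"


if __name__ == "__main__":
    print(unit_name("/boot/efi", "mount"))
    # Placeholder UUID, not the one from the error message.
    print(unit_name("/dev/disk/by-uuid/ABCD-1234", "device"))
```

Comparing the device unit named in the timeout with the escaped form of the `/boot/efi` source in `/etc/fstab` can show whether systemd is waiting for the same path the fstab entry refers to.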

Hopefully someone has an idea as to what's causing this issue and how to fix it.