Hi everyone,

I’m looking into using Proxmox for a deployment and had a few questions about its capabilities. I’d really appreciate it if anyone could share their experience or insights:

- Scaling: Can Proxmox handle a cluster with 128 nodes or more? Are there any practical limitations or tips for large-scale setups?

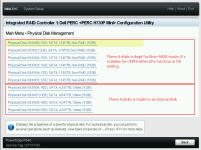

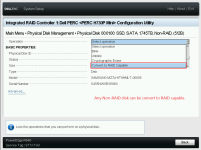

- Hot-Swap Disks: Does Proxmox have a disk replacement wizard with a graphical interface that makes hot-swapping drives easy and reliable? How well does it work in real-world scenarios?

- Storage & Backups:
  - Can I use internal storage, a SAN, or a NAS as a backup repository for VMs?
  - Does it support common storage protocols such as iSCSI, Fibre Channel (FC), and NFS?

- Architecture & Hyper-Converged Support:
  - Can Proxmox run on both x86 and ARM servers?
  - Is it possible to mix x86 and ARM nodes in the same cluster for unified management?

Thank you!