Hello everyone,

I am experiencing an issue with my ZFS pool usage reporting.

My ZFS pool "Crucial" is showing 71.69% used (663.64 GB of 925.73 GB). However, this pool only hosts 3 virtual machine disks:

- VM100: 274.88 GB

- VM101: 90.19 GB

- VM102: 90.19 GB

I would like to understand why ZFS is reporting this discrepancy and how I can free up space.
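
To put a number on the gap, here is a quick sketch of the arithmetic using only the figures quoted above. The variable names are just for illustration, and it assumes the three VM disk sizes and the pool figures are all reported in the same unit.

```python
# Figures copied from this post, in GB. Names are illustrative only.
vm_disks_gb = {"VM100": 274.88, "VM101": 90.19, "VM102": 90.19}
pool_used_gb = 663.64
pool_size_gb = 925.73

# Space the three VM disks should account for.
vm_total_gb = sum(vm_disks_gb.values())

# Space reported as used that the VM disks do not explain.
unexplained_gb = pool_used_gb - vm_total_gb

# Ratio of reported usage to the expected usage.
overhead_ratio = pool_used_gb / vm_total_gb

# Pool fill level, to cross-check against the reported percentage.
used_percent = 100 * pool_used_gb / pool_size_gb

print(f"VM disks total:   {vm_total_gb:.2f} GB")
print(f"Unexplained:      {unexplained_gb:.2f} GB")
print(f"Used vs expected: {overhead_ratio:.2f}x")
print(f"Pool used:        {used_percent:.2f} %")
```

So roughly 208 GB of the reported usage is not explained by the three VM disks, which is the part I am trying to track down.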

Questions:

- Could this be related to snapshots, ZFS metadata, or some other overhead?

- How can I properly check where the space is going?

- What would be the correct way to reclaim/optimize usage without risking data loss?

Current setup:

- Proxmox VE version: pve-manager/8.2.2

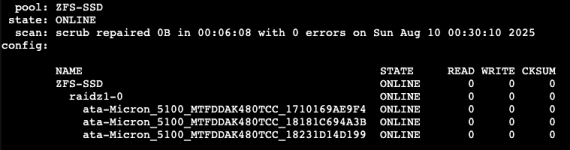

- ZFS version: zfs-2.2.3-pve2; the pool was created on SSDs as RAIDZ1 (vdev raidz1-0)

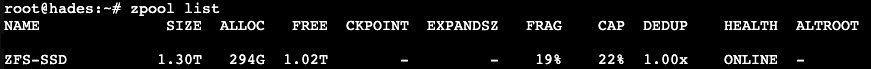

- Output of `zpool list`, `zfs list -o space`, and `zpool status`: