Current status: now using jumbo frames (MTU 9000) on the dedicated DAC link between the PBS and the QNAP. Also removed "defaults" from /etc/fstab and listed its options explicitly, so the implied async option is replaced by sync.
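To confirm that 9000-byte frames really pass end to end, a ping with the don't-fragment bit set is a quick test. This is a minimal sketch, assuming Linux iputils `ping` on the PBS and that 10.255.255.10 is the QNAP's address on the DAC link; `icmp_payload` and `check_jumbo` are hypothetical helper names, not existing tools.

```shell
# Hypothetical helper: largest ICMP payload that fits in one IPv4 packet of the given MTU.
icmp_payload() {
    echo $(( $1 - 28 ))   # subtract 20-byte IPv4 header + 8-byte ICMP header
}

# Hypothetical helper: ping HOST with fragmentation forbidden (-M do, Linux iputils).
# Fails if any device on the path has an MTU smaller than the one given.
check_jumbo() {
    ping -M do -c 3 -s "$(icmp_payload "$2")" "$1"
}
```

Usage on the PBS would be `check_jumbo 10.255.255.10 9000`. If that fails while a normal ping works, one end of the link is probably still at MTU 1500.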

Running mount on PBS: 10.255.255.10:/PBS on /mnt/qnap type nfs (rw,relatime,sync,vers=3,rsize=262144,wsize=262144,namlen=255,acregmin=0,acregmax=0,acdirmin=0,acdirmax=0,hard,noac,proto=tcp,timeo=600,retrans=2,sec=sys,mountaddr=10.255.255.10,mountvers=3,mountport=30000,mountproto=tcp,lookupcache=none,local_lock=none,addr=10.255.255.10)
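The option list can also be checked by script rather than by eye. This is a small sketch with a hypothetical `has_opt` helper that matches whole comma-separated options only. The option string is a shortened copy of the mount output above. Linux does not print "async" in mount output, so the presence of "sync" is the meaningful check.

```shell
# Hypothetical helper: succeed only if option $2 appears as a whole entry
# in the comma-separated list $1 (so "ac" does not match "noac").
has_opt() {
    case ",$1," in
        *",$2,"*) return 0 ;;
        *)        return 1 ;;
    esac
}

# Shortened copy of the options reported by mount for /mnt/qnap.
opts="rw,relatime,sync,vers=3,rsize=262144,wsize=262144,hard,noac,proto=tcp,lookupcache=none"

# Report each option that the fstab entry is supposed to enforce.
for want in sync noac lookupcache=none; do
    if has_opt "$opts" "$want"; then
        echo "ok: $want"
    else
        echo "MISSING: $want"
    fi
done
```

On the live system the string could come from `findmnt -no OPTIONS /mnt/qnap` instead of being pasted in.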

cat /etc/exports on QNAP: "/share/CACHEDEV1_DATA/PBS" 10.255.255.12(sec=sys,rw,sync,wdelay,secure,no_subtree_check,no_root_squash,fsid=79f31dbada044d5b1ad055457a33c8bf) 192.168.1.20(sec=sys,rw,sync,wdelay,secure,no_subtree_check,no_root_squash,fsid=79f31dbada044d5b1ad055457a33c8bf) 192.168.1.21(sec=sys,rw,sync,wdelay,secure,no_subtree_check,no_root_squash,fsid=79f31dbada044d5b1ad055457a33c8bf)

Current /etc/fstab on PBS:

# NFS

10.255.255.10:/PBS /mnt/qnap nfs rw,suid,dev,exec,auto,sync,nouser,fg,noac,lookupcache=none,mountproto=tcp,nfsvers=3

Also enabled the "ballooning device" on the PBS, with 8 GB minimum and 32 GB maximum memory.

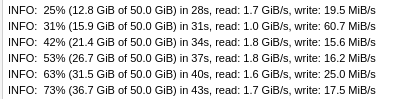

Running now, let's see how it turns out.