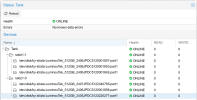

I have six 512GB SSD drives, configured as a single zpool with two RAIDZ1 vdevs to balance write speed and redundancy.

Naively, I should get 2TB of ZFS storage on my PVE node. I named the storage "Tank".
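The arithmetic behind that naive 2TB figure can be sketched as below. This is only a rough sketch: it assumes each drive holds exactly 512 × 10^9 bytes and that each RAIDZ1 vdev has three drives with one drive's worth of parity, and the constant and function names are made up for illustration. It also converts the results to binary units (TiB), since the ZFS command-line tools report sizes in powers of two, which is one reason different tools can show different figures for the same pool.

```python
# Assumed layout: 2 RAIDZ1 vdevs x 3 drives each, 512 GB (decimal) per drive.
DRIVE_BYTES = 512 * 10**9   # assumption: marketing "512GB" = 512 * 10^9 bytes
DRIVES_PER_VDEV = 3         # assumption: six drives split evenly over two vdevs
VDEVS = 2
PARITY_PER_VDEV = 1         # RAIDZ1 spends one drive's worth of space on parity


def raw_bytes() -> int:
    """Total capacity of all drives, parity included."""
    return DRIVE_BYTES * DRIVES_PER_VDEV * VDEVS


def usable_bytes() -> int:
    """Capacity left after parity, ignoring metadata and reserved space."""
    return DRIVE_BYTES * (DRIVES_PER_VDEV - PARITY_PER_VDEV) * VDEVS


def to_tb(n: int) -> float:
    """Decimal terabytes (10^12 bytes)."""
    return n / 10**12


def to_tib(n: int) -> float:
    """Binary tebibytes (2^40 bytes)."""
    return n / 2**40


if __name__ == "__main__":
    for label, n in (("raw", raw_bytes()), ("usable", usable_bytes())):
        print(f"{label:>6}: {to_tb(n):.2f} TB = {to_tib(n):.2f} TiB")
```

Under these assumptions the raw capacity comes out at about 3.07 TB (2.79 TiB) and the parity-adjusted capacity at about 2.05 TB (1.86 TiB). Metadata and reserved space are ignored here, so the figures a real pool reports will be somewhat lower.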

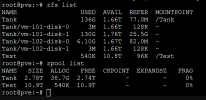

But the `zfs list` command tells me I have about 1.79TB of total space in Tank.

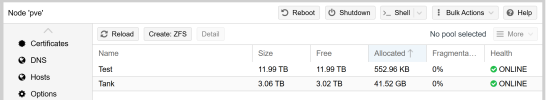

The ZFS page tells me I have 3.02TB of total space in Tank.

The `zpool list` command tells me I have 2.78TB of total space in Tank.

This is so confusing. I can't tell which one is correct and which is wrong.

I've been googling this problem for a week without finding a solution or an answer.

So how can I tell which total space is correct?
