You can read these with journalctl. For example:

Code:

# Follow - live log

journalctl -f

# Reverse order (newest entries first)

journalctl -r

# Entries since 2 days ago

journalctl --since '2 days ago'
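To narrow the journal down to the SATA link messages, something along these lines might help. It is only a sketch: `ata_events` is a made-up helper name, and the pattern assumes the kernel lines look like `kernel: ataN: ...` or `kernel: ataN.NN: ...`.

```shell
# Hypothetical helper: keep only kernel ATA lines from journal text on stdin.
# Assumes the usual "kernel: ataN: ..." / "kernel: ataN.NN: ..." message shape.
ata_events() {
    grep -E 'kernel: ata[0-9]+(\.[0-9]+)?: '
}

# Usage (assumes the journal still holds the last two days):
# journalctl --since '2 days ago' --no-pager | ata_events
```

The helper reads from stdin, so it also works on a log that was saved to a file earlier.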

Please post the exact model names of the drives, and preferably the SMART values of both drives.

Code:

smartctl -a /dev/disk/by-id/<your disk>

# for example:

smartctl -a /dev/disk/by-id/ata-ST8000NM017B-2TJ103_XXXXXXX
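If the full output is too long to read comfortably, a few attributes can be picked out of it. This is a rough sketch, not a complete health check: `smart_summary` is a made-up helper name, and the attribute names are the common ones, which vary by vendor. `UDMA_CRC_Error_Count` is the one usually looked at when cabling is suspected.

```shell
# Hypothetical helper: pick a few attributes out of `smartctl -A` output on stdin.
# Prints the attribute name and the last field of its row (usually the raw value).
# Attribute names vary by vendor, so an empty result does not mean the disk is fine.
smart_summary() {
    awk '$2 ~ /^(Reallocated_Sector_Ct|Current_Pending_Sector|UDMA_CRC_Error_Count)$/ { print $2, $NF }'
}

# Usage:
# smartctl -A /dev/disk/by-id/<your disk> | smart_summary
```

Please still post the full `smartctl -a` output, since the summary leaves out most of it.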

Sounds good.

I think we need a bit more information too:

How much memory does the machine have?

Which CPU is installed?

Other important hardware...?

What is the goal, what should run on the machine?

Seven days ago I disconnected the drives because one of them kept rebooting. Now, to run the tests you asked for, I have reconnected them, and they are working fine. Usually the problem seems to occur when I turn the server off and on again, but not always.

How much memory does the machine have? 32 GB

Which CPU is installed? Ryzen 5 2600

Other important hardware...? An NVIDIA GPU (maybe a GT 620), because my CPU isn't an APU

What is the goal, what should run on the machine? Right now I'm running, as VMs: pfSense, a personal Minecraft server, and Windows 10 for data (where I use my ZFS); as CTs: Pi-hole, Uptime Kuma, WireGuard

From this log, it looks like the cables...

Aug 02 14:00:28 pve kernel: ata2: SATA link up 1.5 Gbps (SStatus 113 SControl 310)

Aug 02 14:00:28 pve kernel: ata2.00: configured for UDMA/33

Aug 02 14:02:50 pve kernel: ata2: SATA link down (SStatus 0 SControl 310)

Aug 02 14:02:55 pve kernel: ata2: SATA link up 1.5 Gbps (SStatus 113 SControl 310)

Aug 02 14:02:55 pve kernel: ata2.00: configured for UDMA/33

Aug 02 14:05:16 pve kernel: ata2: SATA link down (SStatus 0 SControl 310)

Aug 02 14:05:22 pve kernel: ata2: SATA link up 1.5 Gbps (SStatus 113 SControl 310)

Aug 02 14:05:22 pve kernel: ata2.00: configured for UDMA/33

Aug 02 14:05:23 pve pvestatd[1543]: status update time (6.064 seconds)

Aug 02 14:07:43 pve kernel: ata2: SATA link down (SStatus 0 SControl 310)

Aug 02 14:07:49 pve kernel: ata2: SATA link up 1.5 Gbps (SStatus 113 SControl 310)

Aug 02 14:07:49 pve kernel: ata2.00: configured for UDMA/33

Aug 02 14:10:11 pve kernel: ata2: SATA link down (SStatus 0 SControl 310)

Aug 02 14:10:16 pve kernel: ata2: SATA link up 1.5 Gbps (SStatus 113 SControl 310)

Aug 02 14:10:16 pve kernel: ata2.00: configured for UDMA/33

Aug 02 14:12:36 pve kernel: ata2: SATA link down (SStatus 0 SControl 310)

Aug 02 14:12:43 pve kernel: ata2: SATA link up 1.5 Gbps (SStatus 113 SControl 310)

Aug 02 14:12:43 pve kernel: ata2.00: configured for UDMA/33

Aug 02 14:12:43 pve pvestatd[1543]: status update time (6.914 seconds)

Aug 02 14:13:23 pve systemd[1]: Starting systemd-tmpfiles-clean.service - Cleanup of Temporary Directories...

Aug 02 14:13:23 pve systemd[1]: systemd-tmpfiles-clean.service: Deactivated successfully.

Aug 02 14:13:23 pve systemd[1]: Finished systemd-tmpfiles-clean.service - Cleanup of Temporary Directories.

Aug 02 14:13:23 pve systemd[1]: run-credentials-systemd\x2dtmpfiles\x2dclean.service.mount: Deactivated successfully.

Aug 02 14:15:04 pve kernel: ata2: SATA link down (SStatus 0 SControl 310)

Aug 02 14:15:10 pve kernel: ata2: SATA link up 1.5 Gbps (SStatus 113 SControl 310)

Aug 02 14:15:10 pve kernel: ata2.00: configured for UDMA/33

Aug 02 14:17:01 pve CRON[467458]: pam_unix(cron:session): session opened for user root(uid=0) by (uid=0)

Aug 02 14:17:01 pve CRON[467459]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)

Aug 02 14:17:01 pve CRON[467458]: pam_unix(cron:session): session closed for user root

Aug 02 14:17:21 pve smartd[1139]: Device: /dev/sda [SAT], SMART Prefailure Attribute: 3 Spin_Up_Time changed from 210 to 211

Aug 02 14:18:07 pve kernel: ata2: SATA link down (SStatus 0 SControl 310)

Aug 02 14:18:14 pve kernel: ata2: SATA link up 1.5 Gbps (SStatus 113 SControl 310)

Aug 02 14:18:14 pve kernel: ata2.00: configured for UDMA/33

Aug 02 14:18:14 pve pvestatd[1543]: status update time (7.203 seconds)

Aug 02 14:20:15 pve kernel: ata2: SATA link down (SStatus 0 SControl 310)

Aug 02 14:20:20 pve kernel: ata2: SATA link up 1.5 Gbps (SStatus 113 SControl 310)

Aug 02 14:20:20 pve kernel: ata2.00: configured for UDMA/33

Aug 02 14:22:41 pve kernel: ata2: SATA link down (SStatus 0 SControl 310)

Aug 02 14:22:43 pve kernel: ata2: found unknown device (class 0)

Aug 02 14:22:47 pve kernel: ata2: SATA link up 1.5 Gbps (SStatus 113 SControl 310)

Aug 02 14:22:47 pve kernel: ata2.00: configured for UDMA/33

Aug 02 14:25:08 pve kernel: ata2: SATA link down (SStatus 0 SControl 310)

Aug 02 14:25:14 pve kernel: ata2: link is slow to respond, please be patient (ready=0)

Aug 02 14:25:14 pve kernel: ata2: SATA link up 1.5 Gbps (SStatus 113 SControl 310)

Aug 02 14:25:14 pve kernel: ata2.00: configured for UDMA/33

25 minutes later:

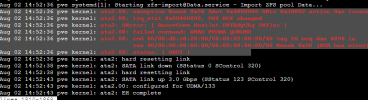

Aug 02 14:52:33 pve kernel: ata2: SATA link down (SStatus 0 SControl 300)

Aug 02 14:52:33 pve kernel: ata2: hard resetting link

Aug 02 14:52:33 pve kernel: ata2: SATA link down (SStatus 0 SControl 300)

Aug 02 14:52:33 pve kernel: ata2: limiting SATA link speed to <unknown>

Aug 02 14:52:33 pve kernel: ata2: hard resetting link

Aug 02 14:52:33 pve kernel: ata2: SATA link up 6.0 Gbps (SStatus 133 SControl 3F0)

Aug 02 14:52:33 pve kernel: ata2.00: configured for UDMA/133

Aug 02 14:52:33 pve kernel: ata2: limiting SATA link speed to 3.0 Gbps

Aug 02 14:52:33 pve kernel: ata2: SATA link up 3.0 Gbps (SStatus 123 SControl 320)

Aug 02 14:52:33 pve kernel: ata2.00: configured for UDMA/133
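For what it's worth, in the first log above the link drops roughly every two and a half minutes. The spacing can be checked with a small sketch like the one below; `link_down_gaps` is a made-up helper name, and it assumes the default short journal format (time in the third field) with all lines from the same day.

```shell
# Hypothetical helper: print seconds between consecutive "SATA link down" lines.
# Assumes journal short format (HH:MM:SS in field 3) and no midnight rollover.
link_down_gaps() {
    awk '/SATA link down/ {
        split($3, t, ":")
        now = t[1] * 3600 + t[2] * 60 + t[3]
        if (seen) print now - prev   # gap to the previous link-down event
        prev = now
        seen = 1
    }'
}

# Usage:
# journalctl --since today --no-pager | link_down_gaps
```

Regular gaps like these would point to something periodic, while irregular ones would fit a loose or marginal connection better, but that is only a rule of thumb.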