I had a RAID 10 ZFS pool over 4 HDDs with an SSD cache, and I thought "I wish I had known about ZFS sooner".

So I put together a RAID 10 ZFS pool over 4 SSDs (and later added an SSD cache), and now I am asking myself: what did I miss?

I have compression on. These numbers are worse than the 7200 RPM drives!

HP SSD S700 500GB drives x 4.

I created the first mirror, and then added the second mirror.
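For anyone trying to picture the layout, here is a rough dry-run sketch of that two-step build. It only prints the `zpool` commands rather than running them. The pool name `tank`, the `/dev/sdX` paths, and the `plan_pool` helper are placeholders for illustration, not my actual setup.

```shell
#!/bin/sh
# Dry-run sketch: prints the zpool commands instead of executing them.
# Pool name and device paths are hypothetical placeholders.
plan_pool() {
    pool=$1; d1=$2; d2=$3; d3=$4; d4=$5
    # The first mirror creates the pool.
    echo "zpool create $pool mirror $d1 $d2"
    # The second mirror is added as another top-level vdev;
    # ZFS stripes writes across both mirrors (the "RAID 10" layout).
    echo "zpool add $pool mirror $d3 $d4"
    # Check that the pool really shows two mirror vdevs.
    echo "zpool status $pool"
}

plan_pool tank /dev/sdb /dev/sdc /dev/sdd /dev/sde
```

If `zpool status` lists two `mirror-N` groups under the pool, the striped-mirror layout is what was intended.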

Individually (before using them in the pool), copying from one SSD to another gave me read/write speeds of around 90 MB/s.

I created an mdadm RAID 0 across partitions from two different SSDs and was very pleased with the speed. (I understand the risk of RAID 0.) I thought RAID 10 on ZFS would give a similar experience.

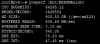

For root I have a Crucial 240GB SSD partitioned, and it shows:

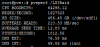

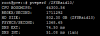

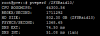

For comparison (I think I ran it right) the zpool:

What can I check? I am so ignorant on this.

PS: I also picked up an LSI MegaRAID SAS card, but I haven't tried it out yet.
