I am new to ZFS. I’m old school and I feel like I'll take ext4, mdadm, and lvm-thin to the grave with me. But I like to learn new tricks. I'd like to make the switch but want to make sure I am not F'ing myself over. Ive done some searhing where possible but leaves me sometimes with more questions than answers.

2) I could get away with NOT putting these into a mirror and just keep backups... My brain kicked back in and said I should probably make a partition large enough to store production VMs and then partition 200-500 GB on each stick for more like non critical VMs that if lost are no big deal. Sometimes I need a space to drop few 100 GB temporally etc.

3) I think I came to the conclusion that for safety purposes I should probably create at least a ~1TB Raid1 (mirror in zfs terms) and then maybe allocate the other empty each as single drives... That gives me almost another 1.8 TB of mess around storage. that I could even use to do some local backups (say sftp from a Hosting Pane, or game server storage) .. Of course, I also offsite the main backups but this be a quick local backup spot that if I lose it so well, I have the slow backup location.

Note: In terms of ZFS... I see VDEVs as Partitions which could be in 1 or more drives. ZFS Pools then combines vdevs as assigned

-- ~80 GB "root" drive. *** The installer did this for me I gave it the size.

-- 500 GB Mirror

-- I left a ~300 GB BLANK unused area

-- Created a ~900 GB "partition" at the END of EACH drive which I then created 2 (two) 900 GB standalone ZFS areas in the GUI.

Question 1a: Related to 1st... Could I in the future delete the stand-alone sections (on each drive) and expand my mirror... might be redundant question

--- Maybe --- zpool set autoexpand=on <name of pool> ??

Question 2: Was reading about trim. I assume I should keep auto trim OFF and run a weekly trim? or how often would you recommend... I believe this kills SSD life.

Question 3: How will ZFS handle a drive failure on my mirror. Even the BOOT mirror. I am used to mdadm (linux raid) I am very good at managing this / replacing drives etc... how would I replace / rebuild my Mirrors. Can it be done ONLINE (online rebuild?)

Question 4: Will I have issues or bad practice by creating multiple "partitions / vdevs" on the SAME drive like I did with Gparted. Where I have a Mirror "pool" and then stand alone "pool"

Question 5: The installer made 3 /rpool paths on same pool... should I care. Are they used for different items?

Question 6: SWAP ... ProxMox shows me N/A SWAP usage in the GUI.. No swap partition exist from what I can see.. Should I have a SWAP space partition? Or Not needed? I’ve read some bad things happen with zfs swap. I also think SWAP would kill SSD. https://forum.proxmox.com/threads/w...-disks-zfs-file-root-system.95115/post-414319

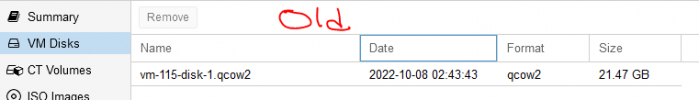

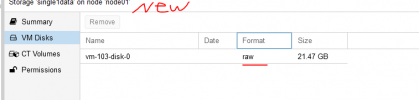

Question 7: I have a handful of .qcow2 VMs.. I also sometimes look to deploy an OVA / vmware type disk. I see some mixed messaging on if ZFS "partitions / storage" can support this... will these types of VMs work? Reference -- https://forum.proxmox.com/threads/no-qcow2-on-zfs.37518/ whats ZFS ZVOL and can it be enabled?

Question 8: Other incompatibilities / issues around ZFS ... Or things I should also be setting up? I’m trying to not get scared away from ZFS and fall back to my trusted ext4.

Conundrum: Should I just Mirror entire 2 Drives? ... was hoping to utilize the stand along part for non-critical storage. (its also NVMe, failure is rare till worn out + I’d be ok with losing the data)

Situation:

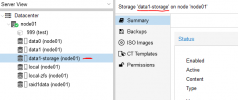

I have 2x - 1.9 TB NVMe drives in a brand new server that im trying to figure out best storage setup for. I plan on migrating off an old Proxmox 6 box. I have installed 7 to new to just play around with ZFS till comfortable.Thoughts:

1) I have been using NVMe for long time at home they have not yet failed (or worn out) I checked the new drives and these drives are fairly new only few dozen TB written.2) I could get away with NOT putting these into a mirror and just keep backups... My brain kicked back in and said I should probably make a partition large enough to store production VMs and then partition 200-500 GB on each stick for more like non critical VMs that if lost are no big deal. Sometimes I need a space to drop few 100 GB temporally etc.

3) I think I came to the conclusion that for safety purposes I should probably create at least a ~1TB Raid1 (mirror in zfs terms) and then maybe allocate the other empty each as single drives... That gives me almost another 1.8 TB of mess around storage. that I could even use to do some local backups (say sftp from a Hosting Pane, or game server storage) .. Of course, I also offsite the main backups but this be a quick local backup spot that if I lose it so well, I have the slow backup location.

Note: In terms of ZFS... I see VDEVs as Partitions which could be in 1 or more drives. ZFS Pools then combines vdevs as assigned

Currently:

My Pre-Setup / Mess around stage... I used gparted (can’t use that to make zfs itself I learned) to help me make multiple "unallocated partition" so proxmox could then see the storage and make "zfs pools"-- ~80 GB "root" drive. *** The installer did this for me I gave it the size.

-- 500 GB Mirror

-- I left a ~300 GB BLANK unused area

-- Created a ~900 GB "partition" at the END of EACH drive which I then created 2 (two) 900 GB standalone ZFS areas in the GUI.

ZFS related Questions:

Question 1: Can I EXPAND easily my "mirror" pool / vdev Into BLANK UN-allocated blocks NEXT to it "partition" wise... or for that matter any of my "partitions". I tried to go into GPARTED and expand the "partition" but it would not let me. Figure I could expand my 500GB to 700GB by adding 200 to both drives in my unused area then tell ZFS to go use that space.Question 1a: Related to 1st... Could I in the future delete the stand-alone sections (on each drive) and expand my mirror... might be redundant question

--- Maybe --- zpool set autoexpand=on <name of pool> ??

Question 2: Was reading about trim. I assume I should keep auto trim OFF and run a weekly trim? or how often would you recommend... I believe this kills SSD life.

Question 3: How will ZFS handle a drive failure on my mirror. Even the BOOT mirror. I am used to mdadm (linux raid) I am very good at managing this / replacing drives etc... how would I replace / rebuild my Mirrors. Can it be done ONLINE (online rebuild?)

Question 4: Will I have issues or bad practice by creating multiple "partitions / vdevs" on the SAME drive like I did with Gparted. Where I have a Mirror "pool" and then stand alone "pool"

Question 5: The installer made 3 /rpool paths on same pool... should I care. Are they used for different items?

Question 6: SWAP ... ProxMox shows me N/A SWAP usage in the GUI.. No swap partition exist from what I can see.. Should I have a SWAP space partition? Or Not needed? I’ve read some bad things happen with zfs swap. I also think SWAP would kill SSD. https://forum.proxmox.com/threads/w...-disks-zfs-file-root-system.95115/post-414319

Question 7: I have a handful of .qcow2 VMs.. I also sometimes look to deploy an OVA / vmware type disk. I see some mixed messaging on if ZFS "partitions / storage" can support this... will these types of VMs work? Reference -- https://forum.proxmox.com/threads/no-qcow2-on-zfs.37518/ whats ZFS ZVOL and can it be enabled?

Question 8: Other incompatibilities / issues around ZFS ... Or things I should also be setting up? I’m trying to not get scared away from ZFS and fall back to my trusted ext4.

Conundrum: Should I just Mirror entire 2 Drives? ... was hoping to utilize the stand along part for non-critical storage. (its also NVMe, failure is rare till worn out + I’d be ok with losing the data)

Code:

# zfs list

NAME USED AVAIL REFER MOUNTPOINT

raid1data 960K 481G 96K /raid1data

rpool 1.47G 75.1G 104K /rpool

rpool/ROOT 1.46G 75.1G 96K /rpool/ROOT

rpool/ROOT/pve-1 1.46G 75.1G 1.46G /

rpool/data 96K 75.1G 96K /rpool/data

single0data 1.43M 845G 96K /single0data

single1data 876K 845G 96K /single1data

~# lsblk -o NAME,FSTYPE,SIZE,MOUNTPOINT,LABEL

NAME FSTYPE SIZE MOUNTPOINT LABEL

zd0 32G

nvme1n1 1.7T

├─nvme1n1p1 1007K

├─nvme1n1p2 vfat 512M

├─nvme1n1p3 zfs_member 79.5G rpool

├─nvme1n1p4 zfs_member 500G raid1data

└─nvme1n1p5 zfs_member 878.9G single1data

nvme0n1 1.7T

├─nvme0n1p1 1007K

├─nvme0n1p2 vfat 512M

├─nvme0n1p3 zfs_member 79.5G rpool

├─nvme0n1p4 zfs_member 500G raid1data

└─nvme0n1p5 zfs_member 878.9G single0data

##### fdisk -l

Disk model: SAMSUNG MZQLB1T9HAJR-00007

Device Start End Sectors Size Type

/dev/nvme1n1p1 34 2047 2014 1007K BIOS boot

/dev/nvme1n1p2 2048 1050623 1048576 512M EFI System

/dev/nvme1n1p3 1050624 167772160 166721537 79.5G Solaris /usr & Apple ZFS

/dev/nvme1n1p4 167774208 1216350207 1048576000 500G Solaris /usr & Apple ZFS

/dev/nvme1n1p5 1907548160 3750748159 1843200000 878.9G Solaris /usr & Apple ZFS

Disk /dev/nvme0n1: 1.75 TiB, 1920383410176 bytes, 3750748848 sectors

Disk model: SAMSUNG MZQLB1T9HAJR-00007

Device Start End Sectors Size Type

/dev/nvme0n1p1 34 2047 2014 1007K BIOS boot

/dev/nvme0n1p2 2048 1050623 1048576 512M EFI System

/dev/nvme0n1p3 1050624 167772160 166721537 79.5G Solaris /usr & Apple ZFS

/dev/nvme0n1p4 167774208 1216350207 1048576000 500G Solaris /usr & Apple ZFS

/dev/nvme0n1p5 1907548160 3750748159 1843200000 878.9G Solaris /usr & Apple ZFSAttachments

Last edited: