Hi,

I recently installed Proxmox VE on a new server and I'm getting performance issues with disks on a ZFS pool.

Proxmox: 7.4-3

CPU: AMD EPYC 9354P

RAM: 512GB

NVME: 4*1.6TB Dell Ent NVMe PM1735a

The ZFS pool looks like that:

Here's the result of pveversion -v:

Here's the config of the VM i'm using:

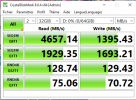

Here are some benchmark results, from the VM:

To add some details, I experience issues when using the VMs, mainly with programs that rely on databases a lot. I recently moved a VM from an old Hyper-V server to this brand new Proxmox server, and the performance when using the database on ZFS with NVMe is worse than on 8 years old SSDs (on Hyper-V).

Also I decided to limit ZFS memory usage for ARC cache. I love the "ZFS manages the memory, everything will be fine" idea, but I need to be able to see the amount of RAM used on the server from the Proxmox UI for resource provisionning. I allocated 64GB of RAM to arc cache, for 6.4 TB of disk storage, which I think is plenty.

Any idea what the issue could be ?

I recently installed Proxmox VE on a new server and I'm seeing poor disk performance on a ZFS pool.

Proxmox: 7.4-3

CPU: AMD EPYC 9354P

RAM: 512GB

NVME: 4*1.6TB Dell Ent NVMe PM1735a

The ZFS pool looks like this:

Code:

    root@pve-1-1:~# zpool list
    NAME   SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
    nvme  2.91T  1.92T  1008G        -         -    41%    66%  1.00x    ONLINE  -

    root@pve-1-1:~# zpool get all nvme
    NAME  PROPERTY                       VALUE                 SOURCE
    nvme  size                           2.91T                 -
    nvme  capacity                       66%                   -
    nvme  altroot                        -                     default
    nvme  health                         ONLINE                -
    nvme  guid                           16418581346593086261  -
    nvme  version                        -                     default
    nvme  bootfs                         -                     default
    nvme  delegation                     on                    default
    nvme  autoreplace                    off                   default
    nvme  cachefile                      -                     default
    nvme  failmode                       wait                  default
    nvme  listsnapshots                  off                   default
    nvme  autoexpand                     off                   default
    nvme  dedupratio                     1.00x                 -
    nvme  free                           1008G                 -
    nvme  allocated                      1.92T                 -
    nvme  readonly                       off                   -
    nvme  ashift                         12                    local
    nvme  comment                        -                     default
    nvme  expandsize                     -                     -
    nvme  freeing                        0                     -
    nvme  fragmentation                  41%                   -
    nvme  leaked                         0                     -
    nvme  multihost                      off                   default
    nvme  checkpoint                     -                     -
    nvme  load_guid                      13402657768618699534  -
    nvme  autotrim                       off                   default
    nvme  compatibility                  off                   default
    nvme  feature@async_destroy          enabled               local
    nvme  feature@empty_bpobj            active                local
    nvme  feature@lz4_compress           active                local
    nvme  feature@multi_vdev_crash_dump  enabled               local
    nvme  feature@spacemap_histogram     active                local
    nvme  feature@enabled_txg            active                local
    nvme  feature@hole_birth             active                local
    nvme  feature@extensible_dataset     enabled               local
    nvme  feature@embedded_data          active                local
    nvme  feature@bookmarks              enabled               local
    nvme  feature@filesystem_limits      enabled               local
    nvme  feature@large_blocks           enabled               local
    nvme  feature@large_dnode            enabled               local
    nvme  feature@sha512                 enabled               local
    nvme  feature@skein                  enabled               local
    nvme  feature@edonr                  enabled               local
    nvme  feature@userobj_accounting     enabled               local
    nvme  feature@encryption             enabled               local
    nvme  feature@project_quota          enabled               local
    nvme  feature@device_removal         enabled               local
    nvme  feature@obsolete_counts        enabled               local
    nvme  feature@zpool_checkpoint       enabled               local
    nvme  feature@spacemap_v2            active                local
    nvme  feature@allocation_classes     enabled               local
    nvme  feature@resilver_defer         enabled               local
    nvme  feature@bookmark_v2            enabled               local
    nvme  feature@redaction_bookmarks    enabled               local
    nvme  feature@redacted_datasets      enabled               local
    nvme  feature@bookmark_written       enabled               local
    nvme  feature@log_spacemap           active                local
    nvme  feature@livelist               enabled               local
    nvme  feature@device_rebuild         enabled               local
    nvme  feature@zstd_compress          enabled               local
    nvme  feature@draid                  enabled               local

Here's the result of pveversion -v:

Code:

    root@pve-1-1:~# pveversion -v
    proxmox-ve: 7.4-1 (running kernel: 5.15.102-1-pve)
    pve-manager: 7.4-3 (running version: 7.4-3/9002ab8a)
    pve-kernel-5.15: 7.3-3
    pve-kernel-5.15.102-1-pve: 5.15.102-1
    ceph-fuse: 15.2.17-pve1
    corosync: 3.1.7-pve1
    criu: 3.15-1+pve-1
    glusterfs-client: 9.2-1
    ifupdown2: 3.1.0-1+pmx3
    ksm-control-daemon: 1.4-1
    libjs-extjs: 7.0.0-1
    libknet1: 1.24-pve2
    libproxmox-acme-perl: 1.4.4
    libproxmox-backup-qemu0: 1.3.1-1
    libproxmox-rs-perl: 0.2.1
    libpve-access-control: 7.4-1
    libpve-apiclient-perl: 3.2-1
    libpve-common-perl: 7.3-3
    libpve-guest-common-perl: 4.2-4
    libpve-http-server-perl: 4.2-1
    libpve-rs-perl: 0.7.5
    libpve-storage-perl: 7.4-2
    libspice-server1: 0.14.3-2.1
    lvm2: 2.03.11-2.1
    lxc-pve: 5.0.2-2
    lxcfs: 5.0.3-pve1
    novnc-pve: 1.4.0-1
    proxmox-backup-client: 2.3.3-1
    proxmox-backup-file-restore: 2.3.3-1
    proxmox-kernel-helper: 7.4-1
    proxmox-mail-forward: 0.1.1-1
    proxmox-mini-journalreader: 1.3-1
    proxmox-widget-toolkit: 3.6.3
    pve-cluster: 7.3-3
    pve-container: 4.4-3
    pve-docs: 7.4-2
    pve-edk2-firmware: 3.20221111-1
    pve-firewall: 4.3-1
    pve-firmware: 3.6-4
    pve-ha-manager: 3.6.0
    pve-i18n: 2.11-1
    pve-qemu-kvm: 7.2.0-8
    pve-xtermjs: 4.16.0-1
    qemu-server: 7.4-2
    smartmontools: 7.2-pve3
    spiceterm: 3.2-2
    swtpm: 0.8.0~bpo11+3
    vncterm: 1.7-1
    zfsutils-linux: 2.1.9-pve1

Here's the config of the VM I'm using:

Code:

    root@pve-1-1:~# qm config 116
    agent: 1
    bios: ovmf
    boot:
    cores: 2
    cpu: EPYC-Rome
    efidisk0: hdd:vm-116-disk-1,efitype=4m,pre-enrolled-keys=1,size=4M
    memory: 4096
    name: infra-dev-net.vpc-home.routethis.online
    numa: 0
    onboot: 1
    scsi0: hdd:vm-116-disk-0,iothread=1,size=32G
    scsi1: nvme:vm-116-disk-0,iothread=1,size=64G
    scsihw: virtio-scsi-single
    smbios1: uuid=07a0be9d-3690-4008-85c4-5dbc59152629
    sockets: 1
    startup: order=4
    vmgenid: 52ad949c-9df9-4da5-a41e-a30e4bad45ba

Here are some benchmark results, from the VM:

To add some details: the issues show up when using the VMs, mainly with programs that rely heavily on databases. I recently moved a VM from an old Hyper-V server to this brand new Proxmox server, and database performance on ZFS with NVMe is worse than it was on 8-year-old SSDs (on Hyper-V).
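Since the slowdown is most visible with database workloads, a synthetic test of small synchronous writes may be a useful way to reproduce it. The following is only a sketch, assuming a Linux guest with fio installed; the function name `db_fio_cmd`, the `RUN_FIO` switch, and the default target path are hypothetical and not taken from the actual setup.

```shell
#!/bin/sh
# Build an fio command that mimics database-style I/O:
# 4 KiB random writes at queue depth 1, with an fsync after every write.
db_fio_cmd() {
    target=$1   # hypothetical test file on the disk under test
    echo "fio --name=db-sync-write --filename=${target} --size=1G" \
         "--rw=randwrite --bs=4k --ioengine=libaio --direct=1" \
         "--iodepth=1 --numjobs=1 --fsync=1 --runtime=60 --time_based"
}

# Print the command for review. It is executed only when RUN_FIO=1 is set
# and fio is actually available (assumes the target path has no spaces).
cmd=$(db_fio_cmd "${1:-/mnt/nvme-test/fio.dat}")
echo "$cmd"
if [ "${RUN_FIO:-0}" = "1" ] && command -v fio >/dev/null 2>&1; then
    $cmd
fi
```

Running the same command against a file on the `hdd` storage and on the `nvme` storage would give two comparable numbers for the same access pattern.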

Also, I decided to limit the memory ZFS can use for the ARC. I love the "ZFS manages the memory, everything will be fine" idea, but I need to be able to see the amount of RAM used on the server from the Proxmox UI for resource provisioning. I allocated 64GB of RAM to the ARC, for 6.4 TB of raw disk storage, which I think is plenty.
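To make the numbers concrete, here is a small sketch of the arithmetic behind that limit. It assumes the 64GB figure means 64 GiB, that the limit is expressed through the `zfs_arc_max` module parameter in `/etc/modprobe.d/zfs.conf`, and that drive capacities are decimal TB; the helper names `arc_max_bytes` and `tb_to_tib` are made up for illustration.

```shell
#!/bin/sh
# Convert an ARC limit in GiB to the byte value that zfs_arc_max expects.
arc_max_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

# Convert decimal TB (drive label units) to TiB (what zpool list shows as "T").
tb_to_tib() {
    awk -v tb="$1" 'BEGIN { printf "%.2f\n", tb * 1e12 / (1024 ^ 4) }'
}

limit=$(arc_max_bytes 64)

# Persistent form: a line like this would go in /etc/modprobe.d/zfs.conf.
echo "options zfs zfs_arc_max=${limit}"

# Raw capacity of all four 1.6 TB drives, and of two of them, in TiB.
echo "4 x 1.6 TB = $(tb_to_tib 6.4) TiB"
echo "2 x 1.6 TB = $(tb_to_tib 3.2) TiB"
```

The two-drive figure matches the 2.91T that `zpool list` reports, which would be consistent with a pool made of mirrored pairs, although the vdev layout is not shown here. Under that reading, 64 GiB of ARC covers roughly 2.91 TiB of pool capacity rather than 6.4 TB.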

Any idea what the issue could be?