Good morning/good afternoon/good evening everyone!

I have a Windows Server 2016 Standard VM on my PVE host, but it is very slow to open and load files and programs. I don't believe the processor or memory is the bottleneck: the VM has plenty of both, and monitoring shows usage peaking at about 60%. Company users connect to this Windows Server via Terminal Services (RDP) over the local network.

The VM is located at: local-lvm (pve).

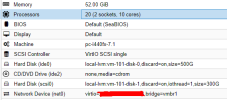

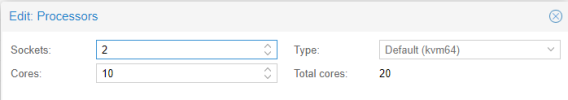

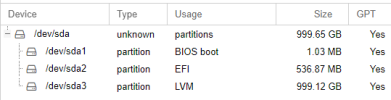

The screenshots above show the VM configuration. The PVE host's storage is two 1TB Samsung SSDs in RAID 1, so one SSD holds the data and the other mirrors it.

What could be causing this slowness, and how can I fix it?

Thanks.
