Just an off-topic reply.

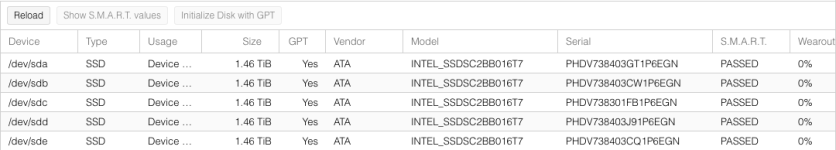

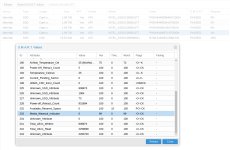

Since moving from ESXi to Proxmox, these wear indicators are *barely* moving for me. I had these NVMe drives in a mirror in an ESXi VM (via passthrough), presented back to the host. When I moved to Proxmox, I just re-imported the pool and started using them. In a year under ESXi, my drives went from 0 to roughly 20% wear with hardly any use.

Now, after 2 months of Proxmox use, they've only gone up 1%. Extrapolating that linearly, a full year of Proxmox use comes to 6% (at worst). With ESXi and the same loads, I was at 20% somehow. That means the lifetime of my NVMe drives has been extended by at least 3 times.
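
For anyone who wants to redo that arithmetic with their own numbers, here is a rough sketch. It assumes wear grows linearly over time, which is a simplification, and the function name `annual_wear` is just something made up for this example. Also keep in mind that a wear counter reported in whole percent makes a 1% reading over 2 months fairly coarse.

```python
def annual_wear(delta_percent: float, months: float) -> float:
    """Linearly extrapolate a wear increase seen over `months` to 12 months.

    Hypothetical helper for illustration; assumes wear grows at a constant rate.
    """
    return delta_percent * 12.0 / months


# Figures from the post: ~20% in one year under ESXi, 1% in two months under Proxmox.
esxi_per_year = annual_wear(20.0, 12.0)
proxmox_per_year = annual_wear(1.0, 2.0)

# How many times slower the drives wear under Proxmox, given the same load.
ratio = esxi_per_year / proxmox_per_year

print(f"ESXi: {esxi_per_year:.1f}%/year")
print(f"Proxmox: {proxmox_per_year:.1f}%/year")
print(f"Lifetime factor: {ratio:.2f}x")
```

With the numbers above this gives 20%/year versus 6%/year, so a factor of roughly 3.3.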

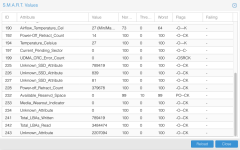

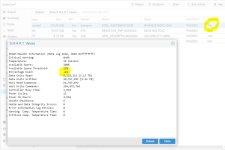

As I log all this SMART data to Grafana, I can quickly see trends. Once I moved from ESXi to Proxmox, I noticed that the temperature of my NVMe drives was elevated for the first couple of weeks, in several "batches" it seems. I suppose this was internal garbage collection in the firmware that was somehow unable to run under ESXi: ZFS stats didn't show any I/O, but temperature doesn't lie. After those days, everything seems to have normalized.

Bottom line: Hurray for Proxmox