Greetings,

This is a remote server.

SSH is working and the VMs/CTs are running. Only one CT with host storage attached is not working.

I can't stop or start any CT or VM. Since it is a remote server, a hardware restart may only be possible in about a day.

Any ideas what happened?

It is disabled!?

After hardware reset all is good (for now)

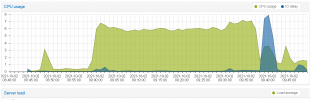

Nothing special in graphs:

The system stopped responding and checking CPU usage as the graphs ends. So GUI was not working and the stats data was not gathered.

remote server

ssh working, vms/ct working. Just one CT with connected host storage not working.

I cant stop/start any CT or VM. It is a remote server, possible hardware restart in a day +-.

Any ideas what happened?

Code:

Message from syslogd@matrix at Oct 2 01:38:50 ...

kernel:[6609484.653892] watchdog: BUG: soft lockup - CPU#6 stuck for 23s! [khugepaged:114]

Message from syslogd@matrix at Oct 2 01:39:30 ...

kernel:[6609524.654195] watchdog: BUG: soft lockup - CPU#6 stuck for 22s! [khugepaged:114]

Message from syslogd@matrix at Oct 2 01:39:30 ...

kernel:[6609524.654195] watchdog: BUG: soft lockup - CPU#6 stuck for 22s! [khugepaged:114]

Message from syslogd@matrix at Oct 2 01:39:58 ...

kernel:[6609552.654406] watchdog: BUG: soft lockup - CPU#6 stuck for 22s! [khugepaged:114]

Message from syslogd@matrix at Oct 2 01:39:58 ...

kernel:[6609552.654406] watchdog: BUG: soft lockup - CPU#6 stuck for 22s! [khugepaged:114]

It is disabled!?

Code:

root@matrix:~# grep -i HugePages_Total /proc/meminfo

HugePages_Total: 0
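Note that `HugePages_Total` only counts the explicitly reserved hugetlbfs pool, while `khugepaged` serves transparent huge pages (THP), which are switched on and off separately. A minimal sketch for checking the THP mode, assuming the standard sysfs paths are present on this kernel (the helper name `thp_active_mode` is made up for illustration):

```shell
# Print the active value from a sysfs-style line such as
# "always [madvise] never" (the active setting is the one in brackets).
thp_active_mode() {
    sed -n 's/.*\[\([a-z+_-]*\)\].*/\1/p'
}

# Report the THP mode and defrag policy if the files are readable.
for f in enabled defrag; do
    path="/sys/kernel/mm/transparent_hugepage/$f"
    if [ -r "$path" ]; then
        printf '%s: %s\n' "$f" "$(thp_active_mode < "$path")"
    fi
done
```

If `enabled` reports `always` or `madvise`, THP is in use even though `HugePages_Total` is 0.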

Code:

top - 01:42:52 up 76 days, 12:01, 2 users, load average: 22.96, 22.42, 21.31

Tasks: 420 total, 6 running, 409 sleeping, 0 stopped, 5 zombie

%Cpu(s): 0.3 us, 6.7 sy, 0.0 ni, 93.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

MiB Mem : 32026.9 total, 1521.8 free, 28937.3 used, 1567.9 buff/cache

MiB Swap: 8192.0 total, 5892.2 free, 2299.8 used. 1970.7 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

114 root 39 19 0 0 0 R 100.0 0.0 266:08.88 khugepaged

113 root 25 5 0 0 0 S 9.3 0.0 7531:01 ksmd

18606 root 20 0 9272480 4.0g 4844 S 4.3 12.7 75729:15 kvm

23619 root 20 0 16628 7828 6748 S 0.3 0.0 0:00.03 sshd

25904 root 20 0 11696 4028 3208 R 0.3 0.0 0:00.01 top

1 root 20 0 172112 9492 5436 S 0.0 0.0 30:27.89 systemd

2 root 20 0 0 0 0 S 0.0 0.0 7:43.05 kthreadd

3 root 0 -20 0 0 0 I 0.0 0.0 0:00.00 rcu_gp

4 root 0 -20 0 0 0 I 0.0 0.0 0:00.00 rcu_par_gp

6 root 0 -20 0 0 0 I 0.0 0.0 0:00.00 kworker/

Code:

Filesystem 1K-blocks Used Available Use% Mounted on

udev 16336928 0 16336928 0% /dev

tmpfs 3279556 357656 2921900 11% /run

/dev/mapper/pve-root 51085808 32698520 15762572 68% /

tmpfs 16397776 49920 16347856 1% /dev/shm

tmpfs 5120 0 5120 0% /run/lock

tmpfs 16397776 0 16397776 0% /sys/fs/cgroup

/dev/nvme0n1p2 523248 312 522936 1% /boot/efi

/dev/fuse 30720 20 30700 1% /etc/pve

data 7557464448 520702336 7036762112 7% /data

Code:

root@matrix:~# pveversion -v

proxmox-ve: 6.4-1 (running kernel: 5.4.124-1-pve)

pve-manager: 6.4-13 (running version: 6.4-13/9f411e79)

pve-kernel-5.4: 6.4-4

pve-kernel-helper: 6.4-4

pve-kernel-5.4.124-1-pve: 5.4.124-1

pve-kernel-5.4.119-1-pve: 5.4.119-1

pve-kernel-5.4.114-1-pve: 5.4.114-1

pve-kernel-5.4.106-1-pve: 5.4.106-1

pve-kernel-5.4.98-1-pve: 5.4.98-1

pve-kernel-5.4.73-1-pve: 5.4.73-1

ceph-fuse: 12.2.11+dfsg1-2.1+b1

corosync: 3.1.2-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: 0.8.35+pve1

ksm-control-daemon: 1.3-1

libjs-extjs: 6.0.1-10

libknet1: 1.20-pve1

libproxmox-acme-perl: 1.1.0

libproxmox-backup-qemu0: 1.1.0-1

libpve-access-control: 6.4-3

libpve-apiclient-perl: 3.1-3

libpve-common-perl: 6.4-3

libpve-guest-common-perl: 3.1-5

libpve-http-server-perl: 3.2-3

libpve-storage-perl: 6.4-1

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.6-2

lxcfs: 4.0.6-pve1

novnc-pve: 1.1.0-1

proxmox-backup-client: 1.1.12-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.6-1

pve-cluster: 6.4-1

pve-container: 3.3-6

pve-docs: 6.4-2

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-4

pve-firmware: 3.2-4

pve-ha-manager: 3.1-1

pve-i18n: 2.3-1

pve-qemu-kvm: 5.2.0-6

pve-xtermjs: 4.7.0-3

qemu-server: 6.4-2

smartmontools: 7.2-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 2.0.4-pve1

Code:

root@matrix:~# grep AnonHugePages /proc/meminfo

AnonHugePages: 3915776 kB
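For scale, the `AnonHugePages` value can be converted into a number of huge pages. This is only a sketch and assumes 2 MiB (2048 kB) huge pages, which is the usual THP size on x86_64 but is not confirmed for this host; `anon_thp_pages` is a made-up helper name:

```shell
# Convert an "AnonHugePages: <n> kB" line into a count of huge pages.
# Assumes 2048 kB (2 MiB) pages; adjust page_kb for other architectures.
anon_thp_pages() {
    awk -v page_kb=2048 '$1 == "AnonHugePages:" { print int($2 / page_kb) }'
}

# Read the live value when /proc/meminfo is available.
if [ -r /proc/meminfo ]; then
    anon_thp_pages < /proc/meminfo
fi
```

Under that assumption, 3915776 kB corresponds to 1912 huge pages.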

Code:

root@matrix:~# egrep 'trans|thp' /proc/vmstat

nr_anon_transparent_hugepages 1912

thp_fault_alloc 9353

thp_fault_fallback 4011

thp_collapse_alloc 13526

thp_collapse_alloc_failed 72338

thp_file_alloc 0

thp_file_mapped 0

thp_split_page 9431

thp_split_page_failed 2369239777

thp_deferred_split_page 16531

thp_split_pmd 9431

thp_split_pud 0

thp_zero_page_alloc 0

thp_zero_page_alloc_failed 0

thp_swpout 0

thp_swpout_fallback 0

After the hardware reset all is good (for now).
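The `/proc/vmstat` THP counters are easier to judge as failure ratios than as raw numbers. A rough sketch that computes them from `name value` lines (the function name `thp_summary` is made up, and the ratios are cumulative since boot, so they say nothing about when the failures happened):

```shell
# Summarize THP collapse and split failures from /proc/vmstat-style input.
thp_summary() {
    awk '
        $1 == "thp_collapse_alloc"        { ok = $2 }
        $1 == "thp_collapse_alloc_failed" { fail = $2 }
        $1 == "thp_split_page"            { split_ok = $2 }
        $1 == "thp_split_page_failed"     { split_fail = $2 }
        END {
            if (ok + fail > 0)
                printf "collapse_alloc failed: %.1f%%\n", 100 * fail / (ok + fail)
            if (split_ok + split_fail > 0)
                printf "split_page failed: %.1f%%\n", 100 * split_fail / (split_ok + split_fail)
        }'
}

# Run against the live counters when available.
if [ -r /proc/vmstat ]; then
    thp_summary < /proc/vmstat
fi
```

With the counters posted here, roughly 84% of collapse allocations failed and practically every page split attempt failed, which fits `khugepaged` spinning at 100% CPU.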

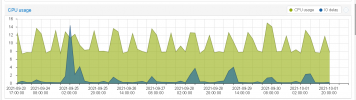

Nothing special in graphs:

The system stopped responding, and CPU usage was no longer recorded from the point where the graphs end. So the GUI was not working and the stats data was not gathered.
