Hi all,

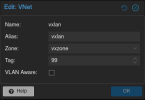

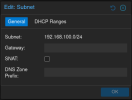

I hope you are doing well. I’ve been looking for an answer or best practices but couldn’t find a clear, straightforward solution.

I have a 4-node cluster made up of small desktop PCs, each with a single NIC. Each PC connects directly to my home router and sits on the 192.168.1.XXX network, and I have them set up as a cluster. My home router is a basic AT&T fiber router, so it doesn't offer much in terms of I/O or flexibility.

I’d like to set up an isolated network inside the cluster, with an IP range of 192.168.100.XXX, routed by a pfSense VM. I read that I can create a VXLAN zone under Datacenter and add all my nodes to its peer list. I set up my zones and VNets to match and was able to successfully ping between VMs on the .100.XXX network and my .1.XXX network.

The issue is that a VM on a different node from the pfSense VM can't access the pfSense web GUI or reach the Internet. Any ideas? Or would it be best to remove the pfSense VM altogether and just stick to SDN/VXLAN?
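For reference, a VXLAN zone and VNet like the ones described above are stored roughly like this by the Datacenter > SDN GUI. The first stanza belongs in `/etc/pve/sdn/zones.cfg` and the second in `/etc/pve/sdn/vnets.cfg`. This is a sketch only: the zone name `vxzone`, the VNet name `vnet100`, the VXLAN tag `100`, and the node addresses `192.168.1.11` to `192.168.1.14` are hypothetical placeholders, not the actual values from this setup.

```text
vxlan: vxzone
        peers 192.168.1.11,192.168.1.12,192.168.1.13,192.168.1.14

vnet: vnet100
        zone vxzone
        tag 100
```

Each node listed in `peers` is a VXLAN tunnel endpoint, so a VM attached to `vnet100` on any node shares one layer-2 segment with the pfSense VM's interface on that VNet.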

Thank you