Hello All,

I've only been working with Proxmox for a few weeks, so please treat me as a newcomer to all this.

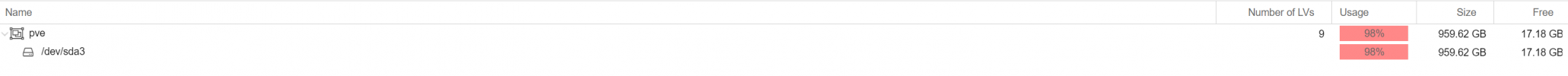

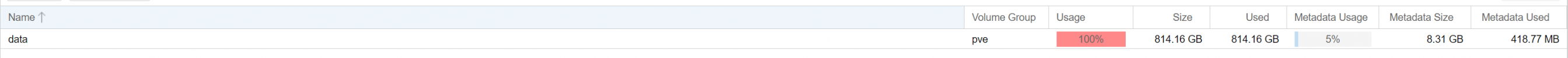



To get to the point: whenever I try to create a new LV, take a snapshot, or run a backup, I get these warnings:

```
WARNING: You have not turned on protection against thin pools running out of space.
WARNING: Set activation/thin_pool_autoextend_threshold below 100 to trigger automatic extension of thin pools before they get full.
WARNING: Sum of all thin volume sizes (<172.98 GiB) exceeds the size of thin pool pve/data and the amount of free space in volume group (<16.00 GiB).
```

But I'm pretty sure there is still enough room inside the thin pool to hold a couple more of them.
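The third warning compares *virtual* (allocated) sizes, not the data actually written, which is why it can appear even when the pool is mostly empty. Below is a minimal Python sketch of the two comparisons, using the figures from the warning and from the `lvs` output further down. The function `check_thin_pool` is hypothetical and only restates the condition in the warning text; it is not LVM code. Values that LVM prints with a `<` prefix are rounded, so the results are approximate.

```python
def check_thin_pool(virtual_sum_gib, pool_size_gib, pool_data_pct, vg_free_gib):
    """Hypothetical helper: contrast thin-pool overcommit with real usage."""
    # The warning's condition, restated: the virtual sizes of all thin volumes
    # exceed the pool size plus the free space left in the volume group.
    capacity_gib = pool_size_gib + vg_free_gib
    overcommitted = virtual_sum_gib > capacity_gib
    # Data% in lvs is the share of the pool's data space actually written.
    used_gib = pool_size_gib * pool_data_pct / 100
    free_in_pool_gib = pool_size_gib - used_gib
    return (overcommitted, round(capacity_gib, 2),
            round(used_gib, 2), round(free_in_pool_gib, 2))


# Approximate figures: 172.98 and 16.00 GiB from the warning,
# 141.43 GiB pool size and Data% 16.54 from lvs.
print(check_thin_pool(172.98, 141.43, 16.54, 16.00))
# → (True, 157.43, 23.39, 118.04)
```

With these numbers the pool is over-committed (about 173 GiB promised against about 157 GiB that could ever back it), yet only about 23 GiB of its 141 GiB is written, so the warning and the observation above are both true at the same time.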

```
root@pxmx:~# lvs
LV                       VG  Attr       LSize    Pool Origin        Data% Meta% Move Log Cpy%Sync Convert
cache1                   pve Vwi-aotz--   10.00g data               99.94
cache2                   pve Vwi-aotz--   10.00g data                0.01
data                     pve twi-aotz-- <141.43g                    16.54  1.95
root                     pve -wi-ao----   55.75g
snap_vm-100-disk-1_Snap1 pve Vri---tz-k   16.00g data vm-100-disk-1
snap_vm-100-disk-1_Snap2 pve Vri---tz-k   16.00g data vm-100-disk-1
snap_vm-101-disk-0_Snap1 pve Vri---tz-k   20.00g data vm-101-disk-0
snap_vm-101-disk-0_Snap2 pve Vri---tz-k   20.00g data vm-101-disk-0
swap                     pve -wi-ao----    7.00g
vm-100-disk-1            pve Vwi-aotz--   16.00g data                9.71
vm-100-state-Snap1       pve Vwi-a-tz--   <3.49g data               18.07
vm-100-state-Snap2       pve Vwi-a-tz--   <3.49g data               13.01
vm-101-disk-0            pve Vwi-aotz--   20.00g data               21.14
vm-114-disk-0            pve Vwi-aotz--    8.00g data               21.16
vm-124-disk-0            pve Vwi-a-tz--    6.00g data               67.80
vm-124-disk-1            pve Vwi-a-tz--    4.00g data                1.63
root@pxmx:~# vgdisplay pve
--- Volume group ---
VG Name               pve
System ID
Format                lvm2
Metadata Areas        1
Metadata Sequence No  127
VG Access             read/write
VG Status             resizable
MAX LV                0
Cur LV                16
Open LV               7
Max PV                0
Cur PV                1
Act PV                1
VG Size               <223.07 GiB
PE Size               4.00 MiB
Total PE              57105
Alloc PE / Size       53010 / 207.07 GiB
Free PE / Size        4095 / <16.00 GiB
VG UUID               ug5DjO-FyBB-O0Gm-rxLW-ibQC-pPrS-jTgo1i
root@pxmx:~# df -h
Filesystem           Size Used Avail Use% Mounted on
udev                 3.8G    0  3.8G   0% /dev
tmpfs                777M 1.1M  776M   1% /run
/dev/mapper/pve-root  55G 6.3G   46G  13% /
tmpfs                3.8G  46M  3.8G   2% /dev/shm
tmpfs                5.0M    0  5.0M   0% /run/lock
/dev/sda2            511M 328K  511M   1% /boot/efi
zstore               3.5T 128K  3.5T   1% /zstore
zstore/media         3.6T  63G  3.5T   2% /opt/media
/dev/fuse            128M  20K  128M   1% /etc/pve
tmpfs                777M    0  777M   0% /run/user/0
```

I can't find any clue as to what has gone wrong.
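Summing the `LSize` column for every volume in pool `data` from the `lvs` output above (thin snapshots included, since they are thin volumes too) shows how the virtual total builds up. This is a minimal Python sketch: the sizes are copied by hand, the `<3.49g` entries are treated as 3.49 GiB so the result is approximate, and the same snippet converts the `vgdisplay` free extents (4095 × 4.00 MiB) to GiB.

```python
# Virtual sizes (GiB) of the thin volumes in pool pve/data, copied by hand
# from the lvs output; "<3.49g" entries are approximated as 3.49.
thin_volumes = {
    "cache1": 10.00, "cache2": 10.00,
    "snap_vm-100-disk-1_Snap1": 16.00, "snap_vm-100-disk-1_Snap2": 16.00,
    "snap_vm-101-disk-0_Snap1": 20.00, "snap_vm-101-disk-0_Snap2": 20.00,
    "vm-100-disk-1": 16.00,
    "vm-100-state-Snap1": 3.49, "vm-100-state-Snap2": 3.49,
    "vm-101-disk-0": 20.00, "vm-114-disk-0": 8.00,
    "vm-124-disk-0": 6.00, "vm-124-disk-1": 4.00,
}
virtual_total = round(sum(thin_volumes.values()), 2)

# vgdisplay reports 4095 free extents with a PE size of 4.00 MiB.
vg_free_gib = 4095 * 4 / 1024

pool_size_gib = 141.43  # LSize of pve/data
fits = virtual_total <= pool_size_gib + vg_free_gib
print(virtual_total, round(vg_free_gib, 3), fits)
# → 152.98 15.996 True
```

By this count the existing volumes (about 153 GiB) still fit within the pool plus free VG space (about 157.4 GiB). The warning's <172.98 GiB total is 20 GiB higher, which would be consistent with LVM also counting the volume being created at that moment, for example a new snapshot of the 20 GiB vm-101-disk-0; that is an inference from the numbers, not something the output confirms.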

Please shed some light. Thank you.