Hi,

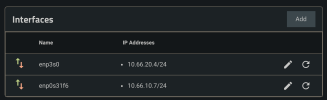

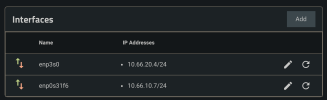

I have installed a Proxmox server (let’s call it PVE1). It has three NICs, all of which are used for virtual bridges:

- vmbr0 - 10.66.10.2/24 - the management network; that’s the one I access the admin page from (http://10.66.10.2:8006). The gateway for vmbr0 is 10.66.10.1.

- vmbr1 - 10.66.40.0/24 - I have one VM on this one at the moment (its IP address is 10.66.40.10).

- vmbr2 - 10.66.20.0/24 - I also have some VMs on it. This bridge is VLAN-aware, so I have VMs in the following ranges: 10.66.20.0, 10.66.30.0 and 10.66.40.0.

Here is the conf (from /etc/network/interfaces)

```
auto lo
iface lo inet loopback

auto enp0s31f6
iface enp0s31f6 inet manual

iface enp3s0 inet manual

iface enx00e04cf177f6 inet manual

auto vmbr0
iface vmbr0 inet static
        address 10.66.10.2/24
        gateway 10.66.10.1
        bridge-ports enp0s31f6
        bridge-stp off
        bridge-fd 0

auto vmbr1
iface vmbr1 inet static
        address 10.66.40.0/24
        bridge-ports enp3s0
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094

auto vmbr2
iface vmbr2 inet static
        address 10.66.20.10/24
        bridge-ports enx00e04cf177f6
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094
```

Now, I have another server, with TrueNAS installed on bare metal, with two interfaces:

- enp3s0, on which I installed Proxmox Backup Server. The interface has the address 10.66.20.4/24, and PBS has 10.66.20.5/24.

- enp0s31f6, on which I usually reach the admin UI. It has the address 10.66.10.7/24.
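
As a sanity check on the addresses listed so far, here is a minimal Python sketch. It only reports whether each configured address is a usable host address or the network/broadcast address of its own subnet. The labels and the `classify` helper are illustrative names, not anything Proxmox or TrueNAS provides; the addresses are copied from the lists and config above.

```python
import ipaddress

# Addresses copied from this post; the labels are only for readability.
configured = {
    "PVE1 vmbr0": "10.66.10.2/24",
    "PVE1 vmbr1": "10.66.40.0/24",
    "PVE1 vmbr2": "10.66.20.10/24",
    "TrueNAS enp3s0": "10.66.20.4/24",
    "PBS": "10.66.20.5/24",
    "TrueNAS enp0s31f6": "10.66.10.7/24",
}


def classify(cidr: str) -> str:
    """Say whether the address part of `cidr` is usable as a host address."""
    iface = ipaddress.ip_interface(cidr)
    net = iface.network
    if iface.ip == net.network_address:
        return "network address"
    if iface.ip == net.broadcast_address:
        return "broadcast address"
    return "host address"


for label, cidr in configured.items():
    print(f"{label:18} {cidr:16} {classify(cidr)}")
```

Any entry reported as a network address is an interface that has been given the subnet itself rather than a host IP inside it, which may be worth a second look.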

Now, here is my problem: individually, I can reach all resources, but some of them don’t want to talk to each other. My router is a UDM Pro SE, but there is no internal firewall anywhere as of yet and no firewall rule in Proxmox (I’m still at the setup stage). There are VLANs configured in the UDM, and physical hosts and VMs in those VLANs, either native or tagged, work fine. I can reach them, and some of them can also reach each other (I haven’t applied any firewall rules to stop them from talking to each other). The only « native VLAN » interfaces in the router are those in the 10.66.10.0/24 range, which is the management subnet for nearly all network equipment and the Proxmox hosts.

In effect, I can ssh into PVE1 and PBS from my laptop and desktop computer, but the two of them can’t see each other. I ssh’d into PVE1 and realized it can’t ping PBS (and vice versa), which was a problem when I tried to set up PBS to host backups from PVE1. That’s when I also noticed that from PVE1 I couldn’t ping VMs in 10.66.20.0/24 and 10.66.40.0/24, though those VMs are clearly accessible on the network for everyone else. From my laptop, I can ssh to or ping 10.66.40.10, which has Unraid and Plex on it, and I can access those fine, as well as the other VMs in 10.66.20.0/24, 10.66.30.0/24 and 10.66.40.0/24. I haven’t tested all configurations, but VMs

But from PVE1, I can only ping VMs that are not in the range of 10.66.40.0/24 and 10.66.20.10/24, and from PBS, I can’t ping any VMs in 10.66.20.10/24 and 10.66.10.10/24… On the other hand, PBS can’t ping the Proxmox server on 10.66.40.2 (vmbr0 on PVE1)… but can ping 10.66.10.10 (vmbr2 on PVE1).
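
To make the symptom easier to reason about, here is a simplified sketch of which bridge PVE1 would send a packet out of for a given destination. It assumes plain longest-prefix routing built only from the three bridge addresses and the default gateway in the config above, with no policy routing or metrics. The `egress` helper is an illustrative name, and 10.66.30.10 is a hypothetical VM address in the 10.66.30.0 range.

```python
import ipaddress

# Subnets PVE1 is directly attached to, taken from /etc/network/interfaces above.
connected = {
    "vmbr0": ipaddress.ip_interface("10.66.10.2/24").network,
    "vmbr1": ipaddress.ip_interface("10.66.40.0/24").network,
    "vmbr2": ipaddress.ip_interface("10.66.20.10/24").network,
}
# Default route from the vmbr0 stanza.
DEFAULT_VIA = ("vmbr0", "10.66.10.1")


def egress(dest: str) -> str:
    """Return the bridge a simplified routing lookup would pick for `dest`."""
    addr = ipaddress.ip_address(dest)
    # Collect every connected subnet containing the destination.
    matches = [(net.prefixlen, name) for name, net in connected.items() if addr in net]
    if matches:
        # Longest prefix wins; a connected subnet bypasses the router entirely.
        _, name = max(matches)
        return f"{name} (directly connected)"
    return f"{DEFAULT_VIA[0]} via gateway {DEFAULT_VIA[1]}"


for dest in ("10.66.10.7", "10.66.20.5", "10.66.30.10", "10.66.40.10"):
    print(f"{dest:12} -> {egress(dest)}")
```

Under these assumptions, destinations in 10.66.20.0/24 and 10.66.40.0/24 leave through vmbr2 and vmbr1 directly instead of going through the router, which lines up with the subnets PVE1 cannot ping. Whether that is the actual cause is something I can’t confirm from here.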

I’m at my wits’ end and not an expert… I have some knowledge, but you could call me a script kiddie at best.

Could someone explain where I messed up and how I can solve this?