Hi,

Today I decided to upgrade PVE from V6.2.4 to V7.1.

I did it according to the following steps:

1. First I upgraded to the most recent 6.x version with `apt-get update` followed by `apt-get dist-upgrade`.

2. Then I followed the steps at https://pve.proxmox.com/wiki/Upgrade_from_6.x_to_7.0

During the upgrade I got some "disk not found" errors, which worried me, but the upgrade just continued. After it finished, I restarted the host to complete the upgrade. The LXC containers (start on boot) started without any issue. Some VMs (start on boot) also worked right away.

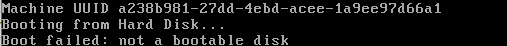

Some of the VMs showed the error `Boot failed: not a bootable disk` in the console and kept rebooting. After some Googling I found a post saying that it sometimes helps to reboot the host, so I did that, and afterwards all the VMs showed the same error. I Googled for hours and found a lot of similar issues. The only thing that worked for most of the VMs was restoring the backup. Unfortunately this does not work for the most important machine, the mail server. It contains 170 GB of mail and the only backups I have are Proxmox backups (images, 7 of them). None of them work, as they all show the same issue.

The questions:

- How can I make the VM boot again? If it's not fixable, is there a way to access the disk so I can get the data off it?

- How did this occur? Is it my fault? Is it a bug? Is it a known issue?

- I don't dare to reboot Proxmox anymore, as I'm scared that other VMs will also break permanently. How can I be sure that this will not happen again? Or at least make sure the backups work?

Some important facts:

- I'm 100% sure all the VMs worked fine before the Proxmox upgrade

- I created a backup of each machine to a network share (backup server) before I executed any update-related command

- The backups were made via the Proxmox web interface

- All the VMs run Ubuntu 20.04 LTS, incl. the mail server

- I tried to set the BIOS to UEFI (without success)

- I'm not a Proxmox pro, so if extra data is required, please mention how I can get it to avoid unnecessary posts

The error:

`pveversion -v` output:

```
root@hv1:/home/axxmin# pveversion -v
proxmox-ve: 7.1-1 (running kernel: 5.13.19-2-pve)
pve-manager: 7.1-8 (running version: 7.1-8/5b267f33)
pve-kernel-helper: 7.1-6
pve-kernel-5.13: 7.1-5
pve-kernel-5.4: 6.4-11
pve-kernel-5.3: 6.1-6
pve-kernel-5.13.19-2-pve: 5.13.19-4
pve-kernel-5.4.157-1-pve: 5.4.157-1
pve-kernel-5.4.41-1-pve: 5.4.41-1
pve-kernel-5.3.18-3-pve: 5.3.18-3
pve-kernel-5.3.18-2-pve: 5.3.18-2
ceph-fuse: 14.2.21-1
corosync: 3.1.5-pve2
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown: residual config
ifupdown2: 3.1.0-1+pmx3
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.22-pve2
libproxmox-acme-perl: 1.4.0
libproxmox-backup-qemu0: 1.2.0-1
libpve-access-control: 7.1-5
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.0-14
libpve-guest-common-perl: 4.0-3
libpve-http-server-perl: 4.0-4
libpve-storage-perl: 7.0-15
libqb0: 1.0.5-1
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 4.0.11-1
lxcfs: 4.0.11-pve1
novnc-pve: 1.2.0-3
proxmox-backup-client: 2.1.2-1
proxmox-backup-file-restore: 2.1.2-1
proxmox-mini-journalreader: 1.3-1
proxmox-widget-toolkit: 3.4-4
pve-cluster: 7.1-2
pve-container: 4.1-3
pve-docs: 7.1-2
pve-edk2-firmware: 3.20210831-2
pve-firewall: 4.2-5
pve-firmware: 3.3-3
pve-ha-manager: 3.3-1
pve-i18n: 2.6-2
pve-qemu-kvm: 6.1.0-3
pve-xtermjs: 4.12.0-1
qemu-server: 7.1-4
smartmontools: 7.2-pve2
spiceterm: 3.2-2
swtpm: 0.7.0~rc1+2
vncterm: 1.7-1
zfsutils-linux: 2.1.1-pve3
```

Backup configuration from the affected backup:

```
balloon: 6144
boot: cdn
bootdisk: sata0
cores: 2
ide2: none,media=cdrom
memory: 12288
name: axx-mcow-srv01
net0: virtio=4E:86:95:6A:FC:46,bridge=vmbr20,firewall=1
numa: 0
onboot: 1
ostype: l26
sata0: vm_instances:vm-140-disk-0,size=200G
scsihw: virtio-scsi-pci
smbios1: uuid=a238b981-27dd-4ebd-acee-1a9ee97d66a1
sockets: 1
vmgenid: 971eb84d-7502-4a68-97af-66c595c011b9
#qmdump#map:sata0:drive-sata0:vm_instances:raw:
```

The VM config:

```
root@hv1:~# qm config 140
balloon: 6144
boot: cdn
bootdisk: sata0
cores: 2
ide2: none,media=cdrom
memory: 12288
name: axx-mcow-srv01
net0: virtio=4E:86:95:6A:FC:46,bridge=vmbr20,firewall=1
numa: 0
onboot: 1
ostype: l26
sata0: vm_instances:vm-140-disk-0,size=200G
scsihw: virtio-scsi-pci
smbios1: uuid=a238b981-27dd-4ebd-acee-1a9ee97d66a1
sockets: 1
vmgenid: 66102f99-158b-451b-a8e2-187ebed7b183
```

============================================================================================

UPDATE1

I found this https://forum.proxmox.com/threads/after-backup-boot-failed-not-a-bootable-disk.67954/ which makes sense as far as I understand it. As I don't have a separate backup of the partition table, I tried a "boot-repair-disk" (https://help.ubuntu.com/community/Boot-Repair). This did not fix my VM but gave me some extra information that may be useful.

============================================================================================

UPDATE2

After booting GParted Live, I can say that the partition table is gone. I tried to rebuild it with testdisk (https://interworks.com/blog/smatlock/2015/02/13/restore-damaged-or-corrupted-linux-partition-table/), but this did not work.
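To double-check the "partition table is gone" finding outside of GParted, I think a quick signature check like the one below could be run against a copy of the raw disk. This is only a sketch under my own assumptions: the helper name `probe_partition_table` is made up, and it assumes 512-byte sectors. It looks for the `55 aa` boot signature at the end of sector 0 (MBR or protective MBR) and for the `EFI PART` signature at the start of sector 1 (GPT header).

```shell
# Report which partition-table signatures a raw disk image or block device carries.
# Hypothetical helper; assumes 512-byte logical sectors.
probe_partition_table() (
    img="$1"
    # Bytes 510-511 of sector 0 hold the MBR (or protective MBR) signature 55 aa.
    mbr_sig=$(dd if="$img" bs=1 skip=510 count=2 2>/dev/null | od -An -tx1 | tr -d ' \n')
    # The first 8 bytes of sector 1 hold the GPT header signature "EFI PART".
    gpt_sig=$(dd if="$img" bs=1 skip=512 count=8 2>/dev/null | tr -d '\000')
    mbr=no
    gpt=no
    [ "$mbr_sig" = "55aa" ] && mbr=yes
    [ "$gpt_sig" = "EFI PART" ] && gpt=yes
    echo "mbr=$mbr gpt=$gpt"
)
```

It would be called with the path of the disk copy as its only argument (for example a hypothetical `/mnt/rescue/vm-140-disk-0.raw`) and prints one line such as `mbr=no gpt=no` when neither signature is present.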

Is it possible to create a VM with exactly the same configuration (incl. disk size), install Ubuntu on it (with the same disk settings, as we always use the defaults) and copy that partition table to the "broken" server?
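What I have in mind is roughly the following. It is only a sketch and I have not tried it on the real disk: the helper name `copy_partition_table` and any paths are made up, it relies on `sfdisk --dump` (from util-linux) and on feeding that dump back into `sfdisk`, and it can only help if the fresh install really ends up with the same partition offsets as the broken VM. I would run it against a copy of the broken disk, not the original.

```shell
# Copy only the partition table from a reference disk to a target disk.
# Hypothetical helper; both arguments may be block devices or raw image files.
copy_partition_table() (
    src="$1"
    dst="$2"
    # Dump the reference layout first, so a failed dump never reaches the target.
    layout=$(sfdisk --dump "$src") || exit 1
    # Feed the dumped layout to sfdisk so it writes that table onto the target.
    printf '%s\n' "$layout" | sfdisk --quiet "$dst"
)
```

The first argument would be the disk of the freshly installed reference VM and the second the copy of the broken disk. Afterwards `sfdisk --dump` on the target should list the same partitions as on the reference.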
