Greetings. I have Proxmox 8.3.2 installed on a mirror zpool with mostly default settings:

code_language.shell:

# zpool list -v

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

rpool 7.27T 69.5G 7.20T - - 0% 0% 1.00x ONLINE -

mirror-0 7.27T 69.5G 7.20T - - 0% 0.93% - ONLINE

ata-HGST_HUH728080ALE604_...-part3 7.28T - - - - - - - ONLINE

ata-HGST_HUH728080ALE604_...-part3 7.28T - - - - - - - ONLINE

# zfs list

NAME USED AVAIL REFER MOUNTPOINT

rpool 132G 7.01T 104K /rpool

rpool/ROOT 32.3G 7.01T 96K /rpool/ROOT

rpool/ROOT/pve-1 32.3G 17.7G 32.3G /

rpool/data 99.2G 6.90T 96K /rpool/data

rpool/data/vm-112-disk-0 7.11G 6.90T 7.02G -

rpool/data/vm-113-disk-0 15.1G 6.90T 15.0G -

rpool/data/vm-113-disk-1 15.1G 6.90T 15.0G -

rpool/data/vm-113-disk-2 15.1G 6.92T 56K -

rpool/var-lib-vz 104K 50.0G 104K /var/lib/vz

I also have two guest VMs with block level devices on zvols.

code_language.shell:

# qm list

VMID NAME STATUS MEM(MB) BOOTDISK(GB) PID

112 app-disk-test-1 running 512 7.00 1824

113 app-disk-test-zvol32k-2 stopped 2048 15.00 0

One guest is running; the other is being restored by dd, which reads an image file and writes it to the VM's zvol. That is, we read from the host's root FS and write to the VM's zvol, so everything happens within the same mirror. This single dd is enough to make the block devices of the already-running guest completely unresponsive.

The problem seems to manifest once the ARC is exhausted; before that, the running guest can still write to its disk.

Do you have any idea what I can do to improve parallel writes to the pool? Yes, I know that HDDs are slow, that a cache SSD can improve the situation, that a mirror doesn't give higher IOPS than a single HDD, and that it's not the best idea to keep the root FS and the guests on the same mirror. I can also imagine that something went wrong and I have write amplification (though I doubt it, since I used mostly default settings, which I show below). But what kind of write amplification can make VM disks unresponsive for 5 minutes straight?

I don't want to mask the problem with thin provisioning, compression, etc., so I turned them off. I also don't want to set the ARC size too high, because in real life you can't dedicate 3/4 of the host's RAM to disk writes. My current ARC size is 6 GiB, but I got more or less the same results with 12 GiB.
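For reference, the ARC cap is expressed in bytes. The snippet below is a minimal sketch of how the 6 GiB and 12 GiB values translate, assuming the limit is set through the `zfs_arc_max` module parameter (the post does not say how it was set). The helper names `gib_to_bytes` and `arc_option_line` are hypothetical and only illustrate the arithmetic.

```shell
#!/bin/sh
# Convert a size in GiB to bytes, the unit zfs_arc_max expects.
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

# Print the module option line for a given ARC cap in GiB
# (hypothetical helper, for illustration only).
arc_option_line() {
    echo "options zfs zfs_arc_max=$(gib_to_bytes "$1")"
}

arc_option_line 6     # candidate line for /etc/modprobe.d/zfs.conf
arc_option_line 12

# To apply at runtime instead (as root, with the zfs module loaded):
#   gib_to_bytes 6 > /sys/module/zfs/parameters/zfs_arc_max
```

The script itself only prints the option lines; it does not touch the running system.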

Full history of my zpool:

code_language.shell:

# zpool history

History for 'rpool':

2025-01-16.14:29:53 zpool create -f -o cachefile=none -o ashift=12 rpool mirror /dev/disk/by-id/ata-HGST_HUH728080ALE604_...-part3 /dev/disk/by-id/ata-HGST_HUH728080ALE604_...-part3

2025-01-16.14:29:54 zfs create rpool/ROOT

2025-01-16.14:29:54 zfs create rpool/ROOT/pve-1

2025-01-16.14:29:54 zfs create rpool/data

2025-01-16.14:29:54 zfs create -o mountpoint=/rpool/ROOT/pve-1/var/lib/vz rpool/var-lib-vz

2025-01-16.14:29:54 zfs set atime=on relatime=on rpool

2025-01-16.14:29:54 zfs set compression=on rpool

2025-01-16.14:29:54 zfs set acltype=posix rpool/ROOT/pve-1

2025-01-16.14:29:54 zfs set sync=disabled rpool

2025-01-16.15:08:40 zfs set sync=standard rpool

2025-01-16.15:08:41 zfs set mountpoint=/ rpool/ROOT/pve-1

2025-01-16.15:08:41 zfs set mountpoint=/var/lib/vz rpool/var-lib-vz

2025-01-16.15:08:41 zpool set bootfs=rpool/ROOT/pve-1 rpool

...

2025-01-21.13:09:47 zfs set quota=50g rpool/ROOT/pve-1

2025-01-21.13:09:55 zfs set quota=7168g rpool/data

2025-01-21.13:10:02 zfs set quota=50g rpool/var-lib-vz

...

2025-01-21.14:30:15 zfs set compression=off rpool/data

HDDs have caching turned on:

code_language.shell:

# hdparm -W /dev/sda

/dev/sda:

write-caching = 1 (on)

# hdparm -W /dev/sdb

/dev/sdb:

write-caching = 1 (on)

The problem persists on both of my ProLiant DL360 Gen10 servers (identical hardware: Intel Xeon Silver 4110 CPU / 64 GiB RAM / 2x 8 TB HDDs), so it's not specific to one server. The HDDs are connected to an HPE Smart Array controller but left unconfigured:

To be precise about what is happening, I ran the following test:

- Guest VM writes 1MiB of random data in a loop:

code_language.shell:

while(:); do bash -c 'date "+[%T]"; dd if=/dev/urandom of=test.bin bs=1M count=1; '\''rm'\'' -v test.bin'; sleep 1; done

See vm-writes-1dd.txt in attachment for result.

- Host is writing 15GiB file to zvol:

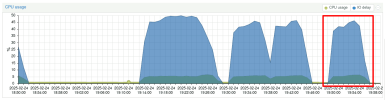

See arcstat-1dd.txt and zpool-iostat-1-1dd.txt in attachment for result.code_language.shell:# dd if=test.bin of=/dev/zvol/rpool/data/vm-113-disk-0 bs=1M status=progress 16091447296 bytes (16 GB, 15 GiB) copied, 204 s, 78,9 MB/s15360+0 records in 15360+0 records out 16106127360 bytes (16 GB, 15 GiB) copied, 395,183 s, 40,8 MB/s - CPU load during test:

- Zpool settings: see zfs-get-all.txt, zpool-get-all.txt in attachment.
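The two dd status lines from the host-side test are worth putting side by side. The last progress update shows 16091447296 bytes after 204 s, and the final summary shows 16106127360 bytes after 395,183 s. A small sketch of that arithmetic follows; `rate_mb_s` is a hypothetical helper name, and reading the gap as dd waiting for buffered writes to reach the disks is an assumption, not something the output proves.

```shell
#!/bin/sh
# Average rate in MB/s (10^6 bytes per second), the unit dd reports.
rate_mb_s() {
    LC_ALL=C awk -v b="$1" -v s="$2" 'BEGIN { printf "%.1f\n", b / s / 1e6 }'
}

rate_mb_s 16091447296 204        # last progress line from the test
rate_mb_s 16106127360 395.183    # final summary line from the test

# Bytes and seconds between the two reports.
tail_bytes=$(( 16106127360 - 16091447296 ))
tail_secs=$(LC_ALL=C awk 'BEGIN { printf "%.0f\n", 395.183 - 204 }')
echo "last $(( tail_bytes / 1024 / 1024 )) MiB took about ${tail_secs} s"
```

So the halved average rate comes almost entirely from the tail: the final 14 MiB account for roughly 191 s of the run, which is in the same range as the stall seen in the guest.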
