Thanks a lot for the suggestion~ I've built it, was just about to reboot the servers :] Good day!

Yeah! I strongly recommend that you give 1.8.0-dirty a try. It works perfectly.

See the previous messages in this thread.

Good luck.

Let us know how you get on and whether it improves everything! I will start my virtiofsd adventures in the next couple of weeks, so thanks to everyone on this thread for blazing the way!

Got this running on 4 servers now, mounting the same set of folders on all of them. I guess I also found 'the solution' (workaround) for the bad superblock error and updated my guide to reflect my learnings.

My systems

Host: Proxmox 8.0

Linux pve01 6.2.16-14-pve #1 SMP PREEMPT_DYNAMIC PMX 6.2.16-14 (2023-09-19T08:17Z) x86_64 GNU/Linux

VMs: Debian 12

Linux dswarm03 6.1.0-12-cloud-amd64 #1 SMP PREEMPT_DYNAMIC Debian 6.1.52-1 (2023-09-07) x86_64 GNU/Linux

PS: I reverted to virtiofsd 1.7.2 to test whether that version works too.

It also seems fine, so now I'm confident the next virtiofsd update isn't going to break things, given that reverting from 1.8.0 to 1.7.2 works.

Bash:

-rwxr-xr-x 1 root root 5.4M Oct 3 12:06 virtiofsd

-rwxr-xr-x 1 root root 2.6M Jul 20 09:21 'virtiofsd 1.7.0'

-rwxr-xr-x 1 root root 5.4M Oct 3 12:10 'virtiofsd 1.7.2'

-rwxr-xr-x 1 root root 5.6M Oct 3 12:07 'virtiofsd 1.8.0'

I have been testing virtiofsd in one of my VMs for the last few days and noticed that some services that process and copy large files sometimes hang. Could it be that the Perl hookscript suggested here does not set "queue-size=1024" in the VM's args?

I have now added the argument to my shares and will test it over the next few days to see if that was the problem.

So I can now report back that after over a week of intensive use with a lot of data transfer via virtiofsd, the VM no longer hangs, as was previously the case almost daily without the "queue-size" setting.
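For anyone wondering where that property goes, here is a minimal sketch of the device argument with `queue-size=1024` set. The helper name `vfs_device_args` and its parameters are made up for illustration; the argument layout and the sample values (char0, /run/vfs600.sock, vfs600) follow an `args:` line posted later in this thread.

```shell
#!/bin/sh
# Hypothetical helper: print the QEMU arguments for one virtiofs share,
# with queue-size set on the vhost-user-fs-pci device.
# $1 = chardev index, $2 = socket path, $3 = tag
vfs_device_args() {
    printf '%s %s\n' \
        "-chardev socket,id=char$1,path=$2" \
        "-device vhost-user-fs-pci,queue-size=1024,chardev=char$1,tag=$3"
}

# Example values only; adjust socket path and tag to your own setup.
vfs_device_args 0 /run/vfs600.sock vfs600
```

In the hookscript, the equivalent change would be adding `queue-size=1024` to the `-device vhost-user-fs-pci,...` string it generates.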

Gilberto Ferreira

Renowned Member

Sounds good.

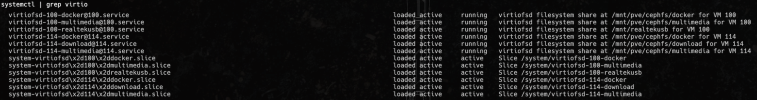

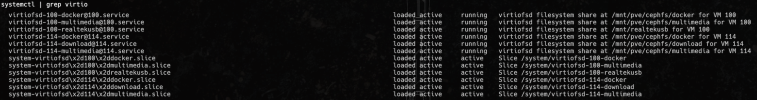

I ran into a problem: I couldn't mount the same folder on another VM on the same host.

It turns out that the tag format used (mnt_pve_cephfs_docker) was not unique, and neither were the slice(s) (system-virtiofsd\x2ddocker.slice).

Both need to be unique when the same folder is attached to multiple VMs on the same host.

I shortened the tag and included the vmid to make it unique (100-docker). This works as long as you don't have folder names ending with the same string that you want mounted on the same VM!

The slice(s) (system-virtiofsd\x2d100\x2ddocker.slice) now have the vmid in them to make them unique.

Anyone who needs this can replace this section in the hookscript below:

Perl:

    # TODO: Have removal logic. Probably need to glob the systemd directory for matching files.
    for (@{$associations{$vmid}}) {
        my $share_id = $_ =~ m!/([^/]+)$! ? $1 : ''; # only last folder from path
        my $unit_name = 'virtiofsd-' . $vmid . '-' . $share_id;
        my $unit_file = '/etc/systemd/system/' . $unit_name . '@.service';
        print "attempting to install unit $unit_name...\n";
        if (not -d $virtiofsd_dir) {
            print "ERROR: $virtiofsd_dir does not exist!\n";
        }
        else { print "DIRECTORY DOES EXIST!\n"; }
        if (not -e $unit_file) {
            $tt->process(\$unit_tpl, { share => $_, share_id => $share_id }, $unit_file)
                || die $tt->error(), "\n";
            system("/usr/bin/systemctl daemon-reload");
            system("/usr/bin/systemctl enable $unit_name\@$vmid.service");
        }
        system("/usr/bin/systemctl start $unit_name\@$vmid.service");
        $vfs_args .= " -chardev socket,id=char$char_id,path=/run/virtiofsd/$vmid-$share_id.sock";
        $vfs_args .= " -device vhost-user-fs-pci,chardev=char$char_id,tag=$vmid-$share_id";
        $char_id += 1;
    }

This is the first time I'm fooling around with Perl, so my modifications are far from perfect, but they work. (Hopefully someone else can make them better.)

Also useful to know: the pre-stop / post-stop phases don't do much, so unfortunately stuff has to be cleaned up manually until a pre-stop / post-stop handler is written!

Sometimes even a host reboot is needed to get it to forget about old virtiofs stuff!
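To make the naming rule easier to see, here is a small shell sketch that mirrors what the Perl regex `m!/([^/]+)$!` does for absolute paths: keep only the last folder and prefix it with the vmid. The helper name `vfs_tag` and the example paths are assumptions for illustration (the first path is only guessed from the old `mnt_pve_cephfs_docker` tag).

```shell
#!/bin/sh
# Hypothetical helper: build the virtiofs tag the same way the modified
# hookscript does, i.e. "<vmid>-<last folder of the path>".
# $1 = vmid, $2 = absolute path of the shared directory on the host
vfs_tag() {
    printf '%s-%s\n' "$1" "${2##*/}"
}

vfs_tag 100 /mnt/pve/cephfs/docker   # VM 100
vfs_tag 101 /mnt/pve/cephfs/docker   # same folder on VM 101: different tag
vfs_tag 100 /mnt/other/docker        # different folder, same VM: collides with the first tag
```

The third call shows the caveat mentioned above: two folders with the same last component on the same VM end up with the same tag. Inside the guest, the tag is what gets mounted, for example `mount -t virtiofs 100-docker /mnt/docker` (the mount point is just an example).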

First of all - thanks for the great tutorial @BobC. I have successfully mounted a virtiofs share into one of my VMs.

However, I do experience quite poor performance. I have also already posted over in the proxmox-sub (where I mainly compared different iodepths; I have since retested with different block sizes).

I used fio (direct=1, size 10G) to compare "native" write performance on the host with the performance inside the VM.

On the host, the most I was able to get was ~1 GB/s with 8 KiB blocks (100-800 MB/s with other block sizes). When I ran the same tests inside the VM, the most I got was ~400 MB/s with 32 KiB blocks (other block sizes were 100-200 MB/s).

I'm wondering if those results are to be expected or if there is something wrong here. Perhaps you can share some experience and/or give advice on how to further debug/improve the situation.

PS: I have also repeated the tests on my desktop PC (Arch, virtiofsd v1.8) with virt-manager. There, I did not see any noticeable drop in performance. Quite the opposite: the VM even outperformed my host starting at block sizes of ~8K. Note that I had to enable "shared memory" in virt-manager for it to support virtiofs.
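For anyone who wants to repeat this kind of comparison, a minimal sketch of a block-size sweep follows. It only prints the fio command lines so the same list can be run on the host and in the VM. The helper name `fio_sweep`, the job name, the directory and the exact list of block sizes are assumptions; only direct=1 and size=10G come from the post above.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) one sequential-write fio job per block size.
# $1 = directory to test
fio_sweep() {
    for bs in 4k 8k 32k 128k 1m; do
        echo "fio --name=write_test --directory=$1 --rw=write --direct=1 --size=10G --bs=$bs --group_reporting"
    done
}

# Example directory only; use the virtiofs mount in the VM and the shared folder on the host.
fio_sweep /mnt/shared
```

Running the printed commands with identical options on both sides keeps the host and guest numbers comparable.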

Sorry @GamerBene19, my use case was never sequential; it was random, and it was mainly for home directories. NFS performance between VM and host was substantially worse (for me), even where the VM was running on the same node as the NFS export. And that was even with zfs logbias set to random for the NFS export.

Hello,

I am trying to use virtiofs for the first time, together with the proposed hook script. I am trying to export 3 folders from the host to a VM. I see 6 virtiofsd processes running and everything looks OK, but the VM cannot find the tags and therefore cannot mount anything. dmesg shows that the tags are unknown.

They look like "100-somefolder", which is clearly what virtiofsd seems to have configured, too. The args line shows the same tags.

virtiofsd is 1.7.2 (the standard version). Is there a way to list all offered tags inside the VM?

After some reboots and fiddling, I managed to mount the virtiofs filesystems and can use them on this VM. But now I have tried to export them via NFS, and that seems to cause trouble again. The NFS clients seem to see the basic fs tree (one can ls and cd into folders), but as soon as I try to open an existing file I get a stale file handle error.

Before this, I got errors from the NFS server saying the exported filesystems need an fsid set. I gave them some small integers (1-6); maybe this is causing the problem on the client?

I have been struggling with this for a month.

I have followed the instructions here but cannot get a zfs directory on the host to mount inside the vm.

Code:

Proxmox 8 - 6.5.11-8-pve

args that allow the vm to start

Code:

args: -chardev socket,id=char0,path=/run/vfs600.sock -device vhost-user-fs-pci,queue-size=1024,chardev=char0,tag=vfs600 -object memory-backend-file,id=mem,size=4G,mem-path=/dev/shm,share=on

Manually starting virtiofsd

Code:

/usr/libexec/virtiofsd --log-level debug --socket-path=/run/vfs600.sock --shared-dir /zfs --announce-submounts --inode-file-handles=mandatory

Code:

virtiofsd 1.7.2

After running virtiofsd manually and starting the vm I get this on the host after the vm completes boot

Code:

[2024-02-10T04:04:58Z DEBUG virtiofsd::passthrough::mount_fd] Creating MountFd: mount_id=310, mount_fd=10

[2024-02-10T04:04:58Z DEBUG virtiofsd::passthrough::mount_fd] Dropping MountFd: mount_id=310, mount_fd=10

[2024-02-10T04:04:58Z INFO virtiofsd] Waiting for vhost-user socket connection...

[2024-02-10T04:05:07Z INFO virtiofsd] Client connected, servicing requests

[2024-02-10T04:05:25Z ERROR virtiofsd] Waiting for daemon failed: HandleRequest(InvalidParam)

Anybody have any ideas?

I can tell you that using the script made by Drallas from earlier in this thread got me a working setup, at least for the virtiofs part. There is a "my guide" link above; work through that. It installs a hookscript, and you probably have to reboot the VM twice, but then it should work.

I got it figured out. I didn't use Perl scripts from other places because I intended to track down what my specific issue was. I essentially bashed a debug process on my system and found that the args line needs to be the first line in the conf file. My system uses NUMA, so using the file backend instead of memfd was my next issue. Right now I am working on getting it to connect reliably, because sometimes the virtiofsd service process doesn't get a connection from the VM on start. My own bash hookscripts only need the vmid to do all pre and post work, so I am pressing forward now.

EDIT: I got this thing licked now. If your VM is throwing superblock errors on first start when trying to mount a directory, there's a chance the VM didn't connect to the virtiofsd process. When debugging, I noticed this was when the issue arose the most. Also, systemd-run (transient) and .service files with virtiofsd (only virtiofsd; I can use transient and .service files for other things with zero issues) seemed to be a bit flaky when it comes to starting/stopping VMs, especially "hard stops". I was able to "bash" the crap out of the command in order to get it to run without needing a transient or .service file. When the VM stops, there really is no need to clean anything up, since virtiofsd (when the VM is connected correctly) will automatically shut itself down when it detects VM shutdown. What I do is just make sure there aren't any existing args lines during the pre-start hook and add in the correct args line for that VM. I went so far as to write a hookscript entirely in Perl, and still ended up being able to get it done reliably by just calling the bash script from the Perl hookscript.
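The poster did not share the script, so the following is only a rough sketch of the "remove any existing args line, then add the correct one as the first line" step described above. The function name `set_args_first` is made up, and the demo runs on a throwaway file rather than a real VM config. A real config can also contain snapshot sections, which this sketch does not handle.

```shell
#!/bin/sh
# Hypothetical pre-start helper: drop every existing "args:" line from a
# config file and write the new one as the very first line.
# $1 = path to the config file, $2 = the QEMU arguments to set
set_args_first() {
    conf=$1
    tmp=$(mktemp) || return 1
    {
        printf 'args: %s\n' "$2"
        grep -v '^args:' "$conf"
    } > "$tmp"
    cat "$tmp" > "$conf"   # rewrite in place so the file keeps its permissions
    rm -f "$tmp"
}

# Demo on a temporary file with a stale args line in the middle.
demo=$(mktemp)
printf 'cores: 4\nargs: stale\nmemory: 2048\n' > "$demo"
set_args_first "$demo" "-chardev socket,id=char0,path=/run/vfs600.sock"
cat "$demo"
rm -f "$demo"
```

Because the stale line is removed before the new one is written, running the helper repeatedly leaves exactly one args line at the top.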

Hi,

I am trying to get this running on Win11.

But when I try to start the "VirtIO-FS Service" in Win11, I get the following error:

The service "VirtIO-FS service" on "Local Computer" could not be started. Error 1053: The service did not respond to the start or control request in a timely fashion.

I am using the script from Drallas from: https://gist.github.com/Drallas/7e4a6f6f36610eeb0bbb5d011c8ca0be

My config looks like:

Code:

root@pve:/var/lib/vz/snippets# cat virtiofs_hook.conf

100: /mnt/pve/pictures

101: /mnt/pve/pictures

The config of the VM looks good to me:

Code:

root@pve:/var/lib/vz/snippets# qm config 100

args: -object memory-backend-memfd,id=mem,size=16000M,share=on -numa node,memdev=mem -chardev socket,id=char0,path=/run/virtiofsd/100-bud.sock -device vhost-user-fs-pci,chardev=char0,tag=100-bud

bios: ovmf

boot: order=scsi0

cores: 8

cpu: x86-64-v2-AES

efidisk0: nob:vm-100-disk-0,efitype=4m,pre-enrolled-keys=1,size=1M

hookscript: local:snippets/virtiofs_hook.pl

machine: pc-q35-8.1

memory: 16000

meta: creation-qemu=8.1.5,ctime=1711959689

name: steuern

net0: virtio=BC:24:11:4D:F1:8D,bridge=vmbr0,firewall=1

numa: 0

ostype: win11

scsi0: nob:vm-100-disk-1,iothread=1,size=80G

scsihw: virtio-scsi-single

smbios1: uuid=037688a8-c1a2-495c-9df0-3d34c6cecc94

sockets: 1

tpmstate0: nob:vm-100-disk-2,size=4M,version=v2.0

unused0: nob:vm-100-disk-3

vmgenid: b9a0c41d-f083-4d75-8eea-ac7c7c888a74

Does anyone have an idea how to solve the problem?

The only thing I had missed was installing WinFsp. (I guess this is the FUSE client on the Windows side. Not sure why I don't need it on the Linux VM.)

https://winfsp.dev/

After installation it is working perfectly.

I am trying to get virtiofsd working in a Debian guest, where I am trying to create a Samba share.

I am running into problems with ACL support. Samba is unable to show the owner of a share.

I read in the virtiofsd documentation that ACL support has to be explicitly enabled. I tried adding the "acl" option to the fstab, but that throws an error.

Does that need to be done when running the virtiofsd service or in the VM config file, and how can we enable it in the guest?

I have managed to follow the guide written by Drallas, and the folder mounts successfully as far as I can tell: I can read/write to the folder, etc.

When running a benchmark, I get considerably slower speeds in my VM than on the host: 16.6 MiB/sec in the VM compared to 489 MiB/sec on the host.

I'm on Proxmox 8.3.0

Brand new VM on Ubuntu 24.10.1

virtiofsd 1.10.1

Host

Code:

root@pve1:~# fio --name=write_test --directory=/mnt/bindmounts/shared --size=1G --time_based --runtime=60 --rw=write --bs=4k --numjobs=1 --iodepth=1 --group_reporting

write_test: (g=0): rw=write, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

fio-3.33

Starting 1 process

Jobs: 1 (f=1): [W(1)][100.0%][w=693MiB/s][w=177k IOPS][eta 00m:00s]

write_test: (groupid=0, jobs=1): err= 0: pid=188545: Thu Nov 21 16:15:52 2024

write: IOPS=125k, BW=489MiB/s (513MB/s)(28.7GiB/60001msec); 0 zone resets

clat (usec): min=4, max=74942, avg= 7.57, stdev=83.91

lat (usec): min=4, max=74942, avg= 7.63, stdev=83.95

clat percentiles (usec):

| 1.00th=[ 5], 5.00th=[ 5], 10.00th=[ 5], 20.00th=[ 5],

| 30.00th=[ 5], 40.00th=[ 5], 50.00th=[ 5], 60.00th=[ 5],

| 70.00th=[ 5], 80.00th=[ 6], 90.00th=[ 11], 95.00th=[ 21],

| 99.00th=[ 31], 99.50th=[ 58], 99.90th=[ 182], 99.95th=[ 359],

| 99.99th=[ 1139]

bw ( KiB/s): min=111000, max=748200, per=99.77%, avg=499877.48, stdev=179396.54, samples=119

iops : min=27750, max=187050, avg=124969.31, stdev=44849.12, samples=119

lat (usec) : 10=89.93%, 20=4.46%, 50=5.06%, 100=0.24%, 250=0.24%

lat (usec) : 500=0.04%, 750=0.01%, 1000=0.01%

lat (msec) : 2=0.01%, 4=0.01%, 10=0.01%, 20=0.01%, 50=0.01%

lat (msec) : 100=0.01%

cpu : usr=12.41%, sys=75.94%, ctx=55352, majf=4, minf=12

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,7515732,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

WRITE: bw=489MiB/s (513MB/s), 489MiB/s-489MiB/s (513MB/s-513MB/s), io=28.7GiB (30.8GB), run=60001-60001msec

Guest

Code:

root@ubuntu01:/home/user# fio --name=write_test --directory=/mnt/bindmounts/shared --size=1G --time_based --runtime=60 --rw=write --bs=4k --numjobs=1 --iodepth=1 --group_reporting

write_test: (g=0): rw=write, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

fio-3.36

Starting 1 process

Jobs: 1 (f=1): [W(1)][100.0%][w=8472KiB/s][w=2118 IOPS][eta 00m:00s]

write_test: (groupid=0, jobs=1): err= 0: pid=1277: Thu Nov 21 16:18:39 2024

write: IOPS=4260, BW=16.6MiB/s (17.5MB/s)(999MiB/60001msec); 0 zone resets

clat (usec): min=45, max=61547, avg=231.80, stdev=375.67

lat (usec): min=45, max=61549, avg=232.20, stdev=375.89

clat percentiles (usec):

| 1.00th=[ 50], 5.00th=[ 62], 10.00th=[ 71], 20.00th=[ 83],

| 30.00th=[ 92], 40.00th=[ 105], 50.00th=[ 120], 60.00th=[ 141],

| 70.00th=[ 182], 80.00th=[ 408], 90.00th=[ 562], 95.00th=[ 652],

| 99.00th=[ 1156], 99.50th=[ 1614], 99.90th=[ 3228], 99.95th=[ 3949],

| 99.99th=[ 8356]

bw ( KiB/s): min= 5731, max=57736, per=100.00%, avg=17134.75, stdev=10517.79, samples=119

iops : min= 1432, max=14434, avg=4283.62, stdev=2629.50, samples=119

lat (usec) : 50=0.97%, 100=35.84%, 250=38.30%, 500=9.88%, 750=11.97%

lat (usec) : 1000=1.65%

lat (msec) : 2=1.08%, 4=0.27%, 10=0.04%, 20=0.01%, 50=0.01%

lat (msec) : 100=0.01%

cpu : usr=3.80%, sys=29.70%, ctx=241869, majf=0, minf=9

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,255638,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

WRITE: bw=16.6MiB/s (17.5MB/s), 16.6MiB/s-16.6MiB/s (17.5MB/s-17.5MB/s), io=999MiB (1047MB), run=60001-60001msec

VM conf

Code:

agent: 1

args: -object memory-backend-memfd,id=mem,size=2048M,share=on -numa node,memdev=mem -chardev socket,id=char0,path=/run/virtiofsd/2000-shared.sock -device vhost-user-fs-pci,chardev=char0,tag=2000-shared

boot: order=scsi0;ide2;net0

cores: 4

cpu: x86-64-v2-AES

hookscript: local:snippets/virtiofs_hook.pl

ide2: none,media=cdrom

memory: 2048

meta: creation-qemu=9.0.2,ctime=1732199146

name: ubuntu01

net0: virtio=02:BD:7F:BD:4B:11,bridge=vmbr0,firewall=1

numa: 0

ostype: l26

scsi0: local-zfs:vm-2000-disk-0,backup=0,size=32G

scsihw: virtio-scsi-single

smbios1: uuid=a32528e6-b7a5-4bf5-9cc1-e16b2b841445

sockets: 1

tpmstate0: local-zfs:vm-2000-disk-1,size=4M,version=v2.0

vmgenid: c40101bf-7d4a-4d6f-94cf-79fdefaed293

FYI, my fix for this was enabling hugepages support on the Proxmox host.

I don't have a guide or instructions for how to do this, as I pretty much bashed commands and config until it worked, but it is certainly related to hugepages, which I've set to 4 x 1 GB.

The benchmarks are now roughly 50% of what I get from the same test on the host, so that'll do me for now until I have time to tweak further.
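As a rough sanity check on the two fio runs posted above (my own reading, not something the poster stated): with iodepth=1 and the psync engine, each write has to finish before the next one is issued, so IOPS is bounded by roughly one second divided by the average latency. The helper name `iops_from_latency_us` is made up; the two latency values are the "lat ... avg" figures from the host and guest output.

```shell
#!/bin/sh
# Upper bound on IOPS when only one request is in flight at a time:
# 1,000,000 usec divided by the average latency per request.
# $1 = average latency in microseconds
iops_from_latency_us() {
    awk -v lat="$1" 'BEGIN { printf "%.0f\n", 1000000 / lat }'
}

iops_from_latency_us 7.63     # host:  avg lat from the fio output
iops_from_latency_us 232.20   # guest: avg lat from the fio output
```

The results land close to the 125k and 4260 IOPS that fio reported, which suggests this particular test mostly measures per-request round-trip latency through virtiofs rather than raw bandwidth. Larger block sizes or a higher queue depth would be one way to check that.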

What am I doing wrong? (I'm not a Perl expert.)

Got it working. Leaving last post for posterity, unless others suggest I delete it (sorry, I don't make my way into forums much anymore).

Anyway - THANK YOU, again, @sikha !

here's my modified script for Proxmox 8:

Perl:

    #!/usr/bin/perl

    use strict;
    use warnings;

    my %associations = (
        102 => ['/mnt/local'],
        # 101 => ['/zpool/audio', '/zpool/games'],
    );

    use PVE::QemuServer;

    use Template;
    my $tt = Template->new;

    print "GUEST HOOK: " . join(' ', @ARGV) . "\n";

    my $vmid = shift;
    my $conf = PVE::QemuConfig->load_config($vmid);
    my $vfs_args_file = "/run/$vmid.virtfs";
    my $virtiofsd_dir = "/run/virtiofsd/";
    my $DEBUG = 1;
    my $phase = shift;

    my $unit_tpl = "[Unit]
    Description=virtiofsd filesystem share at [% share %] for VM %i
    StopWhenUnneeded=true

    [Service]
    Type=simple
    RuntimeDirectory=virtiofsd
    PIDFile=/run/virtiofsd/.run.virtiofsd.%i-[% share_id %].sock.pid
    ExecStart=/usr/libexec/virtiofsd --log-level debug --socket-path /run/virtiofsd/%i-[% share_id %].sock --shared-dir [% share %] --cache=auto --announce-submounts --inode-file-handles=mandatory

    [Install]
    RequiredBy=%i.scope\n";

    if ($phase eq 'pre-start') {
        print "$vmid is starting, doing preparations.\n";

        my $vfs_args = "-object memory-backend-memfd,id=mem,size=$conf->{memory}M,share=on -numa node,memdev=mem";
        my $char_id = 0;

        # TODO: Have removal logic. Probably need to glob the systemd directory for matching files.
        for (@{$associations{$vmid}}) {
            my $share_id = $_ =~ s/^\///r =~ s/\//_/gr;
            my $unit_name = 'virtiofsd-' . $share_id;
            my $unit_file = '/etc/systemd/system/' . $unit_name . '@.service';
            print "attempting to install unit $unit_name...\n";
            if (not -d $virtiofsd_dir) {
                print "ERROR: $virtiofsd_dir does not exist!\n";
            }
            else { print "DIRECTORY DOES EXIST!\n"; }

            if (not -e $unit_file) {
                $tt->process(\$unit_tpl, { share => $_, share_id => $share_id }, $unit_file)
                    || die $tt->error(), "\n";
                system("/usr/bin/systemctl daemon-reload");
                system("/usr/bin/systemctl enable $unit_name\@$vmid.service");
            }
            system("/usr/bin/systemctl start $unit_name\@$vmid.service");
            $vfs_args .= " -chardev socket,id=char$char_id,path=/run/virtiofsd/$vmid-$share_id.sock";
            $vfs_args .= " -device vhost-user-fs-pci,chardev=char$char_id,tag=$share_id";
            $char_id += 1;
        }

        open(FH, '>', $vfs_args_file) or die $!;
        print FH $vfs_args;
        close(FH);

        print $vfs_args . "\n";
        if (defined($conf->{args}) && not $conf->{args} =~ /$vfs_args/) {
            print "Appending virtiofs arguments to VM args.\n";
            $conf->{args} .= " $vfs_args";
        } else {
            print "Setting VM args to generated virtiofs arguments.\n";
            print "vfs_args: $vfs_args\n" if $DEBUG;
            $conf->{args} = " $vfs_args";
        }
        PVE::QemuConfig->write_config($vmid, $conf);
    }
    elsif($phase eq 'post-start') {
        print "$vmid started successfully.\n";
        my $vfs_args = do {
            local $/ = undef;
            open my $fh, "<", $vfs_args_file or die $!;
            <$fh>;
        };

        if ($conf->{args} =~ /$vfs_args/) {
            print "Removing virtiofs arguments from VM args.\n";
            print "conf->args = $conf->{args}\n" if $DEBUG;
            print "vfs_args = $vfs_args\n" if $DEBUG;
            $conf->{args} =~ s/\ *$vfs_args//g;
            print $conf->{args};
            $conf->{args} = undef if $conf->{args} =~ /^$/;
            print "conf->args = $conf->{args}\n" if $DEBUG;
            PVE::QemuConfig->write_config($vmid, $conf) if defined($conf->{args});
        }
    }
    elsif($phase eq 'pre-stop') {
        #print "$vmid will be stopped.\n";
    }
    elsif($phase eq 'post-stop') {
        #print "$vmid stopped. Doing cleanup.\n";
    } else {
        die "got unknown phase '$phase'\n";
    }

    exit(0);

I had to modify:

1. ExecStart command with new args for virtiofsd (old args did not work, so new args may require some tuning)

2. Added a line to mkdir /run/virtiofsd/ if it doesn't exist (otherwise, virtiofsd couldn't write its socket)

3. post-start function to not rewrite the config (PVE::QemuConfig->write_config) if it's undefined

Bash:

root@proxmox:~# perl virtiofs.pl 100

GUEST HOOK: 100

Use of uninitialized value $phase in string eq at virtiofs.pl line 40.

Use of uninitialized value $phase in string eq at virtiofs.pl line 84.

Use of uninitialized value $phase in string eq at virtiofs.pl line 103.

Use of uninitialized value $phase in string eq at virtiofs.pl line 106.

Use of uninitialized value $phase in concatenation (.) or string at virtiofs.pl line 109.

got unknown phase ''
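The warnings above are what the script prints when it is run with only the VM id: it reads the vmid first and then the phase from its arguments, so `$phase` is undefined. A small sketch of a check for manual test runs follows; the function name `valid_phase` is made up, and the four phase names are the ones the script itself handles.

```shell
#!/bin/sh
# Hypothetical check for manual test runs of the hookscript.
# Returns 0 if the phase is one the script handles, 1 otherwise.
valid_phase() {
    case "$1" in
        pre-start|post-start|pre-stop|post-stop) return 0 ;;
        *) return 1 ;;
    esac
}

# First iteration has a phase, second one has none (as in the output above).
for phase in pre-start ""; do
    if valid_phase "$phase"; then
        echo "ok: perl virtiofs.pl 100 $phase"
    else
        echo "missing or unknown phase: '$phase'"
    fi
done
```

So a manual test would be invoked as `perl virtiofs.pl 100 pre-start` rather than `perl virtiofs.pl 100`.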

I switched to using the Rust version of virtiofsd back in PVE 7 and meant to do a write-up of the process but never got around to it.

The last time I touched this was back in May last year, so I don't remember much, but I use the following Perl hookscript to create a templated systemd service file for starting virtiofsd on a particular directory, then enable it for a particular VMID (so it launches whenever the VM launches and stops when it stops) and add arguments to the VM's configuration. It currently uses hardcoded associations and at the moment has no cleanup steps, but it may be helpful as a stepping stone for someone else. I might get around to completing it eventually; alternatively, if this is something I can implement within PVE itself, that might motivate me to fix it up and submit a changeset.

Edit: I forgot, there's already an in-queue implementation that doesn't make use of the VM's systemd scope.

Perl:

    #!/usr/bin/perl

    use strict;
    use warnings;

    my %associations = (
        100 => ['/zpool/audio', '/zpool/books', '/zpool/games', '/zpool/work'],
        101 => ['/zpool/audio', '/zpool/games'],
    );

    use PVE::QemuServer;

    use Template;
    my $tt = Template->new;

    print "GUEST HOOK: " . join(' ', @ARGV) . "\n";

    my $vmid = shift;
    my $conf = PVE::QemuConfig->load_config($vmid);
    my $vfs_args_file = "/run/$vmid.virtfs";
    my $phase = shift;

    my $unit_tpl = "[Unit]
    Description=virtiofsd filesystem share at [% share %] for VM %i
    StopWhenUnneeded=true

    [Service]
    Type=simple
    PIDFile=/run/virtiofsd/.run.virtiofsd.%i-[% share_id %].sock.pid
    ExecStart=/usr/lib/kvm/virtiofsd -f --socket-path=/run/virtiofsd/%i-[% share_id %].sock -o source=[% share %] -o cache=always

    [Install]
    RequiredBy=%i.scope\n";

    if ($phase eq 'pre-start') {
        print "$vmid is starting, doing preparations.\n";

        my $vfs_args = "-object memory-backend-memfd,id=mem,size=$conf->{memory}M,share=on -numa node,memdev=mem";
        my $char_id = 0;

        # TODO: Have removal logic. Probably need to glob the systemd directory for matching files.
        for (@{$associations{$vmid}}) {
            my $share_id = $_ =~ s/^\///r =~ s/\//_/gr;
            my $unit_name = 'virtiofsd-' . $share_id;
            my $unit_file = '/etc/systemd/system/' . $unit_name . '@.service';
            print "attempting to install unit $unit_name...\n";
            if (not -e $unit_file) {
                $tt->process(\$unit_tpl, { share => $_, share_id => $share_id }, $unit_file)
                    || die $tt->error(), "\n";
                system("/usr/bin/systemctl daemon-reload");
                system("/usr/bin/systemctl enable $unit_name\@$vmid.service");
            }
            system("/usr/bin/systemctl start $unit_name\@$vmid.service");
            $vfs_args .= " -chardev socket,id=char$char_id,path=/run/virtiofsd/$vmid-$share_id.sock";
            $vfs_args .= " -device vhost-user-fs-pci,chardev=char$char_id,tag=$share_id";
            $char_id += 1;
        }

        open(FH, '>', $vfs_args_file) or die $!;
        print FH $vfs_args;
        close(FH);

        print $vfs_args . "\n";
        if (defined($conf->{args}) && not $conf->{args} =~ /$vfs_args/) {
            print "Appending virtiofs arguments to VM args.\n";
            $conf->{args} .= " $vfs_args";
        } else {
            print "Setting VM args to generated virtiofs arguments.\n";
            $conf->{args} = " $vfs_args";
        }
        PVE::QemuConfig->write_config($vmid, $conf);
    }
    elsif($phase eq 'post-start') {
        print "$vmid started successfully.\n";
        my $vfs_args = do {
            local $/ = undef;
            open my $fh, "<", $vfs_args_file or die $!;
            <$fh>;
        };

        if ($conf->{args} =~ /$vfs_args/) {
            print "Removing virtiofs arguments from VM args.\n";
            $conf->{args} =~ s/\ *$vfs_args//g;
            print $conf->{args};
            $conf->{args} = undef if $conf->{args} =~ /^$/;
            PVE::QemuConfig->write_config($vmid, $conf);
        }
    }
    elsif($phase eq 'pre-stop') {
        #print "$vmid will be stopped.\n";
    }
    elsif($phase eq 'post-stop') {
        #print "$vmid stopped. Doing cleanup.\n";
    } else {
        die "got unknown phase '$phase'\n";
    }

    exit(0);

I have yet to use this with PVE 8, however. If I understand correctly, the key difference is just `/usr/lib/kvm/virtiofsd` changes to `/usr/libexec/virtiofsd`. But I'll eventually test things out on PVE 8 and report back.

So it's been a while since I've visited but I noticed that this script has been picked up in a few places. My fault for just haphazardly posting it on a whim and not specifying its license, I guess, but this script is GPLv3 licensed (Copyright 2022 路宇図シカ). Which means this license would apply to @Drallas's redistribution/modifications and anyone else who is also redistributing it.

The original post does not seem to be editable anymore unfortunately.

It's nice to see that it's been helpful to a number of people over the past year.