Hey there,

I have a Proxmox VE host (pve-manager/6.2-4/9824574a (running kernel: 5.4.41-1-pve) which gives me some trouble without direct indication as to why.

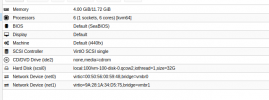

The host hardware is the following:

From time to time (random intervals, sometimes once within a days, other times every hour for a certain duration) it seems the VMs and CTs (not the OPNSense VM and not the host) are losing network connectivity for a split-second (failed name resolutions, pings from the outside don't go through, packet loss on the vmbr1 LAN interface)

After a few days of checking on my OPNSense installation and even paying for support, nothing was found on that end. Today I notice very high Load Average spikes for the host, which don't match the rest of the utilization but might be the cause.

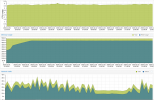

Load averages range from a normal 3 - 4, up to a 20+

The outputs below were generated during a high phase (24+)

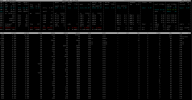

iostat -x 5

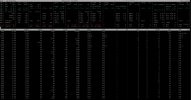

pveperf /var/lib/vz

vmstat 1

Nothing special in dmesg or journald

Graphs from the UI:

The VMs and CTs don't run anything special (most of them only have 1-2 cores and 512MB RAM and idle), there is also no replication or backup running on the host (or guests) currently.

Any pointers would be apreciated - maybe not for the dropped virtual LAN, but at least to understand how it jumps from an average of 3 to 24

I have a Proxmox VE host (pve-manager/6.2-4/9824574a, running kernel 5.4.41-1-pve) which is giving me some trouble without any direct indication as to why.

The host hardware is the following:

- Ryzen 5 3600 (6 cores/12 threads)

- 2x SAMSUNG MZVLB512HAJQ - 512GB NVMe as RAID1

- 1x TOSHIBA MG06ACA1 - 10TB HDD

- 1x SAMSUNG MZ7LH960 - 960GB Datacenter SSD

- 64GB DDR4

- Intel I210 network card

From time to time (at random intervals, sometimes once within a day, other times every hour for a certain duration) the VMs and CTs (but not the OPNsense VM and not the host) seem to lose network connectivity for a split second: name resolutions fail, pings from the outside don't go through, and there is packet loss on the vmbr1 LAN interface.

After a few days of checking my OPNsense installation, and even paying for support, nothing was found on that end. Today I noticed very high load average spikes on the host, which don't match the rest of the utilization but might be the cause.

Load averages range from a normal 3-4 up to 20+.
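To put those numbers in relation to the hardware: the Linux load average counts tasks that are runnable plus tasks in uninterruptible sleep, so it is usually read against the number of logical CPUs (12 threads on this host). Below is a minimal sketch of that normalization. `load_per_cpu` is a hypothetical helper, and the sample line is made up in the `/proc/loadavg` format to resemble the figures above; it is not copied from the host.

```python
def load_per_cpu(loadavg_line, logical_cpus):
    """Divide the 1/5/15-minute load averages by the logical CPU count.

    loadavg_line is text in the /proc/loadavg format, e.g.
    "24.00 12.00 6.00 3/812 12345" (only the first three fields are used).
    """
    one, five, fifteen = (float(x) for x in loadavg_line.split()[:3])
    return tuple(round(v / logical_cpus, 2) for v in (one, five, fifteen))


if __name__ == "__main__":
    # Hypothetical sample line, not real output from the host.
    sample = "24.00 12.00 6.00 3/812 12345"
    # 12 logical CPUs: Ryzen 5 3600, 6 cores / 12 threads.
    print(load_per_cpu(sample, 12))
```

Read this way, a load of 3-4 is roughly 0.25-0.33 per thread, while 24 is about 2 per thread, so during a spike there would be about twice as many runnable or blocked tasks as hardware threads.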

The outputs below were generated during a high phase (24+)

iostat -x 5

Code:

Device      r/s    w/s  rkB/s    wkB/s rrqm/s wrqm/s %rrqm %wrqm r_await w_await aqu-sz rareq-sz wareq-sz svctm %util
loop0      0.00   2.00   0.00     8.00   0.00   0.00  0.00  0.00    0.00    0.20   0.00     0.00     4.00  0.80  0.16
loop1      0.00   1.20   0.00     4.80   0.00   0.00  0.00  0.00    0.00    0.17   0.00     0.00     4.00  1.33  0.16
loop2      0.00   0.00   0.00     0.00   0.00   0.00  0.00  0.00    0.00    0.00   0.00     0.00     0.00  0.00  0.00
loop3      0.00   0.00   0.00     0.00   0.00   0.00  0.00  0.00    0.00    0.00   0.00     0.00     0.00  0.00  0.00
nvme0n1    1.60  72.20  24.00  4108.40   0.00   7.80  0.00  9.75    0.12    0.47   0.00    15.00    56.90  2.20 16.24
nvme1n1    0.00  72.20   0.00  4108.40   0.00   7.80  0.00  9.75    0.00    0.42   0.00     0.00    56.90  2.25 16.24
md2        1.60  78.20  24.00  4104.80   0.00   0.00  0.00  0.00    0.00    0.00   0.00    15.00    52.49  0.00  0.00
md1        0.00   0.00   0.00     0.00   0.00   0.00  0.00  0.00    0.00    0.00   0.00     0.00     0.00  0.00  0.00
md0        0.00   0.00   0.00     0.00   0.00   0.00  0.00  0.00    0.00    0.00   0.00     0.00     0.00  0.00  0.00
sda        0.00   0.00   0.00     0.00   0.00   0.00  0.00  0.00    0.00    0.00   0.00     0.00     0.00  0.00  0.00
sdb        0.60  32.00   2.40   173.60   0.00   3.60  0.00 10.11    0.33    0.06   0.00     4.00     5.42  0.93  3.04
dm-0       0.00   0.00   0.00     0.00   0.00   0.00  0.00  0.00    0.00    0.00   0.00     0.00     0.00  0.00  0.00

pveperf /var/lib/vz

Code:

CPU BOGOMIPS:      86238.48
REGEX/SECOND:      3353685
HD SIZE:           436.34 GB (/dev/md2)
BUFFERED READS:    1468.38 MB/sec
AVERAGE SEEK TIME: 0.09 ms
FSYNCS/SECOND:     277.27
DNS EXT:           364.28 ms
DNS INT:           345.99 ms

vmstat 1

Code:

procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----
 r  b    swpd     free   buff   cache   si   so    bi    bo     in      cs us sy id wa st
 2  0 1198592 12683312  43112 1328444    0    0     4  4358  80883  184020 38 10 52  0  0
 1  0 1198592 12683452  43136 1326532    0    0     0  4235  82798  192389 38 11 51  0  0
 5  0 1198592 12681392  43144 1329056    0    0    48  4064  85589  191985 47 12 41  0  0
 2  0 1198592 12681596  43152 1329480    0    0     0  5169  77190  161692 29  6 64  0  0
 2  0 1198592 12681832  43168 1330008    0    0    80  5317  75106  156471 21  6 73  0  0
 3  0 1198592 12681892  43192 1330224    0    0     4  1536  74959  156326 23  6 71  0  0
 2  0 1198592 12681652  43216 1330572    0    0     4   734  74329  154642 20  5 74  0  0
 3  0 1198592 12681232  43224 1330744    0    0    48   595  75263  160303 23  6 71  0  0
 8  0 1198592 12680628  43232 1331332    0    0   104  3006  76174  158956 29  7 65  0  0
 1  0 1198592 12681868  43256 1331768    4    0    28   628  77327  156333 28  8 64  0  0
 3  0 1198592 12678752  43304 1332240    0    0    68  1477  77001  171764 30  7 63  0  0
 8  0 1198592 12679388  43360 1333212    0    0   172  2953  79669  173986 36  9 54  0  0
 5  0 1198592 12679224  43368 1333824    0    0     4   687  77106  169109 31  9 60  0  0
 2  0 1198592 12676340  43424 1334348    0    0    72  1490  76611  166185 26  8 66  0  0
 0  0 1198592 12665992  43452 1334876    0    0    40  1266  81597  194728 32 10 58  0  0
 1  0 1198592 12667492  43452 1335308    0    0     4   986  88513  212946 48 15 36  0  0
 2  0 1198592 12667744  43492 1335832    0    0   104  2020  77761  176221 21  7 71  0  0
 4  0 1198592 12672260  43508 1336116    0    0     0   759  78894  176994 28 10 63  0  0
 2  0 1198592 12671760  43532 1336516    0    0    52  1189  82058  192067 33 12 54  0  0
 5  0 1198592 12674836  43556 1337208    0    0    84  1150  77534  167231 25 10 65  0  0
 2  0 1198592 12673764  43580 1337616    0    0   108  1769  79338  186212 28 10 62  0  0
 0  0 1198592 12672992  43596 1338548    0    0    40  1432  80418  194020 30 11 59  0  0
 4  0 1198592 12673516  43660 1339224    0    0    48  1679  76585  167502 19  8 72  1  0

Nothing special in dmesg or journald.
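As a rough cross-check of the vmstat samples, a short script can summarize the run queue (`r`), blocked tasks (`b`), and context switches (`cs`). This is only a sketch: `parse_vmstat` and `summarize` are hypothetical helpers, and `SAMPLE` holds just the first three rows of the vmstat output above.

```python
from statistics import mean

# Column order as printed in the `vmstat 1` header above.
COLUMNS = ["r", "b", "swpd", "free", "buff", "cache", "si", "so",
           "bi", "bo", "in", "cs", "us", "sy", "id", "wa", "st"]


def parse_vmstat(text):
    """Return one dict per numeric sample row; header lines are skipped."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == len(COLUMNS) and all(f.isdigit() for f in fields):
            rows.append(dict(zip(COLUMNS, map(int, fields))))
    return rows


def summarize(rows):
    """Peak run queue, peak blocked tasks, mean context switches and idle %."""
    return {
        "samples": len(rows),
        "max_runnable": max(r["r"] for r in rows),
        "max_blocked": max(r["b"] for r in rows),
        "mean_cs": mean(r["cs"] for r in rows),
        "mean_idle": mean(r["id"] for r in rows),
    }


# First three sample rows from the vmstat output in this post.
SAMPLE = """\
procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----
r b swpd free buff cache si so bi bo in cs us sy id wa st
2 0 1198592 12683312 43112 1328444 0 0 4 4358 80883 184020 38 10 52 0 0
1 0 1198592 12683452 43136 1326532 0 0 0 4235 82798 192389 38 11 51 0 0
5 0 1198592 12681392 43144 1329056 0 0 48 4064 85589 191985 47 12 41 0 0
"""

if __name__ == "__main__":
    print(summarize(parse_vmstat(SAMPLE)))
```

For these three rows the run queue peaks at 5 and no task is blocked on I/O, which is well below a load average of 24. Pasting the full capture into `SAMPLE` gives the same kind of summary for the whole run.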

Graphs from the UI:

The VMs and CTs don't run anything special (most of them have only 1-2 cores and 512MB RAM and sit idle). There is also no replication or backup currently running on the host or the guests.

Any pointers would be appreciated, maybe not for the dropped virtual LAN, but at least to understand how the load jumps from an average of 3 to 24.
