Hello dear Proxmox,

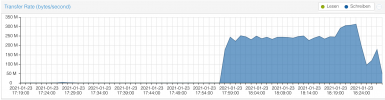

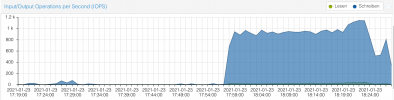

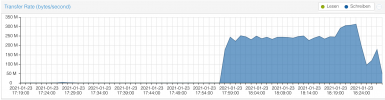

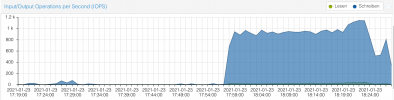

For our university's institute (law...), I had to build a new server infrastructure for VMs. Since we have been using Proxmox successfully for a couple of years now, I naturally built the new infrastructure with Proxmox again:

Our setup:

- 2 Proxmox VE nodes with local ZFS (using SSDs for ZIL and cache) and storage replication to each other
- 1 Proxmox Backup Server with local ZFS storage (using SSDs for ZIL and cache)
- 1 older NetApp storage system that we use via NFS

Backup speed benchmarks

Each system's speed seems to be quite sufficient:

```
# proxmox-backup-client benchmark on backup-server directly:
Time per request: 4962 microseconds.
TLS speed: 845.12 MB/s
SHA256 speed: 419.69 MB/s
Compression speed: 612.28 MB/s
Decompress speed: 984.28 MB/s
AES256/GCM speed: 2524.01 MB/s
Verify speed: 294.12 MB/s
```

But from within a VM that is in the same LAN (but a different VLAN), it looks a bit different, though still OK:

```
# proxmox-backup-client benchmark from VM:
Time per request: 35574 microseconds.
TLS speed: 117.90 MB/s
SHA256 speed: 346.01 MB/s
Compression speed: 420.34 MB/s
Decompress speed: 658.98 MB/s
AES256/GCM speed: 1557.17 MB/s
Verify speed: 233.52 MB/s
```

Ping from that VM to the Proxmox Backup Server is around 0.3 ms.
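A quick back-of-the-envelope check on the two benchmark runs: in both, TLS speed multiplied by time per request comes out at about 4 MiB, so the benchmark appears to upload fixed-size blocks of roughly that size (an inference from the numbers, not documented behaviour). With a 0.3 ms ping, the 35.6 ms per request from the VM is dominated by transfer time rather than round-trip latency, and 117.90 MB/s is about 943 Mbit/s. That would be consistent with a saturated 1 Gbit/s path between the VM and the backup server; the link speed is an assumption, since it isn't stated in the post.

```python
# Back-of-the-envelope check of the two `proxmox-backup-client benchmark` runs.
# Assumption (inferred, not documented): TLS speed ~= payload per request / time per request.
# Assumption: the VM's path to the backup server is 1 Gbit/s (not stated in the post).

runs = {
    # name: (time per request in microseconds, reported TLS speed in MB/s)
    "backup server (local)": (4962, 845.12),
    "VM (same LAN, other VLAN)": (35574, 117.90),
}


def payload_mib(time_per_request_us: float, tls_mb_s: float) -> float:
    """Implied payload per request in MiB, derived from the reported numbers."""
    payload_bytes = tls_mb_s * 1e6 * time_per_request_us * 1e-6
    return payload_bytes / 2**20


def to_mbit(mb_s: float) -> float:
    """Convert decimal MB/s to Mbit/s."""
    return mb_s * 8


def transfer_ms(payload: float, link_gbit_s: float) -> float:
    """Time in ms to push `payload` MiB through a link, ignoring protocol overhead."""
    return payload * 2**20 * 8 / (link_gbit_s * 1e9) * 1000


for name, (t_us, speed) in runs.items():
    print(f"{name}: ~{payload_mib(t_us, speed):.2f} MiB/request, {to_mbit(speed):.0f} Mbit/s")

# Ideal wire time for one 4 MiB request on an (assumed) 1 Gbit/s link,
# to compare with the measured 35574 microseconds from the VM.
print(f"4 MiB over 1 Gbit/s: {transfer_ms(4, 1):.1f} ms")
```

Both runs work out to about 4.00 MiB per request, and 4 MiB needs roughly 33.6 ms on a 1 Gbit/s wire, close to the 35.6 ms measured from the VM.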

The issue

I am not sure whether the TLS speed of roughly 120 MB/s above is related to bug 2983 (I checked all backup server and client versions; we are on 1.0.6), and I don't necessarily think that 120 MB/s is too slow. After the initial 22-hour backup of our Nextcloud users' files (1.8 TB, thousands upon thousands of typical small files such as .docx and PDFs...), I wouldn't be too worried about a huge amount of changed data having to be sent over the LAN for each follow-up backup.
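Putting the numbers from this paragraph next to the VM benchmark: 1.8 TB in 22 hours averages out to roughly 23 MB/s, about a fifth of the 117.90 MB/s TLS rate measured from the VM, while moving the same data at the benchmark rate would take a bit over 4 hours. If that reading is right, raw transfer speed is unlikely to be the main limit, and per-file overhead (for example NFS metadata lookups and small-file reads) is a more plausible suspect. This is an inference, not a measurement, and it assumes "1.8 TB" means decimal terabytes.

```python
# Average throughput of the initial file-level backup vs. the VM's TLS benchmark.
# Assumption: "1.8 TB" is decimal terabytes (10**12 bytes).

TB = 10**12
HOUR = 3600

data_bytes = 1.8 * TB
duration_s = 22 * HOUR
tls_benchmark_mb_s = 117.90  # TLS speed from the VM benchmark

# Observed average rate of the initial backup, in MB/s
avg_mb_s = data_bytes / duration_s / 1e6
# How long the same amount of data would take at the benchmark rate
ideal_hours = data_bytes / (tls_benchmark_mb_s * 1e6) / HOUR

print(f"average: {avg_mb_s:.1f} MB/s ({avg_mb_s / tls_benchmark_mb_s:.0%} of TLS benchmark)")
print(f"same data at the TLS benchmark rate: {ideal_hours:.1f} h")
```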

The problem is that follow-up backups take almost as long, because scanning all those files for actual changes takes nearly the same amount of time. This would mean the VM would be in a constant backup loop if I wanted daily backups.
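One possible explanation for why a follow-up run takes nearly as long as the first: if the client reads and chunks every file's content on each run and relies on server-side deduplication only to skip uploading unchanged chunks (our understanding of file-level pxar backups in the 1.0.x client; treat it as an assumption), then the full 1.8 TB still has to be read over NFS every time, even when little has changed. The hypothetical sketch below contrasts that with a metadata-only pass that compares size and mtime against a previous run and never opens file contents. The function names are made up for illustration; this is not how proxmox-backup-client is implemented.

```python
import hashlib
import tempfile
from pathlib import Path

# Hypothetical illustration only; not proxmox-backup-client's implementation.


def full_read_scan(root: Path) -> tuple[dict[str, str], int]:
    """Hash every file's content: cost grows with the total bytes read."""
    digests, bytes_read = {}, 0
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        h = hashlib.sha256()
        with path.open("rb") as f:
            for block in iter(lambda: f.read(1 << 20), b""):  # 1 MiB reads
                h.update(block)
                bytes_read += len(block)
        digests[str(path.relative_to(root))] = h.hexdigest()
    return digests, bytes_read


def metadata_scan(
    root: Path, previous: dict[str, tuple[int, int]]
) -> tuple[dict[str, tuple[int, int]], list[str]]:
    """Compare (size, mtime_ns) with a previous run using only stat() calls."""
    current, changed = {}, []
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        st = path.stat()
        key = str(path.relative_to(root))
        current[key] = (st.st_size, st.st_mtime_ns)
        if previous.get(key) != current[key]:
            changed.append(key)
    return current, changed


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        root = Path(d)
        (root / "a.docx").write_bytes(b"x" * 1000)
        (root / "b.pdf").write_bytes(b"y" * 2000)
        _, read = full_read_scan(root)
        print("bytes read by full scan:", read)
        snapshot, changed = metadata_scan(root, {})
        print("changed on first run:", changed)
        _, changed = metadata_scan(root, snapshot)
        print("changed on second run:", changed)
```

With many small files on NFS, even the metadata pass pays a network round trip per stat(), but it avoids re-reading file contents that have not changed.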

The Nextcloud user files are on an NFS share (NetApp) mounted in the VM that runs the backup client job. I am planning to move the files from the NetApp onto the local ZFS of the nodes. For the actual data migration, I wanted to temporarily stop our Nextcloud service, create a final backup, and then restore the files into a freshly built VM on the nodes' local storage. But a follow-up backup takes too long, and stopping our Nextcloud service for that whole duration isn't acceptable.

Any ideas on how to speed up the whole process? Where do you think the bottleneck is? Do you think backing up all these files will become a lot faster once the data has been moved to the local ZFS?

Thanks in advance for your thoughts on this,

Martin

PS: Here is some speed information for a 320 GB VM backup from one of the nodes to the backup server:

```
# above backup job's results:
101: 2021-01-23 18:33:38 INFO: backup is sparse: 145.35 GiB (45%) total zero data
101: 2021-01-23 18:33:38 INFO: backup was done incrementally, reused 145.35 GiB (45%)
101: 2021-01-23 18:33:38 INFO: transferred 320.00 GiB in 2137 seconds (153.3 MiB/s)
101: 2021-01-23 18:33:38 INFO: Finished Backup of VM 101 (00:35:38)
```

On the backup server:
