Okay, let me preface this by saying I'm not new to Proxmox, and I've run it on lots of Dell servers. I've also been working on this for a few days, so I might have missed a step or two of the actual troubleshooting.

This issue only affects UEFI boot, not BIOS boot.
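When comparing servers, it helps to confirm which mode an installed system actually booted in. The Linux kernel only exposes `/sys/firmware/efi` when it was started by UEFI firmware, so a minimal check can look like this (the function name `boot_mode` is just illustrative):

```python
from pathlib import Path


def boot_mode(efi_dir: str = "/sys/firmware/efi") -> str:
    """Return "UEFI" if the EFI sysfs directory exists, else "BIOS".

    The kernel only creates /sys/firmware/efi when booted via UEFI,
    so its absence means a legacy BIOS boot.
    """
    return "UEFI" if Path(efi_dir).is_dir() else "BIOS"


if __name__ == "__main__":
    print(f"Booted in {boot_mode()} mode")
```

On a working node this should report UEFI if the ZFS-on-root install is really using the UEFI path.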

The setup is an 11-node cluster made up of 3 Dell R620s, 4 Dell R630s, and 4 Supermicro servers.

The 4 Supermicro servers made up a 24-drive all-SSD cluster that I've decided to retire, moving to a hyper-converged setup on the Dells to save power. During this transition, I'm pulling the 3 R620s and replacing them with R630s, so the end configuration will be 7 R630s with 10 bays each, 7 of them dedicated to Ceph SSDs.

Because I'm replacing the PERC 730s with LSI 9300-8i cards in IT mode, I'm taking each server out one at a time, swapping the storage controller, reinstalling Proxmox, and adding it back into the cluster under the same name. I've done this many times over the years with no issues.

The first two servers went without a hitch: Proxmox 7.4 installed from the USB drive, and I was able to set up ZFS on root using UEFI. The next server I tried failed; no matter what I attempted, it would install but wouldn't boot afterward. There were about two months between the first two servers and this one. When I came back to it, I originally tried to install over iDRAC with a virtual disc, then remembered that this fails and that I had to boot from USB for UEFI to work. So I tried another server, and it failed as well. Fed up, I pulled that one from the rack and took it home, where I'd have more time to mess with it. Once I had it home and powered on, I put the USB drive back in, connected to the server through the IPMI, and completed the install, and the server rebooted into a working Proxmox with no issue. I scratched my head over this and decided that maybe the USB drive had been the problem, since I'd reformatted it and written the ISO back onto it.

I took this working server back to the data center, racked it, and everything was perfect. It joined the cluster, I installed Ceph, and then I did something sketchy: I moved the drives over from another Ceph node, discovered the physical volumes and then the logical volumes, and started all the Ceph OSDs. Boom, the server was online and had replaced one of the existing Supermicros. Don't try this unless you're willing to lose data. YMMV.
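The OSD move described above roughly maps to standard LVM and `ceph-volume` commands. The sequence below is an assumption, not a record of what was actually run, and it assumes the moved disks hold LVM-based OSDs created by `ceph-volume`. The helper name `adopt_osds` is hypothetical, and it defaults to a dry run that only prints the commands:

```python
import shlex
import subprocess
from typing import List

# Assumed sequence; the exact commands used on the real node are not known.
STEPS = [
    ["pvscan"],                                   # rescan LVM physical volumes on the moved disks
    ["vgchange", "-ay"],                          # activate volume groups so their LVs appear
    ["lvscan"],                                   # list the logical volumes (the OSD volumes)
    ["ceph-volume", "lvm", "activate", "--all"],  # activate every OSD ceph-volume can find
]


def adopt_osds(dry_run: bool = True) -> List[str]:
    """Print (and optionally execute) the steps to bring moved OSD disks online.

    With dry_run=True nothing is executed; the rendered commands are returned.
    """
    rendered = []
    for cmd in STEPS:
        line = shlex.join(cmd)
        rendered.append(line)
        if dry_run:
            print(f"[dry run] {line}")
        else:
            subprocess.run(cmd, check=True)  # stop at the first failing step
    return rendered


if __name__ == "__main__":
    adopt_osds(dry_run=True)
```

Same warning as above applies: only run it for real if you are prepared to lose the data on those OSDs.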

OK, now back to the first server that won't boot after installation. Using the same USB stick as the previous day, I did the install, and the server hung on the first UEFI boot.

I've tried upgrading the iDRAC, Lifecycle Controller, and BIOS one release at a time while also trying every Proxmox version from 6.4 to 8.0, and I've tried going back down on the iDRAC, Lifecycle Controller, and BIOS and going through all the Proxmox versions again each time.

The three servers that have completed the installation with the new LSI cards are running:

- BIOS 2.13.0

- Firmware 2.82.82.82

- Lifecycle Controller Firmware 2.82.82.82

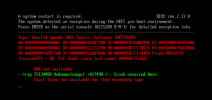

On this server, BIOS 2.13.0 with that firmware causes a UEFI boot exception and crashes. The only similar information I can find from Dell is a known issue that occurs if the firmware isn't upgraded in the correct order: https://www.dell.com/support/kbdoc/...protection-fault-during-uefi-pre-boot-startup

Dell says that, to prevent this issue, updates should be performed in the following order:

- iDRAC

- LCC

- BIOS
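When staging several update packages at once, it is easy to flash them out of order. A small sketch that sorts pending updates into Dell's iDRAC, LCC, BIOS order; the function name and the dictionary layout are hypothetical, and treating the "firmware" version above as the iDRAC version is an assumption:

```python
from typing import Dict, List, Tuple

# Order from the Dell KB article: iDRAC first, then LCC, then BIOS.
DELL_ORDER = ("iDRAC", "LCC", "BIOS")


def order_updates(pending: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return (component, target_version) pairs in Dell's recommended order.

    Unknown component names raise ValueError so nothing gets flashed
    in an unplanned position by accident.
    """
    unknown = set(pending) - set(DELL_ORDER)
    if unknown:
        raise ValueError(f"no known position for: {sorted(unknown)}")
    return [(name, pending[name]) for name in DELL_ORDER if name in pending]


if __name__ == "__main__":
    plan = order_updates({"BIOS": "2.17.0", "iDRAC": "2.84.84.84"})
    for step, (name, version) in enumerate(plan, start=1):
        print(f"{step}. {name} -> {version}")
```

Here the plan comes out as iDRAC 2.84.84.84 first and BIOS 2.17.0 second, regardless of the order the packages were listed in.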

On BIOS 2.17.0 and firmware 2.84.84.84, the latest for this server, it hangs after enumerating boot devices and won't continue booting. At least with 2.13.0 I get an error code.

What I don't understand is how one server can work while the one I'm trying to install fails with the same BIOS and firmware.
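Since the BIOS, iDRAC, and Lifecycle Controller versions match, one way to look for the real difference is to copy every component version from each server's iDRAC firmware inventory (HBA, NICs, and so on) and diff the two lists. A sketch with hypothetical, hand-entered inventories; only the BIOS/firmware/LCC values come from this post, and the `HBA` entries are placeholders:

```python
from typing import Dict, Optional, Tuple


def diff_inventory(
    working: Dict[str, str], failing: Dict[str, str]
) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return every component whose version differs between two servers.

    Components present on only one server show up with None on the other side.
    """
    components = sorted(set(working) | set(failing))
    return {
        name: (working.get(name), failing.get(name))
        for name in components
        if working.get(name) != failing.get(name)
    }


if __name__ == "__main__":
    # Hypothetical inventories; "fw-A"/"fw-B" stand in for real HBA firmware versions.
    working = {"BIOS": "2.13.0", "iDRAC": "2.82.82.82", "LCC": "2.82.82.82", "HBA": "fw-A"}
    failing = {"BIOS": "2.13.0", "iDRAC": "2.82.82.82", "LCC": "2.82.82.82", "HBA": "fw-B"}
    print(diff_inventory(working, failing))  # {'HBA': ('fw-A', 'fw-B')}
```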

Tonight I'm going to drop to one release older than the versions I want to end up on and then upgrade once more in the order Dell specifies. Hopefully this will work, or someone here can shed some light on something I've missed.

After this one, I've got 3 more new-to-me servers to do. Trust me, the thought of mdadm and vanilla Debian has crossed my mind, since I don't really need ZFS on root. I just need an easy way to fail over; GRUB on both boot drives plus a hot-swap has worked for many years.
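For comparison, the mdadm route boils down to mirroring the root partition and installing GRUB on both drives so either one can boot on its own. A dry-run sketch that only builds the command strings; the disk names, partition number, and `/dev/md0` are hypothetical, and it assumes legacy BIOS boot with `sdX`-style device names (NVMe devices would need a `p` before the partition number):

```python
import shlex
from typing import List


def mirror_boot_commands(disks: List[str], part: int = 2, md: str = "/dev/md0") -> List[str]:
    """Build (but do not run) commands for an mdadm RAID1 with GRUB on every disk.

    Assumes partition `part` on each disk is the intended RAID member and that
    the machine boots in legacy BIOS mode; both are illustrative assumptions.
    """
    if len(disks) < 2:
        raise ValueError("need at least two disks to mirror")
    members = [f"{disk}{part}" for disk in disks]
    commands = [
        ["mdadm", "--create", md, "--level=1", f"--raid-devices={len(members)}", *members],
    ]
    # GRUB on each disk lets the box boot from whichever drive is still present.
    commands += [["grub-install", disk] for disk in disks]
    return [shlex.join(cmd) for cmd in commands]


if __name__ == "__main__":
    for line in mirror_boot_commands(["/dev/sda", "/dev/sdb"]):
        print(line)
```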