[TUTORIAL] Tutorial: Unprivileged LXCs - Mount CIFS shares

- Thread starter TheHellSite

> I am making some progress on this. I'm able to get everything working, except for Plex being able to see the contents of the directory. It's weird, as I can go to the console of Plex and see the mount and the files, but Plex says the directory is empty. Any ideas?

It seems that I have a similar issue with Jellyfin. I've added the user "jellyfin" to the lxc_shares group (10000) and the web page sees the mounted folder; as root in the console I have full read/write access, but the library scan doesn't work.

Did you find a solution? Maybe I'll also add the LXC root to the shared group, but at this point I guess it's almost the same issue as having a privileged container...

I haven't. I've pretty much given up. I found it a lot easier to spin up a VM and mount the shares, and then everything seems to work. I'll try again when I have some time off over the holidays. It really shouldn't be so difficult.
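The group numbers in these posts follow from how unprivileged containers map IDs: by default, container UID/GID `N` is UID/GID `100000 + N` on the Proxmox host, so container root is host UID 100000 and the container's `lxc_shares` group (10000) is host GID 110000. Adding a user to `lxc_shares` inside the container therefore only helps if the host-side mount hands the files to group 110000 with group permissions. A minimal sketch of that arithmetic, assuming the default mapping (a custom `lxc.idmap` changes it); `host_id` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: map a UID/GID inside an unprivileged container to the host ID,
# ASSUMING the default Proxmox mapping: container 0-65535 -> host 100000-165535.
ID_BASE=100000  # assumed offset (default root:100000:65536 in /etc/subuid, /etc/subgid)
ID_RANGE=65536  # assumed size of the mapped range

host_id() {
  local ct_id=$1
  if [ "$ct_id" -lt 0 ] || [ "$ct_id" -ge "$ID_RANGE" ]; then
    echo "container id $ct_id is outside the mapped range" >&2
    return 1
  fi
  echo $(( ID_BASE + ct_id ))
}

host_id 0      # container root       -> prints 100000
host_id 10000  # container lxc_shares -> prints 110000
```

IDs outside the mapped range have no host counterpart, which is also why host-owned files with unmapped owners show up as `nobody:nogroup` inside the container.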

I've got the same issue many others in here have described. I can see the files/folders from the Plex LXC as root (via the shell), but Plex cannot (via the GUI). My SMB LXC was created with Cockpit using this guide; that LXC and GUI setup is nice to work with.

I've read through everything on here and can't see anything that's glaringly different in my config. The user 'plex' is in the lxc_shares group mentioned in the beginning, but root is not, so it's a bit confusing as to what's happening.

I was able to get the SMB share to work on an Ubuntu VM. It was very finicky and took a few reboots and lots of poking around. This at least lets me get my Plex up until the LXC issue is resolved.

Process followed on VM:

Used this guide a bit

mkdir /plexmedia /plexmedia/tv /plexmedia/movies

chmod -R 755 /plexmedia/*

My fstab:

//myip/plexmedia /plexmedia cifs auto,defaults,nofail,credentials=/etc/plex.cred,uid=plex 0 0

My plex.cred:

username=plex

password=mypassword
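Since the fstab line points `credentials=` at `/etc/plex.cred`, that file holds the SMB password in plain text, so it is worth making it readable by root only. A minimal sketch, assuming the path and placeholder values from this post; `write_cifs_creds` is a hypothetical helper, not part of any tool:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a mount.cifs credentials file readable only by its owner.
write_cifs_creds() {
  local path=$1 user=$2 pass=$3
  # Create/truncate the file with a restrictive umask before any secret goes in
  ( umask 077 && : > "$path" ) || return 1
  chmod 600 "$path"  # also tightens an already existing file
  printf 'username=%s\npassword=%s\n' "$user" "$pass" > "$path"
}

# Usage on the VM (as root), with the placeholder values from the post:
# write_cifs_creds /etc/plex.cred plex mypassword
```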

Then "sudo mount -a" to mount everything listed in fstab.

On my Docker LXC, user 'plex' is part of 'plexusers' with rwx permissions.

I'm not sure what else is different after this point. I rebooted a few times in here, tried a bunch of other fstab changes and config changes.

I have just installed Jellyfin on Proxmox and connected it to a Synology NAS, which is also running on another instance of Proxmox. From the Jellyfin LXC shell I can see and touch the files on the NAS. However, the Jellyfin web interface is blank. Any suggestions or support would be much appreciated.

*** SOLVED *** I installed Samba onto Proxmox via the shell and that seemed to do the trick. However, I now have the same issue as some of the others here: Jellyfin can see the mounted folder but none of the subfolders.

Hi, I am trying to follow the guide, but when I get to step three (setting up the share on the host) I get this error message:

Code:

root@homelab-n01:~# mount /mnt/lxc_shares/FS01

mount error(111): could not connect to 192.168.1.165
Unable to find suitable address.

If I try to access it using smbclient, I get this error message:

Code:

root@homelab-n01:~# smbclient //192.168.1.165/FS01

Password for [WORKGROUP\root]:

do_connect: Connection to 192.168.1.165 failed (Error NT_STATUS_CONNECTION_REFUSED)

root@homelab-n01:~#

I can see and access the share fine from both my Mac and my Windows PC.

Any help would be greatly appreciated.
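Both `mount error(111)` and `NT_STATUS_CONNECTION_REFUSED` say the TCP connection itself was refused, so the likelier culprits are reachability (wrong IP, SMB service not listening on that address, or a firewall rejecting the port) rather than the share's permissions. A hedged sketch for testing whether anything accepts connections on the SMB port (445) from the Proxmox host; it relies on bash's `/dev/tcp` redirection and coreutils `timeout`, and `port_open` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a TCP connection to host:port can be opened.
# bash's /dev/tcp redirection does the connect; `timeout` stops a hang on
# unreachable hosts. Arguments: host, port (default 445), seconds (default 3).
port_open() {
  local host=$1 port=${2:-445} secs=${3:-3}
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Usage from the Proxmox host shell (IP from the post):
# if port_open 192.168.1.165 445; then echo "445 reachable"; else echo "445 refused or unreachable"; fi
```

If this fails from the host while the Mac and Windows PC connect fine, comparing the IP and network path they use with the one in fstab is a reasonable next step.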

I have a similar problem. I can see the contents of my shared folders when I use the Jellyfin LXC shell to browse to them, but in the Jellyfin GUI I can see the folders I've created but not their contents. Does anyone have an idea what I've missed? I'd say it's a read/write issue, but I can modify files fine when I use the shell to access them.

The most amazing part of how difficult this seems to be is that the shares I'm trying to get my LXC containers to access are open shares with no security on them. Anyone on my network can access the share with any computer...except of course any service running in an LXC container on Proxmox. Seems like Proxmox is making this harder than it needs to be.

I'm struggling quite a lot with this and wondering if anyone can help. I followed the instructions exactly, but I get the following error when I try to mount on the host. I've looked everywhere but can't seem to work out what the problem is!

mount error(13): Permission denied

Refer to the mount.cifs(8) manual page (e.g. man mount.cifs) and kernel log messages (dmesg)

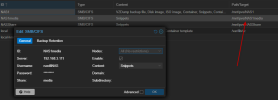
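Error 13 usually means the server or the CIFS client rejected access, so the username and password are the first suspects, and the kernel log the message points to (`dmesg | grep -i cifs` on the host) often shows a more specific reason. One concrete pitfall: the mount.cifs(8) man page warns that a password containing the option delimiter (a comma) may not parse correctly on the command line, while a credentials file does not have that problem; whitespace also splits fstab fields. A small sketch that flags passwords risky to put inline as `pass=`; `inline_password_ok` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: return 0 if a password looks safe to put inline as
# pass=... in /etc/fstab, 1 if it should go into a credentials= file instead.
inline_password_ok() {
  case $1 in
    *,*) return 1 ;;            # comma: the mount option delimiter
    *[[:space:]]*) return 1 ;;  # whitespace: the fstab field separator
    *) return 0 ;;
  esac
}

# Usage (SMB_PASS is a placeholder variable):
# inline_password_ok "$SMB_PASS" || echo "use credentials=/path/to/file instead"
```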

Instead of creating the mount point on each PVE host under (2.), use the Datacenter: under "Storage", do Add -> SMB/CIFS.

You will find the mounts at /mnt/pve/[ID you have chosen in the dialog] on each Proxmox node. By adding ",shared=1" after the bind mount in LXC_ID.conf, you can migrate the container.

Any more details on this approach, please? Sounds MUCH easier.

Well: Step 2 (see the beginning of this thread) is replaced by adding an SMB/CIFS entry under "Storage" in the Datacenter, with an ID of your choice.

The indicated mount point is also available when migrating, after you manually edit LXC_ID.conf and add ",shared=1". Do this in the shell of the node the container resides on, in /etc/pve/lxc/[ID of container].conf.
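For reference, such a bind mount is a single `mpN:` line in `/etc/pve/lxc/<CTID>.conf`, and `shared=1` marks the host path as present on every node (Proxmox does not share it for you; the datacenter storage does). A minimal sketch that prints the line; the helper name, mount point index and paths in the usage are illustrative assumptions, with `/mnt/pve/<ID>` being where the datacenter SMB/CIFS storage is mounted:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a bind-mount line for /etc/pve/lxc/<CTID>.conf.
# shared=1 tells Proxmox the host path already exists on all nodes, so the
# bind mount does not block migration.
lxc_mp_line() {
  local idx=$1 host_path=$2 ct_path=$3
  printf 'mp%s: %s,mp=%s,shared=1\n' "$idx" "$host_path" "$ct_path"
}

# Illustrative usage (storage ID "nas" and container path are assumptions):
# lxc_mp_line 0 /mnt/pve/nas /mnt/nas
# prints: mp0: /mnt/pve/nas,mp=/mnt/nas,shared=1
```

Instead of editing the file by hand, `pct set <CTID> -mp0 /mnt/pve/nas,mp=/mnt/nas,shared=1` should write the same entry.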

Made an account to correct this error, in case anyone else stumbles across this thread from a search engine. This mounts the share as SMB/CIFS storage in the Datacenter for specific content types, such as ISOs, backups, etc. It doesn't accomplish what we're all trying to do in this thread, which is to access CIFS shares in unprivileged containers.

Working solution if your Plex won't see the files! Thanks!

> I ran into this same issue. It's a permissions issue, and I fixed it with the following (change dir_mode and file_mode):
>
> Code:
>
> { echo '' ; echo '# Mount CIFS share on demand with rwx permissions for use in LXCs (manually added)' ; echo '//NAS/nas/ /mnt/lxc_shares/nas_rwx cifs _netdev,x-systemd.automount,noatime,uid=100000,gid=110000,dir_mode=0777,file_mode=0774,user=smb_username,pass=smb_password 0 0' ; } | tee -a /etc/fstab
>
> Note that I didn't say the way I fixed it is the best/most secure way, just that Plex can see the subfolders now.
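`dir_mode` and `file_mode` are octal permission modes that the CIFS client applies to directories and files on the mount when the server does not supply Unix permissions, which is typically the case for a NAS share. A small sketch that decodes such a value into the familiar `rwx` string, to see what `0777`/`0774` grant to owner, group and others; `mode_to_rwx` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn an octal mode such as 0774 into "rwxrwxr--".
mode_to_rwx() {
  local mode=$(( 0$1 )) out="" shift_by bits  # leading 0 forces octal
  for shift_by in 6 3 0; do                    # owner, group, others
    bits=$(( (mode >> shift_by) & 7 ))
    if [ $(( bits & 4 )) -ne 0 ]; then out="${out}r"; else out="${out}-"; fi
    if [ $(( bits & 2 )) -ne 0 ]; then out="${out}w"; else out="${out}-"; fi
    if [ $(( bits & 1 )) -ne 0 ]; then out="${out}x"; else out="${out}-"; fi
  done
  printf '%s\n' "$out"
}

mode_to_rwx 0777  # rwxrwxrwx (dir_mode in the fstab line above)
mode_to_rwx 0774  # rwxrwxr-- (file_mode in the fstab line above)
```

With `uid=100000,gid=110000` the owner is container root and the group is container `lxc_shares`, so a tighter choice such as `0770` would limit access to those two, at the cost of requiring the media server's user to actually be in `lxc_shares`.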

It is indeed true that there will be an empty directory (snippets in my case) in the filesystem on the NAS. Also, the filesystem shared that way can in principle be mounted in all containers running in the datacenter. For me these disadvantages are not blocking, as I privately manage my containers, and the advantage is that my NAS is now very easily accessible: just one mount from the cluster CIFS directory of choice into a local directory (step 4), and adding ",shared=1" as described in /etc/pve/lxc/[id].conf on the node on which the container resides.

I followed this technique to have my music files in a Plex server and my books in various Calibre-Web services, and it works for me.

Are there disadvantages to this technique that I am not aware of?

Just wanted to chime in that the steps in the OP (mostly*) worked for me, BUT I was trying to fix the perms for:

> Direct-attached storage (DAS)

> Connected via USB3 to my mini-PC Proxmox host v8.2.2

> That was already mounted inside my LXCs (separately running Runtipi and Plex)

So for a NAS setup, skip my /etc/fstab entries below (use the ones from the OP).

Paraphrasing from the OP for a DAS setup:

---

- Follow the OP tutorial steps inside the container(s)
- I double-checked the perms on my mounts with `ls -lah /mnt` ← `mnt` = parent directory of my mount location
  - Found they were indeed owned by `nobody:nogroup`
- Create the group and add your user(s) to the group
  - For me it was `sudo usermod -a -G lxc_shares usernamehere`
  - Then log out and log back in (twice for me)
  - Run `groups username` to see if your user has been added to the group
- Stop any LXC containers that already have the drive mounted to them
  - Disconnect any other network shares to the drives ← needed, at least for me, for a later step
- Unmount the drives on your host shell using `umount /mount/location/here`
  - This is where disconnecting earlier helps; otherwise it may fail because the "drive is busy"
  - *Using lazy unmounting (`umount -l /mount/second/drive`) ended up deferring the unmounting, so maybe avoid that..?
- On the host, I prefer to edit the `/etc/fstab` file directly via a separate terminal rather than the Proxmox Web UI: `nano /etc/fstab`
  - For the NTFS-formatted DAS drive:
    `UUID=XXXXXXXXX /mount/location/here ntfs-3g rw,uid=100000,gid=110000,dmask=0000,fmask=0000,noatime,nofail 0 0`
    - Note that it says `ntfs-3g`? That took me hours to figure out was needed for my Debian host setup, so try and install that from your package manager; in my case it was `apt install -y ntfs-3g`
  - For the exFAT-formatted DAS drive:
    `UUID=XXXX-XXXX /mount/second/drive exfat rw,exec,uid=100000,gid=110000,dmask=0000,fmask=0000,noatime,nofail 0 0`
- After any change to `/etc/fstab`, update your system with `systemctl daemon-reload`
  - Then re-mount your drives with `mount -a` to apply all `/etc/fstab` entries
- If the drives are not already added to LXCs, use the Web UI to add them as Directories, then to the LXCs, or follow the OP (I already had them mounted)
- Start the containers back up
- Run `ls -lah /mount/` (on the parent directory, in case you mounted multiple drives)
  - The drives should now be mounted as `root:lxc_shares` instead of `nobody:nogroup`

---

Side-notes:

- Look up how to add devices using their `UUID`/`PARTUUID` to ensure they persist, since mounting `/dev/sdxX` can sometimes fail if there's ever a disconnect/reconnect
- I was editing an existing `/etc/fstab` entry that took me hours to put together, so I didn't update `dmask` and `fmask` to the `dir_mode` and `file_mode` key=value format, respectively
- I needed this approach because the Immich self-hosted photo backup app did NOT play nicely with the `nobody:nogroup` perms
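On the `dmask`/`fmask` side-note: for `ntfs-3g` and `exfat`, these options are masks of permission bits to remove, whereas the CIFS `dir_mode`/`file_mode` options give the resulting mode directly, so `dmask=0000` corresponds to a mode of `0777`. A small sketch of that conversion; `mask_to_mode` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a umask-style value (dmask/fmask) into the mode
# it leaves, starting from full rwx for everyone (0777). Leading 0 = octal.
mask_to_mode() {
  printf '%04o\n' $(( 0777 & ~0$1 ))
}

mask_to_mode 0000  # 0777 (the dmask/fmask used in the fstab lines above)
mask_to_mode 0022  # 0755
mask_to_mode 0027  # 0750
```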

Also, regarding passing these mounts to a Docker container within the LXC, this helped! Thanks

> If anyone else is having this issue, I set the PUID and PGID of the Docker images both to 0 (which is the output of `id root` on the unprivileged Docker host). I doubt this is best practice; better would be to add another user with fewer permissions to the host (also added to the LXC group) and use that user's PUID/PGID.
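Following the suggestion of a less-privileged user in the shared group instead of `0`, the PUID/PGID values are simply that user's numeric UID and the group's GID inside the LXC. A hedged sketch that prints them in the `PUID=`/`PGID=` form such images read from their environment; `docker_ids` and the user name in the usage are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print PUID/PGID for a user and a (possibly supplementary)
# group, e.g. for a docker-compose "environment:" section inside the LXC.
docker_ids() {
  local user=$1 group=$2 uid gid
  uid=$(id -u "$user") || return 1
  gid=$(getent group "$group" | cut -d: -f3)
  [ -n "$gid" ] || { echo "group $group not found" >&2; return 1; }
  printf 'PUID=%s\nPGID=%s\n' "$uid" "$gid"
}

# Inside the Docker LXC, for a hypothetical user "media" in lxc_shares:
# docker_ids media lxc_shares
```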

And I echo Ryan_Malone's statement above:

> Personally, I'm running into permissions issues with this method. I added my user to lxc_shares/10000, but the mounts are coming through as nobody:nogroup (65534:65534) instead, meaning I only have rx permissions. I imagine adding the mount to fstab instead of the GUI would give me more control over perms. Any thoughts on this?
>
> EDIT: Just redid this with fstab instead of the GUI and everything worked out of the box. I suspect we should be adding replicate=0 to the bind mount in LXC_ID.conf, so that if you use replication for backup you aren't duplicating your NAS.
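Ryan_Malone's edit reports that a host `/etc/fstab` entry worked where the GUI storage did not. A hedged sketch that assembles such an entry from container-side IDs, modelled on the fstab line quoted earlier in the thread but pointing at a `credentials=` file so the password does not sit in `/etc/fstab`; the helper name, share, paths and `0770` modes are illustrative assumptions, and the 100000 offset assumes the default unprivileged ID mapping:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a host /etc/fstab line for a CIFS share that an
# unprivileged LXC will see as owned by <ct_uid>:<ct_gid>.
# ASSUMES the default mapping: container id N -> host id 100000+N.
cifs_fstab_line() {
  local share=$1 mountpoint=$2 creds=$3 ct_uid=$4 ct_gid=$5
  local host_uid=$(( 100000 + ct_uid )) host_gid=$(( 100000 + ct_gid ))
  printf '%s %s cifs _netdev,x-systemd.automount,noatime,uid=%s,gid=%s,dir_mode=0770,file_mode=0770,credentials=%s 0 0\n' \
    "$share" "$mountpoint" "$host_uid" "$host_gid" "$creds"
}

# Container root (0) as owner, container lxc_shares (10000) as group:
cifs_fstab_line //NAS/nas /mnt/lxc_shares/nas_rwx /root/.smbcredentials 0 10000
```

As in the DAS steps, the line can be appended with `tee -a /etc/fstab`, followed by `systemctl daemon-reload` and a mount of the new mount point.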
