Hi chaps,

Having a strange issue where my Intel X540-T2 NIC won't seem to show its full capabilities inside my TrueNAS SCALE VM. It's using a VirtIO driver and is connected to a 10Gb switch.

On the Proxmox host, ethtool shows the correct link mode, duplex and speed. Inside TrueNAS, ethtool shows everything as unknown, and transfers from my PC (which uses the same NIC at 10Gb) seem to be stuck at 1Gb speeds.

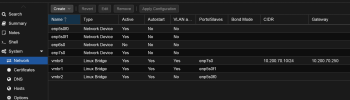

`qm config` shows: `net0: virtio=FE:E0:94:C4:50:8C,bridge=vmbr1,queues=4,tag=60`
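As a quick sanity check on that `net0` line, the options can be split out to confirm that no `rate` limit (Proxmox's per-NIC bandwidth cap) is set on the interface. This is a minimal Python sketch that assumes the usual Proxmox layout of `model=MAC` followed by comma-separated `key=value` options; `parse_net_line` is a hypothetical helper, not part of Proxmox.

```python
def parse_net_line(value: str) -> dict[str, str]:
    """Split a Proxmox netN value into its comma-separated options.

    Hypothetical helper: assumes the first option is <model>=<MAC>
    and every later option is key=value.
    """
    options: dict[str, str] = {}
    for i, part in enumerate(value.split(",")):
        key, _, val = part.partition("=")
        if i == 0:
            # First entry is the NIC model and its MAC address
            options["model"] = key
            options["macaddr"] = val
        else:
            options[key] = val
    return options


net0 = parse_net_line("virtio=FE:E0:94:C4:50:8C,bridge=vmbr1,queues=4,tag=60")
print(net0)
# A 'rate' key would mean Proxmox itself is throttling this NIC
print("rate limit set:", "rate" in net0)
```

For this config there is no `rate` key, so a Proxmox-side bandwidth cap doesn't appear to be the cause.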

Inside the host, ethtool shows everything as "not reported"

VirtIO drivers are installed by default in SCALE.

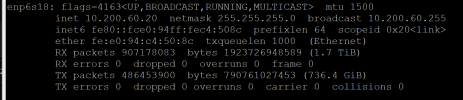

This is from TrueNAS

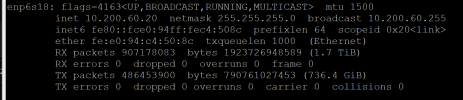

This is the host NIC (bridged):

Nothing particularly special about the setup: standard MTU on everything, no jumbo frames, etc.

iperf results to the VM from my 10Gb desktop:

The disk array inside TrueNAS is three 8-wide RAIDZ2 vdevs of 14TB disks, so there is plenty of throughput to saturate a 10Gb link from my local NVMe SSD.
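A rough back-of-envelope check of that claim: a 10Gb link tops out around 1250 MB/s, while a 1Gb link tops out around 125 MB/s, so transfers plateauing near the latter point at a 1Gb bottleneck somewhere in the path. The sketch below assumes writes stripe evenly across all data disks and ignores ZFS, SMB/NFS and TCP overheads, so treat the numbers as ballpark only.

```python
LINK_GBIT = 10        # link speed from the post, in Gbit/s
VDEVS = 3             # three RAIDZ2 vdevs
DISKS_PER_VDEV = 8    # eight 14TB disks per vdev
RAIDZ2_PARITY = 2     # RAIDZ2 uses two disks' worth of each vdev for parity

# Convert Gbit/s to decimal MB/s (8 bits per byte), ignoring protocol overhead
link_mb_per_s = LINK_GBIT * 1000 / 8
data_disks = VDEVS * (DISKS_PER_VDEV - RAIDZ2_PARITY)
# Assumes an even sequential stripe across every data disk
per_disk_needed = link_mb_per_s / data_disks

print(f"10Gb link ceiling: {link_mb_per_s:.0f} MB/s")
print(f"1Gb link ceiling: {1 * 1000 / 8:.0f} MB/s")
print(f"data disks: {data_disks}")
print(f"sequential write needed per data disk: {per_disk_needed:.1f} MB/s")
```

With 18 data disks, each only needs to sustain roughly 70 MB/s to fill the 10Gb link, which is well within what modern 14TB drives manage sequentially.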

Any ideas?

Many thanks

EDIT: Just to add, 10Gb worked fine until I virtualised the system.