I spent the better part of last night trying to figure out what was wrong and gave up around midnight. Then I realized I needed to RTFM: https://pve.proxmox.com/pve-docs/chapter-pvesm.html

The problem stemmed from the lvconvert command above not combining my data and metadata LVs like it was supposed to.

There was a lot of troubleshooting in between what I'm showing here, but it was getting tedious, so I blew everything away and started over with pvesm remove and vgremove.

Now I start fresh, making the LV smaller this time since I thought its size was part of the issue:

Code:

root@psivmh-snh-001:~# lvcreate -i 2 -m 1 --type raid10 -L 1T -n SAS_LV SAS_VG

Using default stripesize 64.00 KiB.

Logical volume "SAS_LV" created.

root@psivmh-snh-001:~# lvcreate -i 2 -m 1 --type raid10 -L 15G -n SAS_META_LV SAS_VG

Using default stripesize 64.00 KiB.

Logical volume "SAS_META_LV" created.

root@psivmh-snh-001:~# lvconvert --thinpool SAS_VG/SAS_LV --poolmetadata SAS_VG/SAS_META_LV

Thin pool volume with chunk size 64.00 KiB can address at most 15.81 TiB of data.

WARNING: Converting SAS_VG/SAS_LV and SAS_VG/SAS_META_LV to thin pool's data and metadata volumes with metadata wiping.

THIS WILL DESTROY CONTENT OF LOGICAL VOLUME (filesystem etc.)

Do you really want to convert SAS_VG/SAS_LV and SAS_VG/SAS_META_LV? [y/n]: y

Converted SAS_VG/SAS_LV and SAS_VG/SAS_META_LV to thin pool.
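For anyone repeating this on their own hardware, here is a minimal sketch that only prints the three commands from the session above so they can be reviewed before running anything. The function name plan_thin_pool and its argument order are my own placeholders; the flags are copied from the commands I ran.

```shell
# Print (do not run) the commands used above to build a thin pool on RAID10.
# plan_thin_pool is a hypothetical helper; review its output before running it.
plan_thin_pool() {
    vg=$1; data_lv=$2; meta_lv=$3; data_size=$4; meta_size=$5
    # Data LV and metadata LV, both RAID10 with 2 stripes and 1 mirror copy
    echo "lvcreate -i 2 -m 1 --type raid10 -L $data_size -n $data_lv $vg"
    echo "lvcreate -i 2 -m 1 --type raid10 -L $meta_size -n $meta_lv $vg"
    # Combine the two LVs into one thin pool (this wipes both LVs)
    echo "lvconvert --thinpool $vg/$data_lv --poolmetadata $vg/$meta_lv"
}

plan_thin_pool SAS_VG SAS_LV SAS_META_LV 1T 15G
```

Printing instead of executing is deliberate, since lvconvert destroys whatever is on the two LVs.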

LVM seems to have combined the LVs:

Code:

root@psivmh-snh-001:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

SAS_LV SAS_VG twi-a-tz-- 1.00t 0.00 0.14

data pve twi-a-tz-- 59.66g 0.00 1.59

root pve -wi-ao---- 27.75g

swap pve -wi-ao---- 8.00g

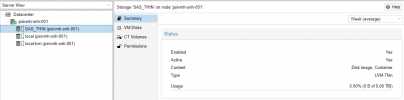

And now Proxmox shows my joined LV in the LVM-Thin space! However, it's not showing up properly in the web GUI, and pvesm status doesn't show it either:

Code:

root@psivmh-snh-001:~# pvesm status

Name Type Status Total Used Available %

local dir active 28510260 2551800 24487180 8.95%

local-lvm lvmthin active 62562304 0 62562304 0.00%

Use pvesm add:

Code:

root@psivmh-snh-001:~# pvesm add lvmthin SAS_THIN --thinpool SAS_LV --vgname SAS_VG

root@psivmh-snh-001:~# pvesm status

Name Type Status Total Used Available %

SAS_THIN lvmthin active 1073741824 0 1073741824 0.00%

local dir active 28510260 2551892 24487088 8.95%

local-lvm lvmthin active 62562304 0 62562304 0.00%
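If you'd rather script this check than eyeball the table, a rough sketch follows. It assumes the column layout shown in the pvesm status output above (name in column 1, status in column 3); storage_is_active is a name I made up.

```shell
# Succeeds if the named storage is listed as "active" in `pvesm status`
# output read from stdin. Assumes columns: Name Type Status Total Used ...
storage_is_active() {
    awk -v name="$1" '$1 == name && $3 == "active" { found = 1 } END { exit !found }'
}

# Usage on the Proxmox host:
#   pvesm status | storage_is_active SAS_THIN && echo "SAS_THIN is active"
```

It reads from stdin, so it can also be fed a saved copy of the output.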

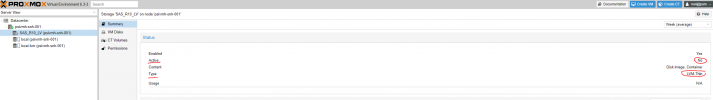

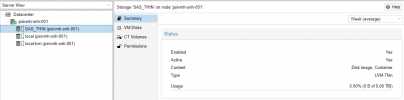

pvesm now reports it as active with the expected space! The GUI shows it in the left-hand server section as well:

Now I want to extend the LV so I can use all of my storage. Be careful here, there's math involved.

Since we're doing RAID 10, I can't actually use the full 10.92TB that vgs thinks is available:

Code:

root@psivmh-snh-001:~# vgs

VG #PV #LV #SN Attr VSize VFree

SAS_VG 4 1 0 wz--n- <10.92t 8.87t

I only have about 5.45TB usable, since RAID10 mirrors everything and the usable space is the total capacity of half of my drives. I must size against this amount instead of the 10.92TB total, because a volume sized to the full 10.92TB would need more physical space than the RAID10 has and would cause many problems. I think LVM would stop any real damage from being done, but just to be on the safe side, I will shoot for a 5TB volume. Now I extend my data to 5TB:
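The halving above can be sketched as a one-liner. This assumes a RAID10 with a single mirror copy (-m 1), so every block is stored twice; raid10_usable_tib is just a name I picked. It gives 5.46 for the rounded 10.92 that vgs prints, which is why I say "about 5.45" given that vgs shows the real size as slightly under 10.92t.

```shell
# Usable capacity of a RAID10 LV with one mirror copy (-m 1):
# every block is written twice, so usable space is raw space / 2.
raid10_usable_tib() {
    awk -v raw="$1" 'BEGIN { printf "%.2f\n", raw / 2 }'
}

raid10_usable_tib 10.92   # prints 5.46
```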

Code:

lvextend -L 5T SAS_VG/SAS_LV

Extending 2 mirror images.

Size of logical volume SAS_VG/SAS_LV_tdata changed from 1.00 TiB (262144 extents) to 5.00 TiB (1310720 extents).

Logical volume SAS_VG/SAS_LV_tdata successfully resized.

root@psivmh-snh-001:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

SAS_LV SAS_VG twi-a-tz-- 5.00t 0.00 0.24

data pve twi-a-tz-- 59.66g 0.00 1.59

root pve -wi-ao---- 27.75g

swap pve -wi-ao---- 8.00g

Now we need to extend the metadata size. If we don't do this, LVM won't know how to reference our RAID volume once it fills up the metadata section. Please reference this section of the RedHat guide to LVM:

For each RAID image, every 500MB data requires 4MB of additional storage space for storing the integrity metadata.

4/500 is 0.008 (0.8%), which is the fraction of your volume that needs to be reserved for metadata. My total is 5TB, so we multiply 5,000,000MB by 0.008 to get 40,000MB, or 40GB, needed for metadata storage.
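Here is the same arithmetic as a small sketch, in case you want to plug in your own volume size. It only applies the 4MB per 500MB ratio from the quote above; meta_reserve_mb is a made-up name, and the input is the data size in MB.

```shell
# Metadata reserve using the 4 MB per 500 MB ratio quoted above.
# Argument: data size in MB. Output: metadata size in MB (integer).
meta_reserve_mb() {
    echo $(( $1 * 4 / 500 ))
}

meta_reserve_mb 5000000   # prints 40000, i.e. about 40 GB
```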

But how do we mess with the now baked-in metadata size?

We can see how the data is split across the disks (including metadata size) through lvs -a:

Code:

root@psivmh-snh-001:~# lvs -a

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

SAS_LV SAS_VG twi-a-tz-- 5.00t 0.00 0.24

[SAS_LV_tdata] SAS_VG rwi-aor--- 5.00t 20.23

[SAS_LV_tdata_rimage_0] SAS_VG Iwi-aor--- 2.50t

[SAS_LV_tdata_rimage_1] SAS_VG Iwi-aor--- 2.50t

[SAS_LV_tdata_rimage_2] SAS_VG Iwi-aor--- 2.50t

[SAS_LV_tdata_rimage_3] SAS_VG Iwi-aor--- 2.50t

[SAS_LV_tdata_rmeta_0] SAS_VG ewi-aor--- 4.00m

[SAS_LV_tdata_rmeta_1] SAS_VG ewi-aor--- 4.00m

[SAS_LV_tdata_rmeta_2] SAS_VG ewi-aor--- 4.00m

[SAS_LV_tdata_rmeta_3] SAS_VG ewi-aor--- 4.00m

[SAS_LV_tmeta] SAS_VG ewi-aor--- 15.00g 100.00

[SAS_LV_tmeta_rimage_0] SAS_VG iwi-aor--- 7.50g

[SAS_LV_tmeta_rimage_1] SAS_VG iwi-aor--- 7.50g

[SAS_LV_tmeta_rimage_2] SAS_VG iwi-aor--- 7.50g

[SAS_LV_tmeta_rimage_3] SAS_VG iwi-aor--- 7.50g

[SAS_LV_tmeta_rmeta_0] SAS_VG ewi-aor--- 4.00m

[SAS_LV_tmeta_rmeta_1] SAS_VG ewi-aor--- 4.00m

[SAS_LV_tmeta_rmeta_2] SAS_VG ewi-aor--- 4.00m

[SAS_LV_tmeta_rmeta_3] SAS_VG ewi-aor--- 4.00m

[lvol0_pmspare] SAS_VG ewi------- 15.00g

The SAS_LV_tmeta and lvol0_pmspare LVs are the metadata and the spare copy (mirror) of the metadata, respectively. We can extend the metadata size from 15G to 40G with this command (lvextend reference):

Code:

lvextend --poolmetadatasize +25G SAS_VG/SAS_LV

Extending 2 mirror images.

Size of logical volume SAS_VG/SAS_LV_tmeta changed from 15.00 GiB (3840 extents) to 40.00 GiB (10240 extents).

Logical volume SAS_VG/SAS_LV_tmeta successfully resized.

HOWEVER, this does not resize our mirror of the metadata, leaving it at 15GB. We need to resize that too. LVM doesn't seem to do that on its own, so we must take the pool offline and then tell it to repair the thin LV, which will fix it:

Code:

root@psivmh-snh-001:~# lvconvert --repair SAS_VG/SAS_LV

Active pools cannot be repaired. Use lvchange -an first.

root@psivmh-snh-001:~# lvchange -an SAS_VG/SAS_LV

root@psivmh-snh-001:~# lvconvert --repair SAS_VG/SAS_LV

WARNING: LV SAS_VG/SAS_LV_meta0 holds a backup of the unrepaired metadata. Use lvremove when no longer required.

WARNING: New metadata LV SAS_VG/SAS_LV_tmeta might use different PVs. Move it with pvmove if required.

root@psivmh-snh-001:~# lvs -a

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

SAS_LV SAS_VG twi---tz-- 5.00t

SAS_LV_meta0 SAS_VG rwi---r--- 40.00g

[SAS_LV_meta0_rimage_0] SAS_VG Iwi---r--- 20.00g

[SAS_LV_meta0_rimage_1] SAS_VG Iwi---r--- 20.00g

[SAS_LV_meta0_rimage_2] SAS_VG Iwi---r--- 20.00g

[SAS_LV_meta0_rimage_3] SAS_VG Iwi---r--- 20.00g

[SAS_LV_meta0_rmeta_0] SAS_VG ewi---r--- 4.00m

[SAS_LV_meta0_rmeta_1] SAS_VG ewi---r--- 4.00m

[SAS_LV_meta0_rmeta_2] SAS_VG ewi---r--- 4.00m

[SAS_LV_meta0_rmeta_3] SAS_VG ewi---r--- 4.00m

[SAS_LV_tdata] SAS_VG rwi---r--- 5.00t

[SAS_LV_tdata_rimage_0] SAS_VG Iwi---r--- 2.50t

[SAS_LV_tdata_rimage_1] SAS_VG Iwi---r--- 2.50t

[SAS_LV_tdata_rimage_2] SAS_VG Iwi---r--- 2.50t

[SAS_LV_tdata_rimage_3] SAS_VG Iwi---r--- 2.50t

[SAS_LV_tdata_rmeta_0] SAS_VG ewi---r--- 4.00m

[SAS_LV_tdata_rmeta_1] SAS_VG ewi---r--- 4.00m

[SAS_LV_tdata_rmeta_2] SAS_VG ewi---r--- 4.00m

[SAS_LV_tdata_rmeta_3] SAS_VG ewi---r--- 4.00m

[SAS_LV_tmeta] SAS_VG ewi------- 40.00g

[lvol1_pmspare] SAS_VG ewi------- 40.00g

Another problem. We got two warning messages: one about potentially using different PVs (which is not a concern for me, since everything related to this is in SAS_VG, so I can safely ignore it), and the other telling us to remove the backup LVM made of the pre-repair metadata.

Simple enough since it shows up as a top-level LV now:

Code:

root@psivmh-snh-001:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

SAS_LV SAS_VG twi---tz-- 5.00t

SAS_LV_meta0 SAS_VG rwi---r--- 40.00g

data pve twi-a-tz-- 59.66g 0.00 1.59

root pve -wi-ao---- 27.75g

swap pve -wi-ao---- 8.00g

root@psivmh-snh-001:~# lvremove SAS_VG/SAS_LV_meta0

Do you really want to remove and DISCARD logical volume SAS_VG/SAS_LV_meta0? [y/n]: y

Logical volume "SAS_LV_meta0" successfully removed

Last, we reactivate our pool since we needed to deactivate it to fix it...

Code:

root@psivmh-snh-001:~# lvchange -ay SAS_VG/SAS_LV

root@psivmh-snh-001:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

SAS_LV SAS_VG twi-a-tz-- 5.00t 0.00 0.23

data pve twi-a-tz-- 59.66g 0.00 1.59

root pve -wi-ao---- 27.75g

swap pve -wi-ao---- 8.00g

root@psivmh-snh-001:~# lvs -a

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

SAS_LV SAS_VG twi-a-tz-- 5.00t 0.00 0.23

[SAS_LV_tdata] SAS_VG rwi-aor--- 5.00t 25.88

[SAS_LV_tdata_rimage_0] SAS_VG Iwi-aor--- 2.50t

[SAS_LV_tdata_rimage_1] SAS_VG Iwi-aor--- 2.50t

[SAS_LV_tdata_rimage_2] SAS_VG Iwi-aor--- 2.50t

[SAS_LV_tdata_rimage_3] SAS_VG Iwi-aor--- 2.50t

[SAS_LV_tdata_rmeta_0] SAS_VG ewi-aor--- 4.00m

[SAS_LV_tdata_rmeta_1] SAS_VG ewi-aor--- 4.00m

[SAS_LV_tdata_rmeta_2] SAS_VG ewi-aor--- 4.00m

[SAS_LV_tdata_rmeta_3] SAS_VG ewi-aor--- 4.00m

[SAS_LV_tmeta] SAS_VG ewi-ao---- 40.00g

[lvol1_pmspare] SAS_VG ewi------- 40.00g

data pve twi-a-tz-- 59.66g 0.00 1.59

[data_tdata] pve Twi-ao---- 59.66g

[data_tmeta] pve ewi-ao---- 1.00g

[lvol0_pmspare] pve ewi------- 1.00g

root pve -wi-ao---- 27.75g

swap pve -wi-ao---- 8.00g
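To double-check that the spare now matches the metadata LV without reading the whole table, here is a rough sketch that parses lvs -a output. It assumes the column order shown above (LV, VG, Attr, LSize) and the bracketed names LVM prints for hidden LVs; spare_matches_meta is a hypothetical helper, not an LVM command.

```shell
# Reads `lvs -a` output on stdin and succeeds only if the pool's metadata LV
# and the pmspare LV in the same VG report the same LSize (column 4).
spare_matches_meta() {
    awk -v vg="$1" -v pool="$2" '
        $2 == vg && $1 == "[" pool "_tmeta]"            { meta = $4 }
        $2 == vg && $1 ~ /^\[lvol[0-9]+_pmspare\]$/     { spare = $4 }
        END { exit !(meta != "" && meta == spare) }
    '
}

# Usage on the host:
#   lvs -a | spare_matches_meta SAS_VG SAS_LV && echo "spare matches metadata"
```

It compares the sizes as printed (for example 40.00g), so it only makes sense when both lines come from the same lvs run.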

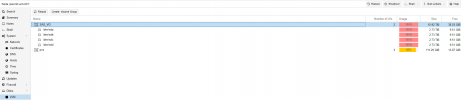

Now we go look at the Proxmox web GUI: