I do not think that you are running into the same issue as you are running older versions. Please upgrade to the latest packages available and see if the issue persists.

Hi, everybody, Very interesting, but I have similar problems with backups:

1. Unrelated drawdown in backup speed

2. Timeouts during the backup start

I am using this PBS version and kernel:

ii proxmox-backup-server 3.4.8-1 amd64 Proxmox Backup Server daemon with tools and GUI

6.8.12-8-pve

[SOLVED] Super slow, timeout, and VM stuck while backing up, after updated to PVE 9.1.1 and PBS 4.0.20

- Thread starter Heracleos

This does not sound like the same issue as reported in this thread. Please check the systemd journal on the PBS side. Further, make sure you have enough memory available on the PBS and check the io pressure.

Hello,

I've been having stalls on PVE as well. I'm a new Proxmox user and I never installed it on the desktop I'm using. My setup is PVE on a desktop with an Intel i5 and 16GB RAM, a consumer SSD that holds PVE, the LXCs, the VMs and PBS (running as an LXC), and a WD Red 6TB HDD. PBS has 2 cores and 2GB RAM. Backups are stored on the 6TB HDD, and verification is ON. To troubleshoot the stalls I set up a frequent backup schedule for 3 VMs.

The kernel I was using was 6.17.4-1-pve. After seeing this post I moved back to kernel 6.14.11-4-pve, but I still experience the same stalls. I will try the test kernel and report back.

Bash:
proxmox-boot-tool kernel list
Manually selected kernels:
None.
Automatically selected kernels:
6.14.11-4-pve
6.17.4-1-pve
Pinned kernel:
6.14.11-4-pve
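Since the stalls are being compared across two kernels, it can help to confirm that the pinned kernel is the one actually booted. The following is only a sketch: it assumes the `proxmox-boot-tool kernel list` output has the layout shown above with one entry per line, and `pinned_kernel` is a hypothetical helper name, not part of any Proxmox tool.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the version listed under "Pinned kernel:".
# Reads `proxmox-boot-tool kernel list` output on stdin and assumes the
# version is the first non-empty line after that heading.
pinned_kernel() {
    awk '
        found && NF { print $1; exit }
        /^Pinned kernel:/ { found = 1 }
    '
}

# Usage on a PVE host (not executed here):
#   pinned="$(proxmox-boot-tool kernel list | pinned_kernel)"
#   [ "$pinned" = "$(uname -r)" ] || echo "running $(uname -r), pinned $pinned"
```

If the two values differ, the host has most likely not been rebooted since the pin was set.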

Stalls happen especially during or after backups. When it happens PVE, VMs, LXCs are unreachable, cannot be pinged. Caps Lock doesn't work. The connected monitor over HDMI doesn't receive signal.

list-boots:

Bash:
-1 f6f11f11cbaf4512b6f3ccaadd7f423b Sat 2026-01-03 00:43:56 +03 Sat 2026-01-03 16:40:59 +03

journalctl until the crash happens:

Bash:
Oca 03 16:40:51 pve kernel: veth114i0: entered allmulticast mode
Oca 03 16:40:51 pve kernel: veth114i0: entered promiscuous mode
Oca 03 16:40:51 pve kernel: eth0: renamed from vethgEWLqB
Oca 03 16:40:51 pve pvescheduler[1469364]: INFO: Finished Backup of VM 114 (00:00:18)
Oca 03 16:40:52 pve pvescheduler[1469364]: INFO: Starting Backup of VM 1001 (lxc)
Oca 03 16:40:52 pve kernel: audit: type=1400 audit(1767447652.091:19408): apparmor="DENIED" operation="mount" class="mount" info="failed flags match" error=-13 profile="lxc-1001_</var/lib/lxc>" name="/run/systemd/namespace-ngqfa5/" pid=>
Oca 03 16:40:52 pve kernel: vmbr0: port 7(veth1001i0) entered disabled state
Oca 03 16:40:52 pve kernel: veth1001i0 (unregistering): left allmulticast mode
Oca 03 16:40:52 pve kernel: veth1001i0 (unregistering): left promiscuous mode
Oca 03 16:40:52 pve kernel: vmbr0: port 7(veth1001i0) entered disabled state
Oca 03 16:40:52 pve kernel: audit: type=1400 audit(1767447652.389:19409): apparmor="STATUS" operation="profile_remove" profile="/usr/bin/lxc-start" name="lxc-1001_</var/lib/lxc>" pid=1473070 comm="apparmor_parser"
Oca 03 16:40:52 pve kernel: fwbr114i0: port 2(veth114i0) entered blocking state
Oca 03 16:40:52 pve kernel: fwbr114i0: port 2(veth114i0) entered forwarding state
Oca 03 16:40:53 pve kernel: EXT4-fs (loop5): unmounting filesystem e89cf519-de30-4fa8-8bc4-3c21f4280ecc.
Oca 03 16:40:53 pve systemd[1]: pve-container@1001.service: Deactivated successfully.
Oca 03 16:40:53 pve systemd[1]: pve-container@1001.service: Consumed 706ms CPU time, 94.1M memory peak.
Oca 03 16:40:53 pve kernel: loop5: detected capacity change from 0 to 8388608
Oca 03 16:40:53 pve kernel: EXT4-fs (loop5): mounted filesystem e89cf519-de30-4fa8-8bc4-3c21f4280ecc r/w with ordered data mode. Quota mode: none.
Oca 03 16:40:53 pve iptag[839]: = LXC 105: IP tag [1.105] unchanged
Oca 03 16:40:54 pve pvedaemon[1460770]: VM 113 qga command failed - VM 113 qga command 'guest-ping' failed - got timeout
Oca 03 16:40:56 pve iptag[839]: = LXC 114: IP tag [1.121] unchanged
Oca 03 16:40:57 pve iptag[839]: = LXC 1001: No IP detected, tags unchanged
Oca 03 16:40:57 pve iptag[839]: ✓ Completed processing LXC containers
Oca 03 16:40:57 pve iptag[839]: ℹ Processing 2 virtual machine(s) sequentially
Oca 03 16:40:58 pve kernel: audit: type=1400 audit(1767447658.848:19410): apparmor="DENIED" operation="create" class="net" info="failed protocol match" error=-13 profile="docker-default" pid=1277 comm="agent" family="unix" sock_type="st>
Oca 03 16:40:59 pve iptag[839]: = VM 112: IP tag [1.107] unchanged

task list from the PBS UI:

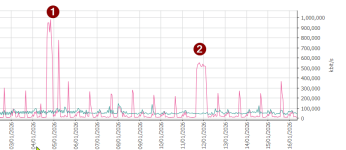

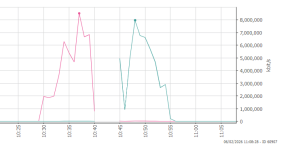

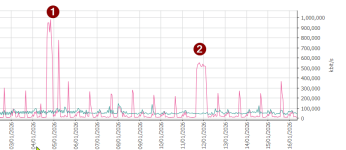

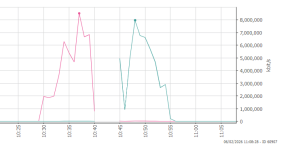

View attachment 94579

proxmox-backup-manager task list:

View attachment 94582

Task log is below. However, the stalls don't always happen at the same stage of the backup process.

Bash:
pvenode task log UPID:pve:00166BB4:00578C0E:69591C39:vzdump::root@pam:
INFO: starting new backup job: vzdump 1001 114 104 --storage pbs-unencrypted-datastore1 --notification-mode notification-system --quiet 1 --fleecing 0 --mode stop --notes-template '{{cluster}}, {{guestname}}, {{node}}, {{vmid}}'
INFO: Starting Backup of VM 104 (lxc)
INFO: Backup started at 2026-01-03 16:40:09
INFO: status = running
INFO: backup mode: stop
INFO: ionice priority: 7
INFO: CT Name: tstui
INFO: including mount point rootfs ('/') in backup
INFO: stopping virtual guest
INFO: creating Proxmox Backup Server archive 'ct/104/2026-01-03T13:40:09Z'
INFO: set max number of entries in memory for file-based backups to 1048576
INFO: run: lxc-usernsexec -m u:0:100000:65536 -m g:0:100000:65536 -- /usr/bin/proxmox-backup-client backup --crypt-mode=encrypt --keyfd=11 pct.conf:/var/tmp/vzdumptmp1469364_104/etc/vzdump/pct.conf root.pxar:/mnt/vzsnap0 --include-dev /mnt/vzsnap0/./ --skip-lost-and-found --exclude=/tmp/?* --exclude=/var/tmp/?* --exclude=/var/run/?*.pid --backup-type ct --backup-id 104 --backup-time 1767447609 --entries-max 1048576 --repository root@pam@pbs.***.net:pbs-unencrypted-datastore1
INFO: Starting backup: ct/104/2026-01-03T13:40:09Z
INFO: Client name: pve
INFO: Starting backup protocol: Sat Jan 3 16:40:11 2026
INFO: Using encryption key from file descriptor..
INFO: Encryption key fingerprint: 7f:a1:42:c8:a3:b3:ed:e8
INFO: Downloading previous manifest (Sat Jan 3 16:20:09 2026)
INFO: Upload config file '/var/tmp/vzdumptmp1469364_104/etc/vzdump/pct.conf' to 'root@pam@pbs.***.net:8007:pbs-unencrypted-datastore1' as pct.conf.blob
INFO: Upload directory '/mnt/vzsnap0' to 'root@pam@pbs.***.net:8007:pbs-unencrypted-datastore1' as root.pxar.didx
INFO: root.pxar: had to backup 86.922 MiB of 4.335 GiB (compressed 22.363 MiB) in 18.18 s (average 4.782 MiB/s)
INFO: root.pxar: backup was done incrementally, reused 4.25 GiB (98.0%)
INFO: Uploaded backup catalog (1.691 MiB)
INFO: Duration: 18.75s
INFO: End Time: Sat Jan 3 16:40:30 2026
INFO: adding notes to backup
INFO: restarting vm
INFO: guest is online again after 23 seconds
INFO: Finished Backup of VM 104 (00:00:23)
INFO: Backup finished at 2026-01-03 16:40:32
INFO: Starting Backup of VM 114 (lxc)
INFO: Backup started at 2026-01-03 16:40:33
INFO: status = running
INFO: backup mode: stop
INFO: ionice priority: 7
INFO: CT Name: turnkeyFM
INFO: including mount point rootfs ('/') in backup
INFO: stopping virtual guest
INFO: creating Proxmox Backup Server archive 'ct/114/2026-01-03T13:40:33Z'
INFO: set max number of entries in memory for file-based backups to 1048576
INFO: run: lxc-usernsexec -m u:0:100000:65536 -m g:0:100000:65536 -- /usr/bin/proxmox-backup-client backup --crypt-mode=encrypt --keyfd=11 pct.conf:/var/tmp/vzdumptmp1469364_114/etc/vzdump/pct.conf root.pxar:/mnt/vzsnap0 --include-dev /mnt/vzsnap0/./ --skip-lost-and-found --exclude=/tmp/?* --exclude=/var/tmp/?* --exclude=/var/run/?*.pid --backup-type ct --backup-id 114 --backup-time 1767447633 --entries-max 1048576 --repository root@pam@pbs.***.net:pbs-unencrypted-datastore1
INFO: Starting backup: ct/114/2026-01-03T13:40:33Z
INFO: Client name: pve
INFO: Starting backup protocol: Sat Jan 3 16:40:35 2026
INFO: Using encryption key from file descriptor..
INFO: Encryption key fingerprint: 7f:a1:42:c8:a3:b3:ed:e8
INFO: Downloading previous manifest (Sat Jan 3 16:20:36 2026)
INFO: Upload config file '/var/tmp/vzdumptmp1469364_114/etc/vzdump/pct.conf' to 'root@pam@pbs.***.net:8007:pbs-unencrypted-datastore1' as pct.conf.blob
INFO: Upload directory '/mnt/vzsnap0' to 'root@pam@pbs.***.net:8007:pbs-unencrypted-datastore1' as root.pxar.didx
INFO: root.pxar: had to backup 83.119 MiB of 2.053 GiB (compressed 18.343 MiB) in 12.82 s (average 6.483 MiB/s)
INFO: root.pxar: backup was done incrementally, reused 1.972 GiB (96.0%)
INFO: Uploaded backup catalog (2.083 MiB)
INFO: Duration: 13.64s
INFO: End Time: Sat Jan 3 16:40:49 2026
INFO: adding notes to backup
INFO: restarting vm
INFO: guest is online again after 18 seconds
INFO: Finished Backup of VM 114 (00:00:18)
INFO: Backup finished at 2026-01-03 16:40:51
INFO: Starting Backup of VM 1001 (lxc)
INFO: Backup started at 2026-01-03 16:40:52
INFO: status = running
INFO: backup mode: stop
INFO: ionice priority: 7
INFO: CT Name: pve-scripts-local
INFO: including mount point rootfs ('/') in backup
INFO: stopping virtual guest
INFO: creating Proxmox Backup Server archive 'ct/1001/2026-01-03T13:40:52Z'
INFO: set max number of entries in memory for file-based backups to 1048576
INFO: run: lxc-usernsexec -m u:0:100000:65536 -m g:0:100000:65536 -- /usr/bin/proxmox-backup-client backup --crypt-mode=encrypt --keyfd=11 pct.conf:/var/tmp/vzdumptmp1469364_1001/etc/vzdump/pct.conf root.pxar:/mnt/vzsnap0 --include-dev /mnt/vzsnap0/./ --skip-lost-and-found --exclude=/tmp/?* --exclude=/var/tmp/?* --exclude=/var/run/?*.pid --backup-type ct --backup-id 1001 --backup-time 1767447652 --entries-max 1048576 --repository root@pam@pbs.***.net:pbs-unencrypted-datastore1
INFO: Starting backup: ct/1001/2026-01-03T13:40:52Z
INFO: Client name: pve
INFO: Starting backup protocol: Sat Jan 3 16:40:53 2026
INFO: Using encryption key from file descriptor..
INFO: Encryption key fingerprint: 7f:a1:42:c8:a3:b3:ed:e8
INFO: Downloading previous manifest (Sat Jan 3 16:20:55 2026)
INFO: Upload config file '/var/tmp/vzdumptmp1469364_1001/etc/vzdump/pct.conf' to 'root@pam@pbs.***.net:8007:pbs-unencrypted-datastore1' as pct.conf.blob
INFO: Upload directory '/mnt/vzsnap0' to 'root@pam@pbs.***.net:8007:pbs-unencrypted-datastore1' as root.pxar.didx
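To compare runs of the same guest over time, the "Finished Backup of VM ... (HH:MM:SS)" entries in a vzdump task log like the one above can be reduced to a guest ID and a duration in seconds. This is a rough sketch that only assumes the message format seen in this log; `backup_durations` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read a vzdump task log on stdin and print
# "<vmid> <seconds>" for every "Finished Backup of VM" entry.
backup_durations() {
    grep -oE 'Finished Backup of VM [0-9]+ \([0-9]{2}:[0-9]{2}:[0-9]{2}\)' |
    LC_ALL=C awk '{
        gsub(/[()]/, "", $6)          # "(00:00:23)" -> "00:00:23"
        split($6, t, ":")
        print $5, t[1] * 3600 + t[2] * 60 + t[3]
    }'
}

# Usage on a PVE host (not executed here; <UPID> is the task ID to inspect):
#   pvenode task log <UPID> | backup_durations
```

A guest whose backup never finishes, like VM 1001 here, simply produces no line, which makes a stalled run easy to spot next to earlier ones.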

proxmox-backup-manager task logs are below but don't show much info:

Bash:
proxmox-backup-manager task log "UPID:proxmox-backup-server:00000119:00001185:00000280:69591C4E:verify:pbs\x2dunencrypted\x2ddatastore1\x3act-104-69591C39:root@pam:"
Automatically verifying newly added snapshot
verify pbs-unencrypted-datastore1:ct/104/2026-01-03T13:40:09Z
check pct.conf.blob
check root.pxar.didx
Error: task failed (status unknown)
proxmox-backup-manager task log "UPID:proxmox-backup-server:00000119:00001185:00000282:69591C61:verify:pbs\x2dunencrypted\x2ddatastore1\x3act-114-69591C51:root@pam:"
Automatically verifying newly added snapshot
verify pbs-unencrypted-datastore1:ct/114/2026-01-03T13:40:33Z
check pct.conf.blob
check root.pxar.didx
Error: task failed (status unknown)
proxmox-backup-manager task log "UPID:proxmox-backup-server:00000119:00001185:00000283:69591C65:backup:pbs\x2dunencrypted\x2ddatastore1\x3act-1001:root@pam:"
Error: task failed (status unknown)

I run iostat and vmstat continuously to watch for issues. HDD utilization goes very high, but I'm not sure whether that could bring the whole system to a halt.
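Alongside iostat and vmstat, the kernel's pressure stall information in /proc/pressure/io gives a direct reading of how much time tasks spend waiting on I/O, which is one way to check the "io pressure" mentioned earlier in the thread. A small sketch, assuming a kernel with PSI enabled and the standard two-line "some"/"full" layout of that file; `psi_avg10` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the avg10 value (percent of the last 10 s
# spent stalled) for the "some" or "full" line of a PSI file.
# $1: "some" or "full"; the PSI text is read from stdin.
psi_avg10() {
    LC_ALL=C awk -v kind="$1" '
        $1 == kind { sub(/^avg10=/, "", $2); print $2 }
    '
}

# Usage during a backup (not executed here):
#   psi_avg10 full < /proc/pressure/io
#   psi_avg10 some < /proc/pressure/memory
```

A "full" value that stays high while a backup or verify job runs would suggest that all runnable tasks are waiting on the disk at once, which fits a saturated single HDD better than a network problem.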

As I said, my stalls happen on both 6.17.4-1-pve and 6.14.11-4-pve; the output below is from 6.17.4-1-pve. perf script didn't show much on pve, though:

Bash:
cat /proc/sys/net/ipv4/tcp_rmem
4096 131072 33554432
perf record -a -e tcp:tcp_rcvbuf_grow sleep 30 ; perf script
[ perf record: Woken up 1 times to write data ]
[ perf record: Captured and wrote 0,623 MB perf.data ]
rm: cannot remove 'dump.pcap': No such file or directory
[1] 6501
tcpdump: your-interface: No such device exists (No such device exists)
SHA256 speed: 469.70 MB/s
Compression speed: 438.59 MB/s
Decompress speed: 722.22 MB/s
AES256/GCM speed: 2484.33 MB/s
Verify speed: 280.96 MB/s
┌───────────────────────────────────┬────────────────────┐
│ Name                              │ Value              │
╞═══════════════════════════════════╪════════════════════╡
│ TLS (maximal backup upload speed) │ not tested         │
├───────────────────────────────────┼────────────────────┤
│ SHA256 checksum computation speed │ 469.70 MB/s (23%)  │
├───────────────────────────────────┼────────────────────┤
│ ZStd level 1 compression speed    │ 438.59 MB/s (58%)  │
├───────────────────────────────────┼────────────────────┤
│ ZStd level 1 decompression speed  │ 722.22 MB/s (60%)  │
├───────────────────────────────────┼────────────────────┤
│ Chunk verification speed          │ 280.96 MB/s (37%)  │
├───────────────────────────────────┼────────────────────┤
│ AES256 GCM encryption speed       │ 2484.33 MB/s (68%) │
└───────────────────────────────────┴────────────────────┘
[1]+ Exit 1 tcpdump port 8007 -i $iface -w dump.pcap
tcpdump: no process found
8

I'm not sure whether this issue is caused by faulty HW (RAM, though I ran memtest several times; HDD/SSD; CPU; PSU; or mainboard) or by SW. I'd appreciate any help to troubleshoot further or solve the issue.

Many thanks in advance!

I had the same issues with backups after upgrading to PBS 4.1. I reverted to the 6.11.11-2-pve kernel that was previously used and backups work fine again. I didn't want to upgrade to 6.17.4-2-pve as on my home lab my Proxmox box crashed after a few days running that kernel.

Now I'm a little concerned about running 6.17 on my production cluster. My home lab box has an AMD 7945HX CPU, 64GB RAM, and a couple of NVMe drives. I also run PBS in a container that backs up to a USB4 flash drive. Our prod cluster runs AMD EPYC 9 CPUs with Intel NICs.

thanks all

After an upgrade to the latest kernel we noticed a severe drop in performance for operations like cloning a VM or CT.

We use a separate SSD-based Ceph cluster on a 40GB network. Before the update speeds were normal; after the update, cloning a 600GB VM disk takes more than 5 hours. Nothing has been changed in the config of the storage cluster.

from which kernel version to which kernel version? were other components/packages upgraded at the same time? do you see other symptoms described in this thread (connections stalling with tiny receive windows)?

After updating PBS from 4.0.4 to the current version 4.1.0 (Enterprise repository) with kernel 6.17.2 on Monday, we suddenly experienced backup failures, even resulting in the destruction of VMs during the backup process. This cost us a great deal of work. After finding this thread, we temporarily switched PBS to the Community repository and performed the update to PBS 4.1.1 with kernel 6.17.4. The backup process now seems to be running stably again.

Therefore, two questions:

1. Why is a kernel released in the Enterprise repository and redistributed across multiple versions (PBS 4.0.20 - 4.1.0) when a bug has been known since the end of November 2025? We use the Enterprise repository precisely to prevent such issues!

2. When will the 6.17.4 kernel be available in the Enterprise repository?

1. we did fix the issue we could reproduce quite fast

2. despite this long thread, only a (small!) subset of systems is affected, which is the reason why we haven't been able to reproduce the remaining issue at all so far, despite many hours spent by multiple team members on our end

3. rebooting into a working kernel as a workaround is possible, if your system/setup is affected

4. the 6.17.4-2 kernel will probably be moved to enterprise today or on Monday (we've waited for feedback by affected users, which only came in this week after everybody returned from their respective holidays). the package will be identical to the one on no-subscription (this is always true), so you can already install it from there if you want to have it now

Many thanks for your reply. The good news: "the 6.17.4-2 kernel will probably be moved to enterprise today or on Monday", so we can change back to enterprise-repo. Especially since this kernel also runs on the PVEs, and I have little confidence in it anymore.

Let me elaborate a bit more on this issue. After the kernel upgrade on PBS the situation has improved, but it is still not as it used to be. I have noticed that backups of a random VM sometimes take far longer than they should. For instance, a backup of the same VM takes 28 seconds one day, while the next day it can take 2 hours or even fail. If it fails, there is a good chance that the dirty bitmap is invalid, so the next backup will take much longer.

To quantify a bit: before the kernel upgrade of PBS to the latest version, some backups were running at kilobits per second; now the slow ones reach a few tens of megabits per second (10-40).

As I mentioned before, we have two clusters: one is still being populated on 9.1.1 (VMs imported from VMware), while the other was just recently upgraded from PVE 8.4.14 to 9.1.1. So both clusters are now on exactly the same version with kernel 6.17.2-1.

So my assumption is that the remaining slowness comes from PVE, and that the PVE kernel also needs to be upgraded to 6.17.4-2. As we are using the Enterprise repo, I assume this kernel will be there soon (hopefully).

I am surprised that this regression was not noticed earlier, as it looks quite serious to me. Luckily the slowness is not being "noticed" by Ceph; otherwise it would have been a much bigger problem.

fwiw, I had a stall on backups a couple of days ago after upgrading and unpinning my PBS server without upgrading PVE to the newest kernel. I then upgraded the PVE cluster to the new kernel and so far it's working as it should.

We have a similar setup (separate Ceph) and noticed the same. The main difference we saw was during backups. The same backup job now takes almost double the time to complete.

As the user you quoted never replied to Fabian's follow-up questions, maybe you can chime in here:

from which kernel version to which kernel version? were other components/packages upgraded at the same time? do you see other symptoms described in this thread (connections stalling with tiny receive windows)?

Hi Thomas,

sorry for the lack of details in my previous post.

Our setup consists of a 10 node PVE cluster with qdevice. Then we have two separate 5 node Ceph clusters.

Ceph version for both clusters is 19.2.2 squid (stable)

In January we updated our PVE cluster nodes from Proxmox Version: 8.4.14 Kernel: Linux 6.8.12-15-pve to Proxmox Version: 9.1.4 Kernel: Linux 6.17.4-2-pve. We did not change anything on ceph.

We are using Veeam as our backup tool. At first we were running Veeam version 12 and we thought it might be a compatibility issue with PVE 9, but the slowness persisted even after the upgrade to version 13.

| Job Comparison | | Duration | Data Transferred |
|---|---|---|---|
| Proxmox Version: 8.4.14 | 127 | 06:43:06 | 5.3TB |
| Proxmox Version: 9.1.4 | 130 | 11:46:40 | 5.3TB |
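The two jobs in the comparison above moved the same amount of data, so the durations translate directly into an average rate. The sketch below does that arithmetic under two assumptions that are not stated in the post: that 5.3TB means decimal terabytes, and that this is the amount actually sent over the wire. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Convert an HH:MM:SS duration to seconds.
hms_to_seconds() {
    printf '%s\n' "$1" | LC_ALL=C awk -F: '{ print $1 * 3600 + $2 * 60 + $3 }'
}

# Average rate in MB/s for <terabytes> moved in <HH:MM:SS>.
# Assumes decimal units: 1 TB = 1,000,000 MB.
avg_mb_per_s() {
    local tb="$1" secs
    secs="$(hms_to_seconds "$2")"
    LC_ALL=C awk -v tb="$tb" -v s="$secs" 'BEGIN { printf "%.1f\n", tb * 1000000 / s }'
}

avg_mb_per_s 5.3 06:43:06   # PVE 8.4.14 job
avg_mb_per_s 5.3 11:46:40   # PVE 9.1.4 job
```

Under those assumptions the averages come out at roughly 219 MB/s before and 125 MB/s after the upgrade, a slowdown of about 1.75x. They need not match the peak job rates reported by the backup tool, which may be measured differently.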

I also did a comparison of the logs during the backup time.

Proxmox Version: 8.4.14

| facility | Count |
|---|---|
| pvescheduler | 4 |
| pveproxy | 2188 |
| pvedaemon | 678 |
| pvestatd | 6324 |

Proxmox Version: 9.1.4

| facility | Count |
|---|---|
| pvescheduler | 7 |
| pveproxy | 282988 |
| pvedaemon | 700 |
| pvestatd | 4272 |
| pvefw-logger | 30 |

Of the pveproxy messages, 279445 are this error, which was not present in v8.4.14: Use of uninitialized value $value in addition (+) at /usr/share/perl5/PVE/PullMetric.pm line 72.
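A per-facility count like the one above can be reproduced from the journal for any time window. This is only a sketch: it assumes journalctl's default short output, where the fifth whitespace-separated field is the identifier followed by an optional "[pid]" and a colon, and `count_by_identifier` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count journal lines per syslog identifier.
# Reads short-format journal lines on stdin, prints "<identifier> <count>".
count_by_identifier() {
    LC_ALL=C awk '
        NF >= 5 {
            id = $5
            sub(/\[[0-9]+\]:$/, "", id)   # strip a trailing "[pid]:"
            sub(/:$/, "", id)             # strip a bare trailing ":"
            count[id]++
        }
        END { for (id in count) print id, count[id] }
    ' | LC_ALL=C sort
}

# Usage on a PVE node (not executed here; the time window is an example):
#   journalctl --since "2026-01-03 16:00" --until "2026-01-03 17:00" | count_by_identifier
```

Running it over the same backup window before and after an upgrade gives numbers that can be compared line by line, and a sudden jump for one identifier points at the messages worth reading first.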

The issue is throughput: our backup jobs used to achieve 700MB/s, and now they run at around 320MB/s.

Hi Thomas,

below please find the network throughput graph from one of the PVE nodes on the network bond related to the Ceph traffic. This bond is dedicated to ceph client network only. The purple line is the received traffic on the node. 2 is after the upgrade to 9.1.4

To eliminate any doubt about the network infrastructure: we also have a dedicated bond for live migrations, and speed there is excellent.

This further points to the kernel's interaction with the Ceph module. At least that is my opinion.
