Hey all!

I had a perfectly working PVE7 not so long ago. Today I went to SSH into my LXC container managed by Portainer to edit a config file (for Frigate NVR), only to find that I cannot SSH into it.

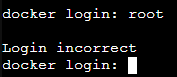

I have tried FileZilla and PuTTY, and both give "ECONNREFUSED - Connection refused by server". From the LXC console inside Proxmox I cannot get past the username prompt (root):

What I have checked so far:

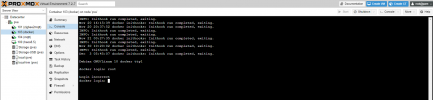

Contents of /var/log/auth.log (opened with nano) from inside the LXC, which I accessed with lxc-attach --name 103:

Bash:

Nov 21 02:25:07 docker sudo: root : TTY=pts/1 ; PWD=/ ; USER=root ; COMMAND=/usr/bin/intel_gpu_top

Nov 21 02:25:07 docker sudo: pam_unix(sudo:session): session opened for user root by (uid=0)

Nov 21 02:25:07 docker sudo: pam_unix(sudo:session): session closed for user root

Nov 21 02:28:39 docker sshd[35005]: Accepted password for root from 192.168.1.122 port 60417 ssh2

Nov 21 02:28:39 docker sshd[35005]: pam_unix(sshd:session): session opened for user root by (uid=0)

Nov 21 02:29:39 docker sshd[35005]: pam_unix(sshd:session): session closed for user root

Nov 21 02:35:04 docker sshd[35241]: Accepted password for root from 192.168.1.122 port 60589 ssh2

Nov 21 02:35:04 docker sshd[35241]: pam_unix(sshd:session): session opened for user root by (uid=0)

Nov 21 02:36:47 docker sshd[35241]: pam_unix(sshd:session): session closed for user root

Nov 21 02:36:58 docker sshd[36189]: Accepted password for root from 192.168.1.122 port 60648 ssh2

Nov 21 02:36:58 docker sshd[36189]: pam_unix(sshd:session): session opened for user root by (uid=0)

Nov 21 02:38:43 docker sshd[36189]: pam_unix(sshd:session): session closed for user root

Nov 21 04:22:22 docker sshd[9003]: pam_unix(sshd:session): session closed for user root

Dec 3 05:32:35 docker login[245]: PAM unable to dlopen(pam_unix.so): /lib/security/pam_unix.so: cannot open shared object file: No such file or directory

Dec 3 05:32:35 docker login[245]: PAM adding faulty module: pam_unix.so

Dec 3 05:32:39 docker login[245]: FAILED LOGIN (1) on '/dev/tty1' FOR 'root', Authentication failure

Dec 3 05:34:56 docker login[109300]: PAM unable to dlopen(pam_unix.so): /lib/security/pam_unix.so: cannot open shared object file: No such file or directory

Dec 3 05:34:56 docker login[109300]: PAM adding faulty module: pam_unix.so

Dec 3 05:34:58 docker login[109300]: FAILED LOGIN (1) on '/dev/tty1' FOR 'root', Authentication failure

Dec 3 05:38:10 docker login[109360]: PAM unable to dlopen(pam_unix.so): /lib/security/pam_unix.so: cannot open shared object file: No such file or directory

Dec 3 05:38:10 docker login[109360]: PAM adding faulty module: pam_unix.so

Dec 3 05:38:12 docker login[109360]: FAILED LOGIN (1) on '/dev/tty1' FOR 'root', Authentication failure

Dec 3 05:38:19 docker login[109360]: FAILED LOGIN (2) on '/dev/tty1' FOR 'root', Authentication failure

Dec 3 05:45:29 docker login[247]: PAM unable to dlopen(pam_unix.so): /lib/security/pam_unix.so: cannot open shared object file: No such file or directory

Dec 3 05:45:29 docker login[247]: PAM adding faulty module: pam_unix.so

Dec 3 05:45:33 docker login[247]: FAILED LOGIN (1) on '/dev/tty1' FOR 'root', Authentication failure

Dec 3 05:59:37 docker login[1402]: PAM unable to dlopen(pam_unix.so): /lib/security/pam_unix.so: cannot open shared object file: No such file or directory

Dec 3 05:59:37 docker login[1402]: PAM adding faulty module: pam_unix.so

Dec 3 05:59:40 docker login[1402]: FAILED LOGIN (1) on '/dev/tty1' FOR 'root', Authentication failure

Dec 3 06:06:04 docker login[1807]: PAM unable to dlopen(pam_unix.so): /lib/security/pam_unix.so: cannot open shared object file: No such file or directory

Dec 3 06:06:04 docker login[1807]: PAM adding faulty module: pam_unix.so

Dec 3 06:06:07 docker login[1807]: FAILED LOGIN (1) on '/dev/tty1' FOR 'root', Authentication failure

Dec 3 06:12:01 docker passwd[2103]: PAM unable to dlopen(pam_unix.so): /lib/security/pam_unix.so: cannot open shared object file: No such file or directory

Dec 3 06:12:01 docker passwd[2103]: PAM adding faulty module: pam_unix.so

Dec 3 06:36:40 docker login[1981]: PAM unable to dlopen(pam_unix.so): /lib/security/pam_unix.so: cannot open shared object file: No such file or directory

Dec 3 06:36:40 docker login[1981]: PAM adding faulty module: pam_unix.so

Dec 3 06:36:43 docker login[1981]: FAILED LOGIN (1) on '/dev/tty1' FOR 'root', Authentication failure

I'm not sure what to do or where to start fixing the problem. I have already spent a few hours searching around. I have moderate Linux knowledge, but this is me experimenting with Proxmox, so the cause could be some obscure user error on my part.
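To condense the auth.log excerpt above, here is a minimal Python sketch that counts the "PAM unable to dlopen" errors per program and module path. It assumes only the line format visible in the log; the function name `summarize_pam_failures`, the regular expression, and the three sample lines (copied from the log) are illustrative choices, not part of any Proxmox or PAM tooling.

```python
import re
from collections import Counter

# Matches lines such as:
#   "... login[245]: PAM unable to dlopen(pam_unix.so): /lib/security/pam_unix.so: cannot open ..."
# The pattern is an assumption derived from the log excerpt, not an official format.
DLOPEN_RE = re.compile(
    r"(?P<prog>[\w.-]+)\[\d+\]: PAM unable to dlopen\((?P<module>[^)]+)\): (?P<path>\S+?):"
)


def summarize_pam_failures(lines):
    """Count PAM dlopen failures per (program, module path)."""
    counts = Counter()
    for line in lines:
        match = DLOPEN_RE.search(line)
        if match:
            counts[(match.group("prog"), match.group("path"))] += 1
    return counts


# Three lines copied from the log above; the FAILED LOGIN line is ignored on purpose.
sample = [
    "Dec 3 05:32:35 docker login[245]: PAM unable to dlopen(pam_unix.so): "
    "/lib/security/pam_unix.so: cannot open shared object file: No such file or directory",
    "Dec 3 05:32:39 docker login[245]: FAILED LOGIN (1) on '/dev/tty1' FOR 'root', "
    "Authentication failure",
    "Dec 3 06:12:01 docker passwd[2103]: PAM unable to dlopen(pam_unix.so): "
    "/lib/security/pam_unix.so: cannot open shared object file: No such file or directory",
]

for (prog, path), count in summarize_pam_failures(sample).items():
    print(f"{prog}: {count} failed load(s) of {path}")
```

Run against the full file (for example by passing `open("/var/log/auth.log")` instead of `sample`), this would show which programs fail to load the module and which path they look in. In the excerpt, every such failure comes from login or passwd and points at /lib/security/pam_unix.so.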

The only thing that comes to mind is that I recently upgraded the storage on my machine: I added one internal SSD and a USB SSD.

To add the storage I followed these videos (I basically used bind mount points):

https://www.youtube.com/watch?v=w9X94bAm3dI

https://www.youtube.com/watch?v=65woVFSmEGE

https://www.youtube.com/watch?v=ATuUBocesmA

Best regards,

Bruno
