After upgrading PVE and rebooting the host, all but one of our VMs crashed while the guest OS was starting.

Attempted:

- Reboot the host

- Repair the drive from inside the VM

- Restore from backup

- OS recovery

- Pin the 5.15.64-1-pve kernel

We noticed the log contained

blk_set_enable_write_cache: Assertion `qemu_in_main_thread()' failed

and assumed this was related to caching being disabled on all drives. On a whim, we enabled writethrough caching, and the VM was then able to start. This was only needed on the boot volume; the additional drives attached to the VM still run without any cache. Obviously, this is only a temporary fix to get up and running again...
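To make the workaround concrete (writethrough cache on the boot disk only, other drives left uncached), here is a minimal Python sketch that applies it to the text of a VM config in the `/etc/pve/qemu-server/<vmid>.conf` format. It is not from the original post: the helper names `find_boot_disk` and `set_cache_mode` are hypothetical, and it assumes the `boot: order=...` syntax (falling back to the older `bootdisk:` key) and that snapshot sections begin with a `[name]` line. On a real host, changing the disk's cache option in the GUI or with `qm set` is safer than rewriting the file by hand.

```python
import re
from typing import Dict, Optional

# Drive keys used for VM disks in a Proxmox VE config (ide0, sata0, scsi0, virtio0, ...).
DISK_KEY = re.compile(r"^(ide|sata|scsi|virtio)\d+$")


def parse_options(config_text: str) -> Dict[str, str]:
    """Parse 'key: value' lines of the current config, ignoring snapshot sections."""
    opts: Dict[str, str] = {}
    for line in config_text.splitlines():
        if line.startswith("["):  # assumption: snapshot sections start here; stop parsing
            break
        if not line.strip() or line.startswith("#"):  # skip blanks and description comments
            continue
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:
            opts[key.strip()] = value.strip()
    return opts


def find_boot_disk(config_text: str) -> Optional[str]:
    """Return the first non-CD-ROM disk in 'boot: order=...', else the legacy 'bootdisk'."""
    opts = parse_options(config_text)
    boot = opts.get("boot", "")
    if boot.startswith("order="):
        for key in boot[len("order="):].split(";"):
            drive = opts.get(key)
            if DISK_KEY.match(key) and drive and "media=cdrom" not in drive.split(","):
                return key
    legacy = opts.get("bootdisk")
    if legacy and legacy in opts:
        return legacy
    return None


def set_cache_mode(config_text: str, disk_key: str, mode: str = "writethrough") -> str:
    """Return a copy of the config with cache=<mode> set on disk_key only."""
    out = []
    in_current = True
    for line in config_text.splitlines():
        if line.startswith("["):
            in_current = False  # never touch snapshot sections
        key, sep, value = line.partition(":")
        if in_current and sep and key.strip() == disk_key:
            # Keep the volume first, drop any existing cache= option, then add the new one.
            parts = [p for p in value.strip().split(",") if not p.startswith("cache=")]
            parts.insert(1, f"cache={mode}")
            line = f"{disk_key}: {','.join(parts)}"
        out.append(line)
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    conf = (
        "boot: order=scsi0;ide2;net0\n"
        "ide2: none,media=cdrom\n"
        "scsi0: local-lvm:vm-100-disk-0,size=32G\n"
        "scsi1: local-lvm:vm-100-disk-1,size=100G\n"
    )
    disk = find_boot_disk(conf)
    print(disk)
    print(set_cache_mode(conf, disk), end="")
```

With this example config, only `scsi0` (the boot disk) gains `cache=writethrough`; the CD-ROM on `ide2` is skipped when picking the boot disk, and `scsi1` stays without a cache option, mirroring the setup described above.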

pveversion:

Code:

proxmox-ve: 7.2-1 (running kernel: 5.15.74-1-pve)

pve-manager: 7.2-14 (running version: 7.2-14/65898fbc)

pve-kernel-5.15: 7.2-14

pve-kernel-helper: 7.2-14

pve-kernel-5.15.74-1-pve: 5.15.74-1

pve-kernel-5.15.64-1-pve: 5.15.64-1

pve-kernel-5.15.30-2-pve: 5.15.30-3

ceph-fuse: 15.2.16-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.2

libproxmox-backup-qemu0: 1.3.1-1

libpve-access-control: 7.2-5

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.2-7

libpve-guest-common-perl: 4.2-2

libpve-http-server-perl: 4.1-5

libpve-storage-perl: 7.2-12

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.0-3

lxcfs: 4.0.12-pve1

novnc-pve: 1.3.0-3

proxmox-backup-client: 2.2.7-1

proxmox-backup-file-restore: 2.2.7-1

proxmox-mini-journalreader: 1.3-1

proxmox-offline-mirror-helper: 0.5.0-1

proxmox-widget-toolkit: 3.5.2

pve-cluster: 7.2-3

pve-container: 4.3-5

pve-docs: 7.2-3

pve-edk2-firmware: 3.20220526-1

pve-firewall: 4.2-7

pve-firmware: 3.5-6

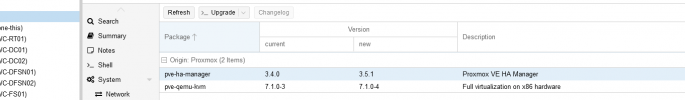

pve-ha-manager: 3.4.0

pve-i18n: 2.7-2

pve-qemu-kvm: 7.1.0-3

pve-xtermjs: 4.16.0-1

qemu-server: 7.2-10

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+2

vncterm: 1.7-1

zfsutils-linux: 2.1.6-pve1
