I've already read through several threads on this, but unfortunately I couldn't work it out from them. That's why I'd like to go through it properly here.

Problem:

What I did:

I added my NAS via SMB/CIFS via Data Center > Storage:

ID: MyCloudEX2Ultra | Server: 192.168.2.134 | Username: srv-01 | Share: srv-01 | Nodes: All | Enable: Yes | Content: VZDump backup file

I have added a job via Datacenter > Backup:

Node: All | Storage: MyCloudEX2Ultra | Schedule: 9 pm everyday | Selection Mode: All | Compression: ZSTD | Mode: Snapshot | Enable: Yes | Retain for 3 months

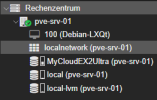

The storage MyCloudEX2Ultra (pve-srv-01) also appears on the left in the pve-srv-01 node. I am logged in to Webaccess as root all the time for security reasons. I am aware of the security risks, but the server is on a test basis.

In the pve-srv-01 node in the shell:

If it is relevant:

I will be happy to answer any questions. Thank you all in advance.

Problem:

```
INFO: starting new backup job: vzdump --notes-template '{{guestname}}' --prune-backups 'keep-monthly=3' --all 1 --fleecing 0 --storage MyCloudEX2Ultra --node pve-srv-01 --mode snapshot --compress zstd
INFO: Starting Backup of VM 100 (qemu)
INFO: Backup started at 2024-09-26 12:40:30
INFO: status = stopped
INFO: backup mode: stop
INFO: ionice priority: 7
INFO: VM Name: Debian-LXQt
INFO: include disk 'virtio0' 'local-lvm:vm-100-disk-1' 32G
INFO: include disk 'efidisk0' 'local-lvm:vm-100-disk-0' 4M
ERROR: Backup of VM 100 failed - unable to open '/mnt/pve/MyCloudEX2Ultra/dump/vzdump-qemu-100-2024_09_26-12_40_30.tmp/qemu-server.conf' - Stale file handle
INFO: Failed at 2024-09-26 12:40:30
INFO: Backup job finished with errors
TASK ERROR: job errors
```

What I did:

I added my NAS as SMB/CIFS storage under Datacenter > Storage:

ID: MyCloudEX2Ultra | Server: 192.168.2.134 | Username: srv-01 | Share: srv-01 | Nodes: All | Enable: Yes | Content: VZDump backup file
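
To double-check what the GUI actually saved: Proxmox VE keeps storage definitions in `/etc/pve/storage.cfg`, and a small filter can print just the `MyCloudEX2Ultra` stanza. This is a rough sketch, assuming the usual layout of a `type: id` header line followed by indented `key value` lines; the function name `show_storage_stanza` is my own.

```shell
#!/bin/sh
# Print the stanza for one storage ID from a Proxmox VE storage config.
# Assumes a "type: id" header line, indented "key value" lines below it,
# and a blank line between stanzas.
show_storage_stanza() {
    storage_id=$1
    cfg=${2:-/etc/pve/storage.cfg}
    awk -v id="$storage_id" '
        # Any line that does not start with whitespace is a stanza header.
        /^[^ \t]/ { split($0, hdr, ": "); in_block = (hdr[2] == id) }
        # Print the non-empty lines belonging to the requested stanza.
        in_block && NF { print }
    ' "$cfg"
}
```

Usage in the node's shell: `show_storage_stanza MyCloudEX2Ultra`.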

I added a backup job under Datacenter > Backup:

Node: All | Storage: MyCloudEX2Ultra | Schedule: 9 pm every day | Selection Mode: All | Compression: ZSTD | Mode: Snapshot | Enable: Yes | Retain for 3 months
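
To reproduce the error for a single guest without waiting for the 9 pm job, the options from the log can be passed to `vzdump` by hand, once against the NAS and once against local storage for comparison. A minimal sketch, assuming the default `local` storage accepts backups; `manual_backup` and the `DRY_RUN` switch are hypothetical helpers that only print the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Print a command, or run it when DRY_RUN=0 is set (default: print only).
run_or_echo() {
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@"
    else
        echo "$*"
    fi
}

# Back up one guest ($1) to one storage ($2) with the same mode and
# compression as the scheduled job.
manual_backup() {
    run_or_echo vzdump "$1" --storage "$2" --mode snapshot --compress zstd
}
```

Usage: `manual_backup 100 MyCloudEX2Ultra` and `manual_backup 100 local` print the commands; `DRY_RUN=0 manual_backup 100 local` actually runs one. If the local run succeeds while the NAS run fails with the same "Stale file handle", that points at the CIFS storage rather than the VM.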

The storage MyCloudEX2Ultra (pve-srv-01) also appears on the left under the pve-srv-01 node. I am logged in to the web interface as root the whole time. I am aware of the security risks, but this server is only for testing.

In the shell of the pve-srv-01 node:

`mount | grep MyCloudEX2Ultra`:

```
//192.168.2.134/srv-01 on /mnt/pve/MyCloudEX2Ultra type cifs (rw,relatime,vers=3.1.1,cache=strict,username=srv-01,uid=0,noforceuid,gid=0,noforcegid,addr=192.168.2.134,file_mode=0755,dir_mode=0755,soft,nounix,serverino,mapposix,rsize=4194304,wsize=4194304,bsize=1048576,retrans=1,echo_interval=60,actimeo=1,closetimeo=1)
```

`df -h`:

```
Filesystem              Size  Used Avail Use% Mounted on
udev                     12G     0   12G   0% /dev
tmpfs                   2.4G  1.4M  2.4G   1% /run
/dev/mapper/pve-root     94G  4.1G   86G   5% /
tmpfs                    12G   46M   12G   1% /dev/shm
tmpfs                   5.0M     0  5.0M   0% /run/lock
efivarfs                128K  121K  2.7K  98% /sys/firmware/efi/efivars
/dev/nvme0n1p2         1022M   12M 1011M   2% /boot/efi
/dev/fuse               128M   16K  128M   1% /etc/pve
//192.168.2.134/srv-01   64G   26G   39G  40% /mnt/pve/MyCloudEX2Ultra
tmpfs                   2.4G     0  2.4G   0% /run/user/0
```

`ls -ld /mnt/pve/MyCloudEX2Ultra/`:

```
drwxr-xr-x 2 root root 0 Sep 26 12:40 /mnt/pve/MyCloudEX2Ultra/
```

If it is relevant:

- My router is a Fritz!Box 7530.

- The user srv-01 does exist on the MyCloudEX2Ultra, and the NAS is in active use.

- I can reach the NAS with `ping`.
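
One more check that might help narrow it down: the log fails while opening `qemu-server.conf` inside a `.tmp` directory under `dump/` that vzdump appears to have just created, so a rough imitation of that step can show whether the share handles it outside of vzdump. This is only a sketch; the function name and the `probe-*.tmp` / `probe.conf` names are made up, and vzdump's real sequence of operations is not identical.

```shell
#!/bin/sh
# Rough imitation of the failing step: create a *.tmp directory under
# dump/, write a small file into it, read it back, then clean up.
# Returns 0 if every step worked, 1 otherwise.
probe_dump_dir() {
    dump_dir=${1:-/mnt/pve/MyCloudEX2Ultra/dump}
    tmp_dir="$dump_dir/probe-$$.tmp"

    mkdir -p "$tmp_dir" || return 1
    if printf 'probe\n' > "$tmp_dir/probe.conf" &&
       cat "$tmp_dir/probe.conf" > /dev/null; then
        status=0
    else
        status=1   # the failing command has already printed its error to stderr
    fi
    rm -rf "$tmp_dir"
    return "$status"
}
```

Usage in the node's shell: `probe_dump_dir && echo OK || echo FAILED`.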

I will be happy to answer any questions. Thank you all in advance.