With atime already disabled, you don't need to try this unless you actually need atime. Not writing atime at all has less overhead than updating it once per day (which is what atime=on plus relatime=on would do).

That doesn't make much sense. A consumer SSD isn't much faster than an HDD at sync writes, and sync writes are all a SLOG does. So with a consumer SSD as the SLOG, this won't be much better than having no SLOG at all.

Fair points! And honestly, I have a hard time believing it's related to not having a SLOG. Even if I set sync=disabled, the writes still drain out of memory at the same slow rate, around 20 MB/s, the same as when doing a copy on a guest or running ATTO or FIO. I have 64 GB of DDR4 memory on that node as well, and I see these speeds both when the memory fills up and the transfer pauses, and after the transfer finishes. Also, these drives test at 200+ MB/s outside of the mirror with the same types of data. I have 4 of them now, so I could put them in raidz1 or something to see if it's an issue with mirrors, but I have the exact same issue on another box with 8 10k rpm SAS drives in raidz2, which also test at similar speeds outside of the ZFS pool, 150-200 MB/s.

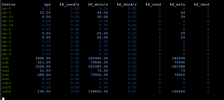

Interestingly, when testing with ATTO in Windows, iostat shows zd0 writing at 200+ MB/s, mostly as we'd expect (it's a striped mirror, so it should be higher, but that's good enough), yet no file is being written to the drive. Nothing shows up; it just seems like data is being streamed to it? Then, of course, a CrystalDiskMark or file transfer test shows the low 20 MB/s speeds on zd0 and around 10 MB/s on the individual drives.
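To put the "should be higher" remark into numbers, here's a rough back-of-the-envelope sketch. It assumes the striped mirror is the four SSDs arranged as two 2-way mirror vdevs, and it uses the idealized rules of thumb that a pool of mirrors writes at about (number of vdevs × one disk) and a single raidz vdev at about (data disks × one disk) for large sequential writes. Real pools land below these figures, so treat them as loose upper bounds rather than predictions; the function names are just for illustration, and the raidz2 comparison assumes the SAS box sees roughly the same ~20 MB/s.

```python
def mirror_pool_write_mb_s(mirror_vdevs: int, disk_mb_s: float) -> float:
    # Every disk in a mirror vdev gets a full copy of the data, so one vdev
    # writes at roughly single-disk speed; ZFS spreads writes across vdevs,
    # so the idealized pool rate scales with the number of vdevs.
    return mirror_vdevs * disk_mb_s


def raidz_write_mb_s(disks: int, parity: int, disk_mb_s: float) -> float:
    # Rough upper bound for large sequential writes to one raidz vdev:
    # data disks (total minus parity) times single-disk speed.
    if parity >= disks:
        raise ValueError("a raidz vdev needs more disks than parity devices")
    return (disks - parity) * disk_mb_s


if __name__ == "__main__":
    observed = 20.0  # MB/s, the rate reported in this thread
    # Assumption: 4 SSDs as 2 mirror vdevs, ~200 MB/s per drive.
    mirror = mirror_pool_write_mb_s(2, 200.0)
    # Assumption: 8 SAS disks in one raidz2 vdev, ~150 MB/s per drive (low end).
    raidz2 = raidz_write_mb_s(8, 2, 150.0)
    print(f"mirror ideal ~{mirror:.0f} MB/s; 20 MB/s is {observed / mirror:.0%} of that")
    print(f"raidz2 ideal ~{raidz2:.0f} MB/s; 20 MB/s is {observed / raidz2:.0%} of that")
```

Even allowing for a large gap between the idealized and real numbers, 20 MB/s is only a few percent of either estimate, which supports the point that this looks like more than a tuning issue.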

I know there are lots of confounding variables here, but the speeds I'm seeing on all the Proxmox boxes with ZFS mirrors or raidz2 are so low that it just doesn't feel like a performance tuning problem.

Apart from getting an enterprise SSD, is there anything else I can test here? I'll put it on the list, but I'm hoping to try something else in the meantime, haha. If I need to start from scratch and follow a specific setup path, I can do that: I can move the VMs to another node and rebuild the one we're testing on. I just need to know what to change, since I've had this issue on several installs.

Appreciate all the help everyone.