Hello everyone,

I've been working on an issue for a little while now: I'm not seeing the expected write performance from a ZFS array. First, I'd like to go over the system setup and specs:

This is a temporary setup. I have a Windows Veeam VM (data drive on local-vm-zfs) that takes hourly backups of an Ubuntu SMB VM (data drive on local-vm-zfs2). In the permanent setup they are on separate nodes, but since they're on a single node for now, I put their storage drives on two different ZFS mirrors.

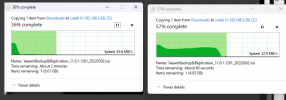

Is this setup the problem in and of itself? My read and write speeds seem to be about half of what I'd expect for sequential writes:

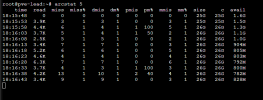

It ranges from 30-110MB/s. iotop on the host shows it ranging from 80-180, sometimes lower, and overall very sporadic. I do not believe the drives are the issue, as I have tried offlining both of them to see if one had failed. They were purchased a year apart from each other, so a batch problem is unlikely.

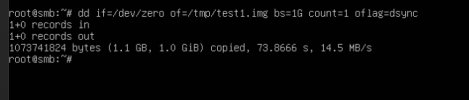

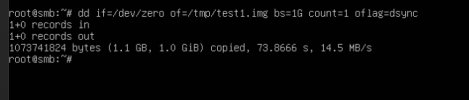

dd is somehow even slower, and so is a CrystalDiskMark benchmark in the Windows Veeam VM: both come in at around 10-20MB/s.

I have tested this after rebooting and with all VMs other than the SMB VM turned off. The fastest I've seen so far is a consistent 70MB/s, or spiking between 30 and 100MB/s.
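In case it helps anyone reproduce a dd-style test outside of dd, here is a minimal Python sketch of a sequential write benchmark. It is not what produced the numbers above; the function name, the default sizes, and the temporary target path are all hypothetical. It reuses one random block so that ZFS compression cannot inflate the result, and it calls `os.fsync` so the time includes getting the data to disk rather than only into RAM.

```python
import os
import tempfile
import time


def sequential_write_mb_per_s(path, total_mb=64, block_kb=128, sync_each_block=False):
    """Write roughly total_mb of data to path in block_kb chunks; return MB/s.

    All parameter names and defaults are illustrative assumptions.
    """
    # One random block, reused for every write, so compression gains little.
    block = os.urandom(block_kb * 1024)
    blocks = (total_mb * 1024) // block_kb
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
            if sync_each_block:
                # Force every block to stable storage (worst case for sync writes).
                f.flush()
                os.fsync(f.fileno())
        # Always flush at the end so cached data is included in the timing.
        f.flush()
        os.fsync(f.fileno())
    elapsed = time.perf_counter() - start
    written_mb = blocks * block_kb / 1024
    return written_mb / elapsed


if __name__ == "__main__":
    # Writes to a temporary directory; point the path at the pool under test instead.
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "bench.bin")
        print(f"async: {sequential_write_mb_per_s(target):.1f} MB/s")
        print(f"sync:  {sequential_write_mb_per_s(target, sync_each_block=True):.1f} MB/s")
```

Comparing the two printed figures on the same dataset gives a rough idea of how much of the slowdown comes from synchronous writes.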

If I set sync=disabled, I am able to verify that the issue is not the network: speeds spike to 700MB/s, and I can see the RAM on the node filling up and then slowly being written out to the drives. If the ZFS cache fills before the write is done, the upload speed drops (sometimes to 0) and eventually goes back to spiking between 30 and 100MB/s.
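The fill-then-stall behaviour is roughly what simple arithmetic predicts when data arrives faster than the disks can drain it. Below is a small sketch of that estimate. The 700MB/s ingest and 70MB/s drain rates are the figures from this post, while the 4096MB buffer size is purely a hypothetical placeholder, since I have not measured how much dirty data ZFS holds on this node.

```python
def seconds_until_buffer_full(buffer_mb, ingest_mb_s, drain_mb_s):
    """Estimate how long a write buffer can absorb a burst before it is full.

    The buffer grows at (ingest - drain) MB/s; if the disks keep up,
    it never fills.
    """
    if ingest_mb_s <= drain_mb_s:
        return float("inf")
    return buffer_mb / (ingest_mb_s - drain_mb_s)


if __name__ == "__main__":
    # buffer_mb=4096 is an assumed value, not a measurement from this system.
    t = seconds_until_buffer_full(buffer_mb=4096, ingest_mb_s=700, drain_mb_s=70)
    print(f"buffer full after about {t:.1f} s")
```

With those numbers the burst lasts only a few seconds before the transfer has to fall back to whatever the disks can sustain, which would be consistent with the drop described above.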

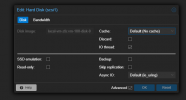

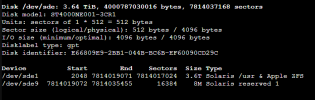

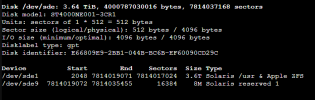

I believe this started after I created the second ZFS mirror and migrated the VMs over. Also, the drives are all plugged directly into the SATA ports on the motherboard. ZFS recordsize for both arrays is 128k, block size for the drives:

Let me know if there is any more information I can provide.

Thanks!

Caleb

System setup and specs:

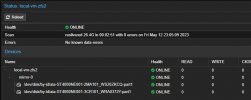

- I have two servers in a cluster, both with a ZFS mirror named local-vm-zfs.

- The node in question has two mirrors, local-vm-zfs and local-vm-zfs2. local-vm-zfs has two Toshiba 4TB N300 CMR drives and local-vm-zfs2 has two Seagate IronWolf Pro drives.

- This node has an AMD Ryzen 5500 and 64GB of DDR4 2400MHz ECC RAM

- All nodes and the sending PC are connected to a TP-Link 10G managed switch

- SATA cables are all new
