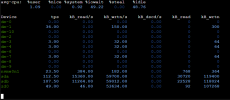

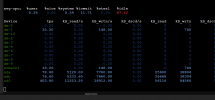

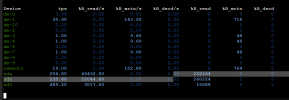

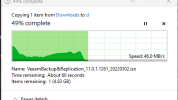

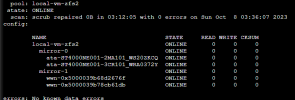

Update: I just tried creating a new ZFS pool on the same one where I had created the test LVM pool, and I also turned off compression as a test. Performance was the same. I used CrystalDiskMark for both tests with a 16GB test file. Task Manager shows the speed climbing to 120-160MB/s for a second, as expected, then dropping back down to zero. It repeats this until the test is done, with varying peak speeds. I've attached a file with the output of "zfs get all" for this node; it should all be default.

Edit:

I think you asked this earlier as well: when I do a disk move from an SSD to the ZFS pool, it runs at around 100-160MB/s, which is more than acceptable and about what I would see over SMB as well.

Edit again:

I will eventually be bringing up a third node, which will run my and my partner's PCs as VMs. It will have a single ZFS mirror for now, serving both the Proxmox host itself and the VM boot drives, and it will use NVMe SSDs. I know I just complained about using an SSD cache drive, so maybe I'll give that a go once I get the write speed sorted out for the spinning drives themselves. A bigger issue is that I/O wait skyrockets to 50-80% during sustained writes on the current ZFS mirror, so we'll see whether that issue persists with the NVMe drives.
