Hello,

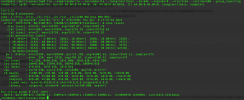

We run a 14-node Proxmox cluster (6.3.3) with an attached 7-node Ceph cluster (Nautilus 14.2.16) as storage. Our Proxmox Backup Server (1.0.8, also tried with 1.0.11) runs on bare metal inside the Proxmox cluster, with a roughly 70 TiB ZFS pool as backup storage (128 GB RAM, SSDs for read and write caching). Everything is connected via a 10 GbE network.
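To put the numbers below in context, the theoretical ceiling of a single 10 Gbit/s link can be worked out quickly. This is a minimal sketch that assumes decimal gigabits and ignores Ethernet/TCP/TLS overhead; the function name is hypothetical, and real transfers will land somewhat below this value.

```python
def link_ceiling_mib_s(gbit_per_s: float) -> float:
    """Convert a link's raw line rate to MiB/s.

    Assumes decimal gigabits (1 Gbit = 10**9 bits) and ignores all
    protocol overhead, so this is an upper bound, not an expected rate.
    """
    bytes_per_s = gbit_per_s * 1e9 / 8  # bits -> bytes
    return bytes_per_s / (1024 ** 2)    # bytes -> MiB


if __name__ == "__main__":
    print(f"10 Gbit/s ceiling: {link_ceiling_mib_s(10):.0f} MiB/s")
```

For a 10 Gbit/s link this gives roughly 1192 MiB/s before overhead.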

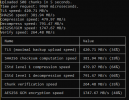

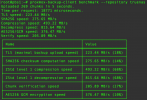

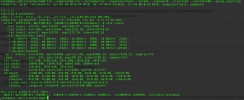

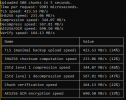

Here is a current write benchmark from one of the nodes to the backup storage:

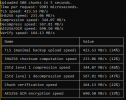

`proxmox-backup-client benchmark --repository root@pam@10.10.5.6:datastore`

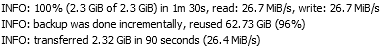

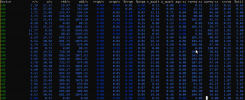

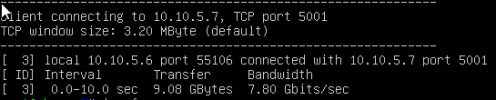

An `rbd export` test shows:

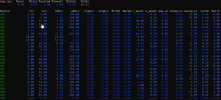

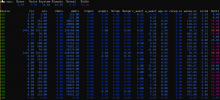

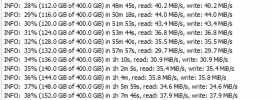

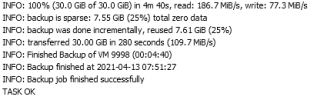

Compared to those numbers, however, the backup speed is slow:

Any ideas where the problem might be?

Thanks in advance!