Hello!

I'm seeing some strange behavior on a Proxmox installation with a ZFS pool (RAIDZ2, 12x10 TB) hosting a single VM with 72 TB allocated. Since September, the volume usage has gone from 76.89 TB to 88.55 TB, filling the pool to 100%.

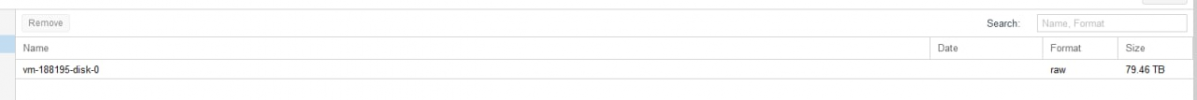

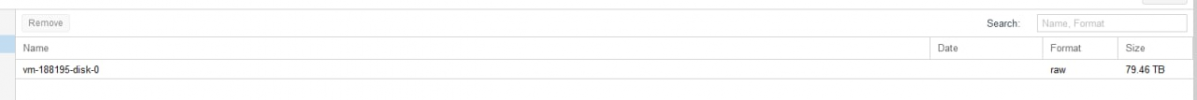

The GUI (as you can see in the picture above) reports that the .raw takes 79.46 TB. The VM itself is only using ~40% of the allocated space (32.97 TB).

I do not understand why the pool usage went to nearly 100% without the VM volume itself filling up. We're not using snapshots, compression is enabled, and now I'm even locked out of the VM (it doesn't boot with the filesystem in read-only mode), so I'm somewhat afraid that I'm going to lose the data.

Strikingly, the volume has grown by about 1.8 TB per week since the growth started (~Sept 9). The data on the VM didn't grow at that rate, and more than half of the space in the VM image is still free.
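For anyone who wants to double-check the arithmetic, here is a minimal Python sketch that uses only the figures quoted in this post. The variable names are mine and purely illustrative; it does not query ZFS in any way.

```python
# Figures quoted in this post, in TB. Names are illustrative only.
usage_start_tb = 76.89      # volume usage when the growth started (~Sept 9)
usage_now_tb = 88.55        # volume usage now
growth_per_week_tb = 1.8    # approximate observed growth rate
vm_allocated_tb = 72.0      # size allocated to the VM disk
vm_used_tb = 32.97          # space actually used inside the VM

# Total growth of the volume and how many weeks that is at the observed rate.
growth_tb = usage_now_tb - usage_start_tb
weeks_of_growth = growth_tb / growth_per_week_tb

# Fraction of the VM disk that is still free from the guest's point of view.
vm_free_fraction = 1 - vm_used_tb / vm_allocated_tb

print(f"total growth: {growth_tb:.2f} TB")
print(f"weeks at {growth_per_week_tb} TB/week: {weeks_of_growth:.1f}")
print(f"free inside the VM disk: {vm_free_fraction:.0%}")
```

So the volume grew by roughly 11.7 TB over about six and a half weeks, while a bit more than half of the 72 TB allocated to the guest is still free.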

I'm attaching a .txt file with the output of a number of commands we ran, in case someone with a better eye (and more ZFS knowledge) can spot what is wrong here.

Any ideas why this is happening? Is there any way to reclaim the empty space being used? We already tried running a ZFS trim, but the results are the same.

If you have any suggestions on how we can recover from the 100% full pool, I would appreciate those too!

Thanks.


Command output:

    root@zfsbox1:/dev/zvol/ZFS1# zpool list
    NAME   SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
    ZFS1   109T   106T  3.41T        -         -    64%    96%  1.00x    ONLINE  -

    root@zfsbox1:/dev/zvol/ZFS1# zfs list
    NAME                    USED  AVAIL  REFER  MOUNTPOINT
    ZFS1                   80.5T  1.89M   219K  /ZFS1
    ZFS1/vm-188195-disk-0  80.5T  1.89M  80.5T  -
