We have multiple (9 now, 15 on their way) Proxmox hosts in our 2 datacenters.

Each node has an eBGP connection to two switches (point-to-point /31 subnets) for the underlay, each with a unique private AS number (e.g. SW015 with AS 4200000102 -- PRXMX01 with AS 4200000103).

In the SDN controller, each host has EVPN peering set up to 4 route reflectors (iBGP with AS 65000): two in each DC, Huawei S6730 series with VXLAN licences.

We don't use the Proxmox node as VTEP, but instead we do this at the route-reflector(s) in the DC where the firewall is located.

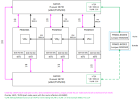

The FRR config is below, and a network schematic is attached.

Here is our problem:

If we look at the EVPN MAC routing table on our route reflectors, we see the MAC addresses of all the Virtual Machines.

We can ping all Virtual Machines from the firewall and vice versa. So there is no reason to doubt our setup and config, you would say.

But if we try to ping between the Virtual Machines, it sometimes works and sometimes does not.

If we re-apply the SDN config, a completely different set of VMs can't ping each other.

There is absolutely no logic in who can ping whom. In all situations, the Virtual Machine can still ping the firewall and vice versa.
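For anyone who wants to compare a working VM pair with a failing one, the EVPN state on a node can be inspected with FRR commands along these lines. This is only a sketch: it assumes the commands are entered in `vtysh` on the Proxmox host, and no output from our setup is shown here.

```
show bgp l2vpn evpn summary
show bgp l2vpn evpn route type macip
show evpn vni
show evpn mac vni all
show evpn arp-cache vni all
```

The first two show whether the sessions to the route reflectors are up and which MAC/IP (type-2) routes are received; the last three show what zebra has actually installed per VNI.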

Help is kindly appreciated!

Code:

    Current configuration:
    !
    frr version 8.5.2
    frr defaults datacenter
    hostname prxmx01
    log syslog informational
    service integrated-vtysh-config
    !
    router bgp 4200000108
     bgp router-id 10.0.103.50
     no bgp hard-administrative-reset
     no bgp default ipv4-unicast
     bgp disable-ebgp-connected-route-check
     coalesce-time 1000
     no bgp graceful-restart notification
     bgp bestpath as-path multipath-relax
     neighbor BGP peer-group
     neighbor BGP remote-as external
     neighbor BGP bfd
     neighbor BGP ebgp-multihop 15
     neighbor VTEP peer-group
     neighbor VTEP remote-as external
     neighbor VTEP local-as 65000
     neighbor VTEP bfd
     neighbor VTEP ebgp-multihop 15
     neighbor VTEP update-source lo200
     neighbor 10.0.101.14 peer-group BGP
     neighbor 10.0.101.16 peer-group BGP
     neighbor 10.0.102.3 peer-group VTEP
     neighbor 10.0.102.4 peer-group VTEP
     neighbor 10.0.103.15 peer-group VTEP
     neighbor 10.0.103.20 peer-group VTEP
     !
     address-family ipv4 unicast
      network 10.0.103.50/32
      neighbor BGP activate
      neighbor BGP soft-reconfiguration inbound
     exit-address-family
     !
     address-family l2vpn evpn
      neighbor VTEP activate
      neighbor VTEP route-map MAP_VTEP_IN in
      neighbor VTEP route-map MAP_VTEP_OUT out
      advertise-all-vni
      autort as 65000
     exit-address-family
    exit
    !
    ip prefix-list loopbacks_ips seq 10 permit 0.0.0.0/0 le 32
    !
    route-map MAP_VTEP_IN permit 1
    exit
    !
    route-map MAP_VTEP_OUT permit 1
    exit
    !
    route-map correct_src permit 1
     match ip address prefix-list loopbacks_ips
     set src 10.0.103.50
    exit
    !
    ip protocol bgp route-map correct_src
    !
    end