Hi,

We want to use the Salt Cloud Proxmox provider to deploy LXC containers and QEMU VMs. I can connect to the Proxmox host and list images and nodes, but when I try to deploy a container, I get the following error:

[DEBUG ] MasterEvent PULL socket URI: /var/run/salt/master/master_event_pull.ipc

[DEBUG ] Initializing new IPCClient for path: /var/run/salt/master/master_event_pull.ipc

[DEBUG ] Sending event: tag = salt/cloud/testubuntu/requesting; data = {'_stamp': '2017-02-28T19:33:29.698622', 'event': 'requesting instance', 'kwargs': {'password': 'topsecret', 'hostname': 'testubuntu', 'vmid': 147, 'ostemplate': 'local:vztmpl/ubuntu-16.04-standard_16.04-1_amd64.tar.gz', 'net0': 'bridge=vmbr0,ip=192.168.100.155/24,name=eth0,type=veth'}}

[DEBUG ] Preparing to generate a node using these parameters: {'password': 'topsecret', 'hostname': 'testubuntu', 'vmid': 147, 'ostemplate': 'local:vztmpl/ubuntu-16.04-standard_16.04-1_amd64.tar.gz', 'net0': 'bridge=vmbr0,ip=192.168.100.155/24,name=eth0,type=veth'}

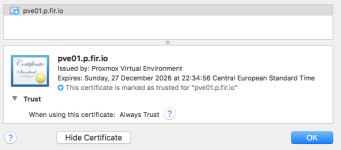

[DEBUG ] post: https://pve01.p.fir.io:8006/api2/json/nodes/pve01/lxc ({'password': 'topsecret', 'hostname': 'testubuntu', 'vmid': 147, 'ostemplate': 'local:vztmpl/ubuntu-16.04-standard_16.04-1_amd64.tar.gz', 'net0': 'bridge=vmbr0,ip=192.168.100.155/24,name=eth0,type=veth'})

[ERROR ] Error creating testubuntu on PROXMOX

The following exception was thrown when trying to run the initial deployment:

400 Client Error: Parameter verification failed.

Traceback (most recent call last):

File "/usr/lib/python2.7/site-packages/salt/cloud/clouds/proxmox.py", line 547, in create

data = create_node(vm_, newid)

File "/usr/lib/python2.7/site-packages/salt/cloud/clouds/proxmox.py", line 728, in create_node

node = query('post', 'nodes/{0}/{1}'.format(vmhost, vm_['technology']), newnode)

File "/usr/lib/python2.7/site-packages/salt/cloud/clouds/proxmox.py", line 178, in query

response.raise_for_status()

File "/usr/lib/python2.7/site-packages/requests/models.py", line 834, in raise_for_status

raise HTTPError(http_error_msg, response=self)

HTTPError: 400 Client Error: Parameter verification failed.

Error: There was a profile error: Failed to deploy VM
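The traceback only shows the HTTP status line, not which parameter was rejected. As a rough debugging sketch, assuming the Proxmox API puts per-parameter messages under an `errors` key in the JSON body of a 400 response, the body could be decoded like this. The function name `describe_parameter_errors` and the sample body are made up for illustration; they are not part of Salt or Proxmox, and the sample is not real output from this host.

```python
import json


def describe_parameter_errors(body_text):
    """Return one readable line per rejected parameter.

    Assumption: the body looks like {"data": null, "errors": {"<param>": "<msg>"}}.
    """
    try:
        body = json.loads(body_text)
    except ValueError:
        # Not JSON at all: hand back a truncated copy of the raw text.
        return ["(response body is not JSON: %r)" % body_text[:200]]

    if not isinstance(body, dict):
        return ["(unexpected JSON body: %r)" % (body,)]

    errors = body.get("errors") or {}
    if not isinstance(errors, dict):
        return [str(errors).strip()]

    # Sort by parameter name so the output is stable between runs.
    return ["%s: %s" % (name, str(msg).strip()) for name, msg in sorted(errors.items())]


# Hypothetical body, only to show the expected shape.
sample = '{"data": null, "errors": {"net0": "invalid format\\n"}}'
for line in describe_parameter_errors(sample):
    print(line)
```

To try this against the real failure, the body would have to be captured before `response.raise_for_status()` is called in `query()`, for example by logging `response.text` there.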

I use the default cloud profile as described on this page: docs.saltstack.com/en/latest/topics/cloud/proxmox.html

The file lives in /etc/salt/cloud.profiles.d and looks like this:

admin@salt ~ $ cat /etc/salt/cloud.profiles.d/b-pve-test-profile.conf

proxmox-ubuntu:

provider: b-pve-provider

image: local:vztmpl/ubuntu-16.04-standard_16.04-1_amd64.tar.gz

technology: lxc

# host needs to be set to the configured name of the proxmox host

# and not the ip address or FQDN of the server

host: pve01

ip_address: 192.168.100.155

password: topsecret
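For reference, the debug log shows that `ip_address` from this profile ends up in the request as a `net0` string. Here is a small sketch that reproduces that string, so the value sent to the API can be compared against what the API viewer documents for `net0`. The helper `build_net0` and its defaults (`vmbr0`, `eth0`, a /24 prefix) are hypothetical and only mirror the values in the log above; this is not Salt's actual code.

```python
def build_net0(ip_address, prefix_len=24, bridge="vmbr0", name="eth0"):
    """Build a net0 value in the key=value,key=value form seen in the debug log."""
    fields = {
        "bridge": bridge,
        "ip": "%s/%d" % (ip_address, prefix_len),
        "name": name,
        "type": "veth",
    }
    # Keys are emitted in alphabetical order, matching the order in the log.
    return ",".join("%s=%s" % (key, fields[key]) for key in sorted(fields))


print(build_net0("192.168.100.155"))
```

With the profile's address this yields the same `net0` value that appears in the `post:` debug line.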

I thought it would be possible to use any of the api2 parameters in the conf file, such as trunks=vlanid (see pve.proxmox.com/pve-docs/api-viewer/index.html).

Thanks,

Michael
